Updated 15:36 BST. OpenAI appears to have got it back online impressively swiftly, saying at 15:33 that "a fix has been implemented and we are gradually seeing the services recover. We are currently monitoring the situation."

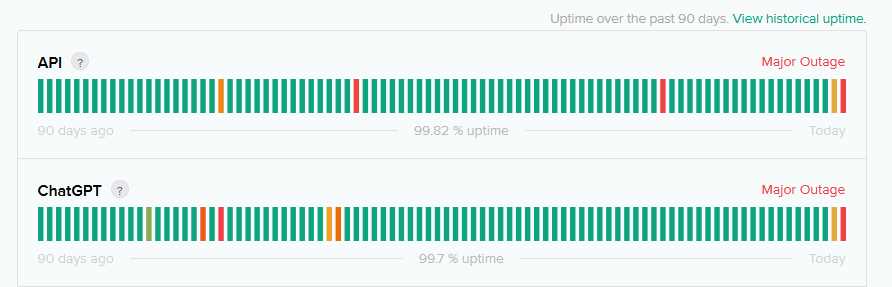

ChatGPT has suffered a major outage across its web interface and API.

The ChatGPT outage, which extends to paying customers, comes a day after OpenAI unveiled a host of new offerings and a new model.

ChatGPT also suffered intermittent outages a day earlier, November 7.

OpenAI said at 14:50 BST that it had “identified an issue resulting in high error rates across the API and ChatGPT, and we are working on remediation.”

The cause was not immediately clear, but the outage will reinforce concerns among enterprise customers, who rely on the API to power their own customer-facing LLM-based offerings, that OpenAI needs to work on its resilience.

The speed at which the AI company has scaled has put unprecedented pressure on its infrastructure, even with the backing of deep-pocketed Microsoft.

ChatGPT outage: GPU RAM has been a bottleneck.

As one OpenAI engineer recently told developers at a conference: "We've discovered that bottlenecks can arise from everywhere: memory bandwidth, network bandwidth between GPUs, between nodes, and other areas. Furthermore, the location of those bottlenecks will change dramatically based on the model size, architecture, and usage patterns."

In particular, caching the “math” ChatGPT has done (the KV cache) – to turn a user's request into tokens, then a vector of numbers, multiply that through “hundreds of billions of model weights” and then generate “your conference speech” – places big demands on memory in its data centres.

OpenAI’s Evan Morikawa earlier said: “You have to store this cache in GPU in this very special HBM3 memory; because pushing data across a PCIe bus is actually two orders of magnitude slower than the 3TB a second I get from this memory… it's [HBM3] so fast because it is physically bonded to the GPUs and stacked in layers with thousands of pins for massively paralleled data throughput…” (This memory is also used to store model weights.)
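
For a sense of scale, the gap Morikawa describes can be put into rough numbers. The sketch below is a back-of-envelope illustration only: the 3TB a second figure and the "two orders of magnitude" ratio come from the quote above, while the 1GB cache size is a hypothetical value chosen for illustration, not a figure from OpenAI.

```python
# Back-of-envelope comparison of the two transfer paths described in the quote.
HBM3_BYTES_PER_S = 3e12   # "3TB a second", as quoted
SLOWDOWN = 100            # "two orders of magnitude slower", as quoted
PCIE_BYTES_PER_S = HBM3_BYTES_PER_S / SLOWDOWN


def transfer_ms(num_bytes: float, bytes_per_s: float) -> float:
    """Time in milliseconds to move num_bytes at a given bandwidth."""
    return num_bytes / bytes_per_s * 1000


# Hypothetical cache size, assumed purely for illustration.
cache_bytes = 1e9  # 1 GB

hbm_ms = transfer_ms(cache_bytes, HBM3_BYTES_PER_S)
pcie_ms = transfer_ms(cache_bytes, PCIE_BYTES_PER_S)

print(f"HBM3: {hbm_ms:.2f} ms")   # HBM3: 0.33 ms
print(f"PCIe: {pcie_ms:.2f} ms")  # PCIe: 33.33 ms
```

Under those assumptions, reading the cache from on-package memory takes a fraction of a millisecond, while pulling the same data over the slower bus takes tens of milliseconds per request.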

“So like any cache, we expire once this fills up, oldest first. If we have a cache miss, we need to recompute your whole ChatGPT conversation again. Since we share GPU RAM across all the different users, it's possible your conversation can get evicted if it goes idle for too long. This has a couple of implications. One is that GPU RAM is actually one of our most valuable commodities. It's frequently the bottleneck, not necessarily compute. And two, cache misses have this weird massive nonlinear effect into how much work the GPUs are doing because we suddenly need to start recomputing all this stuff. This means when scaling ChatGPT, there wasn't some simple CPU utilization metric to look at. We had to look at this KV cache utilization and maximize all the GPU RAM that we had.”

Both OpenAI and other large language model companies are "discovering how easily we can get memory-bound", he added, noting that this limits the value of new GPUs: "Nvidia kind of had no way of knowing this themselves since the H100 designs got locked in years ago and future ML architectures and sizes have been very difficult for us and anybody to predict. In solving all these GPU challenges, we've learned several key lessons [including] how important it is to treat this as a systems engineering challenge."
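
The eviction behaviour Morikawa describes (a shared cache that expires the oldest conversation first, with a miss forcing the whole conversation to be recomputed) can be illustrated with a toy simulation. This is a minimal sketch, not OpenAI's implementation: the `KVCacheSim` class, its method names, and the use of token counts as a stand-in for GPU memory are all assumptions made for illustration.

```python
from collections import OrderedDict


class KVCacheSim:
    """Toy model of a shared KV cache with oldest-first eviction (hypothetical)."""

    def __init__(self, capacity_tokens: int):
        self.capacity_tokens = capacity_tokens
        self.lengths = {}              # conversation id -> total tokens so far
        self.resident = OrderedDict()  # cached conversations, least recently used first
        self.used_tokens = 0

    def turn(self, conv_id: str, new_tokens: int) -> int:
        """Process one turn; return how many tokens had to be computed."""
        history = self.lengths.get(conv_id, 0)
        if conv_id in self.resident:
            # Cache hit: only the new tokens need computing.
            work = new_tokens
            self.used_tokens -= self.resident.pop(conv_id)
        else:
            # Cache miss: the whole conversation is recomputed.
            work = history + new_tokens
        total = history + new_tokens
        self.lengths[conv_id] = total
        # Evict the longest-idle conversations until the new entry fits.
        while self.resident and self.used_tokens + total > self.capacity_tokens:
            _, freed = self.resident.popitem(last=False)
            self.used_tokens -= freed
        if total <= self.capacity_tokens:
            self.resident[conv_id] = total  # re-inserted as most recently used
            self.used_tokens += total
        return work


sim = KVCacheSim(capacity_tokens=100)
work = [
    sim.turn("a", 40),  # miss, new conversation: 40 tokens computed
    sim.turn("b", 40),  # miss, new conversation: 40 tokens computed
    sim.turn("a", 10),  # hit: only the 10 new tokens computed
    sim.turn("c", 30),  # miss, and "b" (idle longest) is evicted to make room
    sim.turn("b", 5),   # miss: all 40 old tokens plus 5 new are recomputed
]
print(work)  # [40, 40, 10, 30, 45]
```

The last turn shows the nonlinear effect in miniature: a five-token follow-up costs 45 tokens of work because the conversation had been evicted while idle.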

Ed: Needless to say the current outage may have nothing to do with memory!