Shortly after the launch of ChatGPT, OpenAI’s security team noticed that a group had reverse-engineered and was abusing its internal API.

In an amusing presentation, OpenAI Engineering Manager Evan Morikawa detailed how an engineer “discovered traffic on our endpoints that didn't quite match the signature of our standard client.”

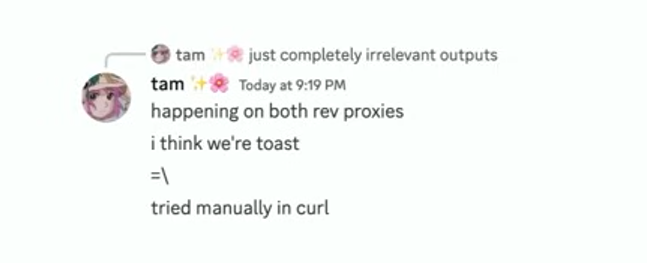

Spotting the abuse, the team engineered the LLM to behave like a cat in response to every prompt, then lurked in the group’s Discord to watch the incident unfold and the realisation dawn that they were “toast”.

Morikawa’s anecdote at the LeadDev West Coast 2023 event was, however, the outro to a more sober talk on the early challenges of scaling ChatGPT. It emphasised how GPU and HBM3 shortages constrained growth, and the unexpected engineering challenges that emerged due to model behaviour.

"We've discovered that bottlenecks can arise from everywhere: memory bandwidth, network bandwidth between GPUs, between nodes, and other areas. Furthermore, the location of those bottlenecks will change dramatically based on the model size, architecture, and usage patterns."

“Despite the internal buzz and anticipation of the platform possibly going viral, there were concerns. We had a limited supply of GPUs and were aware of the potential to run out,” Morikawa recalled, revealing that traffic spiked so fast that the team initially thought it was a DDoS attack.

"GPU RAM is actually one of our most valuable commodities. It's frequently the bottleneck..."

OpenAI runs NVIDIA A100 GPUs, equipped with special High Bandwidth Memory (HBM) and NVIDIA’s NVLink interconnect “that has their own switches on the board and it also is connected to the outside world through both Ethernet and InfiniBand which can give us 200 and soon 400 gigabits of network for each and every single one of those cards,” he said.

Yet OpenAI rapidly learned that scaling the company posed unique architectural challenges that neither it nor its GPU creators had seen coming.


In particular, caching the “math” ChatGPT has already done (the KV cache) places big demands on memory in its data centres. That math turns your request into tokens, then a vector of numbers, multiplies it through “hundreds of billions of model weights” and then generates “your conference speech”. The memory involved is precious and expensive, and had to be optimised to avoid major performance issues for customers.
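
To see why that cache matters, here is a minimal sketch that counts how many token positions a model would have to push through its weights while generating a reply, with and without a cache. It is not OpenAI's code: the function name and the numbers are hypothetical, and it assumes one token is generated per step.

```python
def positions_processed(prompt_len: int, new_tokens: int, use_cache: bool) -> int:
    """Count token positions run through the model while generating a reply.

    Hypothetical toy model: one new token is generated per step.
    """
    total = 0
    for step in range(new_tokens):
        context_len = prompt_len + step
        if use_cache and step > 0:
            # Earlier positions are already cached; only the newest token is processed.
            total += 1
        else:
            # No usable cache: the whole context is processed again.
            total += context_len
    return total


with_cache = positions_processed(prompt_len=100, new_tokens=50, use_cache=True)
without_cache = positions_processed(prompt_len=100, new_tokens=50, use_cache=False)
print(with_cache, without_cache)  # 149 6225
```

In this toy model the uncached work grows roughly with the square of the reply length, which is the saving the cache buys in exchange for memory.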

Indeed, making the most of its resources was an ongoing challenge for OpenAI, he emphasised, and a complex, ever-evolving one, given that model size and usage patterns dramatically change how and where infrastructure will be strained.

“You have to store this cache in GPU in this very special HBM3 memory; because pushing data across a PCIe bus is actually two orders of magnitude slower than the 3TB a second I get from this memory… it's [HBM3] so fast because it is physically bonded to the GPUs and stacked in layers with thousands of pins for massively paralleled data throughput,” he explained – but it's also expensive, limited and most of it is spent storing model weights.
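
A rough back-of-the-envelope sketch of that gap, using only the two figures quoted in the talk (3TB a second and "two orders of magnitude"). The 1 GB cache size is a made-up number for illustration, not something OpenAI disclosed.

```python
HBM_BYTES_PER_SECOND = 3e12   # the "3TB a second" quoted in the talk
PCIE_SLOWDOWN = 100           # "two orders of magnitude slower", per the quote


def transfer_seconds(num_bytes: float, bytes_per_second: float) -> float:
    """Time to move num_bytes at a sustained bandwidth (ignores latency)."""
    return num_bytes / bytes_per_second


cache_bytes = 1e9  # hypothetical 1 GB of cached conversation state

hbm_s = transfer_seconds(cache_bytes, HBM_BYTES_PER_SECOND)
pcie_s = transfer_seconds(cache_bytes, HBM_BYTES_PER_SECOND / PCIE_SLOWDOWN)

print(f"HBM:  {hbm_s * 1e3:.2f} ms")   # about a third of a millisecond
print(f"PCIe: {pcie_s * 1e3:.2f} ms")  # about 33 milliseconds
```

Under these assumptions, reading the cache from on-package memory takes a fraction of a millisecond, while pulling the same data across the bus takes tens of milliseconds on every request.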

"So like any cache, we expire once this fills up, oldest first. If we have a cache miss, we need to recompute your whole ChatGPT conversation again. Since we share GPU RAM across all the different users, it's possible your conversation can get evicted if it goes idle for too long. This has a couple of implications. One is that GPU RAM is actually one of our most valuable commodities. It's frequently the bottleneck, not necessarily compute. And two, cache misses have this weird massive nonlinear effect into how much work the GPUs are doing because we suddenly need to start recomputing all this stuff. And this means when scaling ChatGPT, there wasn't some simple CPU utilization metric to look at. We had to look at this KV cache utilization and maximize all the GPU RAM that we had," he reflected – emphasising that asking ChatGPT to summarize an essay has vastly different performance characteristics than asking it to write one.

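The eviction behaviour Morikawa describes can be sketched as a small least-recently-used cache shared between conversations. This is a toy illustration, not OpenAI's implementation: the class name `KVCachePool`, its methods and the token counts are all hypothetical, and "work" is simply the number of tokens that must be processed for a turn.

```python
from collections import OrderedDict


class KVCachePool:
    """Toy shared cache with a fixed token budget; evicts the oldest entry first."""

    def __init__(self, capacity_tokens: int) -> None:
        self.capacity_tokens = capacity_tokens
        self.used_tokens = 0
        # conversation id -> number of tokens currently cached, oldest first
        self.entries = OrderedDict()

    def _evict_until_fits(self, needed: int) -> None:
        while self.entries and self.used_tokens + needed > self.capacity_tokens:
            _, freed = self.entries.popitem(last=False)  # drop the oldest entry
            self.used_tokens -= freed

    def handle_turn(self, conv_id: str, history_tokens: int, new_tokens: int) -> int:
        """Return how many tokens must be processed to serve this turn."""
        total = history_tokens + new_tokens
        cached = self.entries.pop(conv_id, 0)  # 0 means a cache miss
        self.used_tokens -= cached
        work = total - cached  # a miss recomputes the whole conversation
        self._evict_until_fits(total)
        if total <= self.capacity_tokens:
            self.entries[conv_id] = total  # re-insert as the newest entry
            self.used_tokens += total
        return work


pool = KVCachePool(capacity_tokens=1000)
first = pool.handle_turn("a", history_tokens=0, new_tokens=400)    # new conversation
hit = pool.handle_turn("a", history_tokens=400, new_tokens=50)     # cache hit
other = pool.handle_turn("b", history_tokens=0, new_tokens=600)    # evicts "a"
miss = pool.handle_turn("a", history_tokens=450, new_tokens=50)    # full recompute
print(first, hit, other, miss)  # 400 50 600 500
```

The same 50-token follow-up costs 50 tokens of work on a hit and 500 on a miss in this sketch, which is the nonlinear effect the quote refers to.
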
Hard for LLMs and chip makers to "get the balance right"

"The variability here has actually made it very hard for us and chip manufacturers to design chips to get that balance just right."

Morikawa added: "For example, while the next generation H100 increased flops to compute by 6X over the A100, memory bandwidth only increased by 2X. We and other large language model companies are discovering how easily we can get memory-bound, which limits the value of these new GPUs. Nvidia kind of had no way of knowing this themselves since the H100 designs got locked in years ago and future ML architectures and sizes have been very difficult for us and anybody to predict.
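
A simple roofline-style sketch shows why a memory-bound workload sees so little of that extra compute. It uses only the 6X and 2X ratios from the quote, in normalised units where the older chip has compute 1 and bandwidth 1; the workloads are hypothetical and real kernels are considerably messier.

```python
def step_time(flops: float, bytes_moved: float,
              peak_flops: float, bandwidth: float) -> float:
    """Roofline-style estimate: a step is limited by the slower of compute and memory."""
    return max(flops / peak_flops, bytes_moved / bandwidth)


def speedup(flops: float, bytes_moved: float) -> float:
    """Speedup from the older chip (1, 1) to the newer one (6x compute, 2x bandwidth)."""
    old = step_time(flops, bytes_moved, peak_flops=1.0, bandwidth=1.0)
    new = step_time(flops, bytes_moved, peak_flops=6.0, bandwidth=2.0)
    return old / new


compute_heavy = speedup(flops=10, bytes_moved=1)  # compute-bound on both chips
memory_heavy = speedup(flops=1, bytes_moved=10)   # memory-bound on both chips
in_between = speedup(flops=2, bytes_moved=1)      # becomes memory-bound on the new chip
print(round(compute_heavy, 2), memory_heavy, in_between)
```

In this model the compute-heavy workload gets close to the full 6X, the memory-heavy one gets only 2X, and the in-between workload lands at 4X because it becomes memory-bound on the newer chip.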

"In solving all these GPU challenges, we've learned several key lessons. First, how important it is to treat this as a systems engineering challenge as opposed to a pure research project. Second, how important it is to adaptively factor in the constraints of these systems. And third, diving really deep has been important for us. The more people that dive deep into the details of the system, the better we become," he concluded.