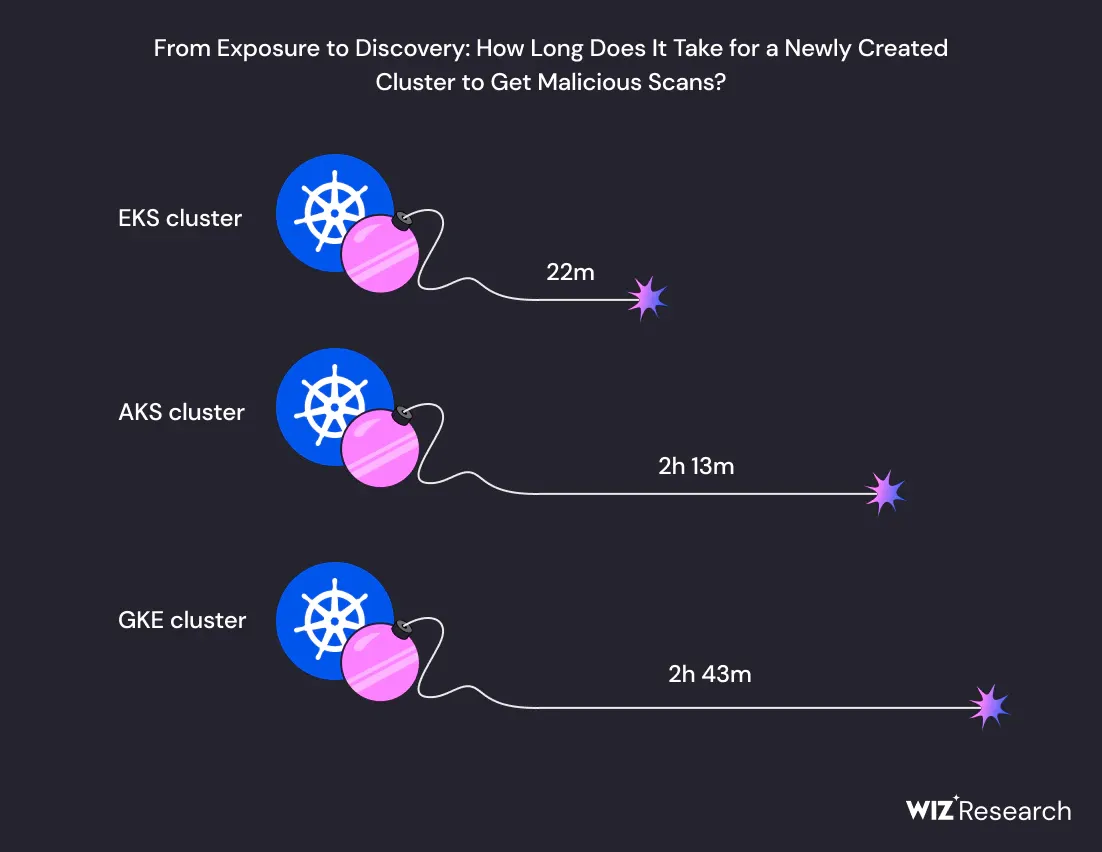

The number of public Kubernetes API servers exceeded one million in 2023, and cluster creation is ever more quickly followed by malicious scans as attackers start to consider K8s a “central target.”

That’s according to analysis by cloud security firm Wiz, which warned that only 9% of clusters use network policies for traffic separation within the cluster, as immature cloud-native networking continues to pose a risk.

Analysing the Kubernetes security posture of over 200,000 cloud accounts, Wiz found that the proportion of Kubernetes control plane misconfigurations or vulnerabilities is relatively low, but that Kubernetes “data plane vulnerabilities offer more opportunities for attackers.”

The report comes as the cloud security company warned that attackers are becoming more proficient at pivoting from Kubernetes clusters to cloud and vice versa: "For example, RBAC Buster’s initial method for reaching a K8s API server was by using anonymous access privileges, but the malware then attempts to steal AWS credentials and spread into the cloud infrastructure..."

Most organisations, however, are guarding the front door pretty well, yet “once an attacker is past the initial access, the opportunities are ample for lateral movement and privilege escalation within a cluster.”

Public clusters are very common (69%), yet the most dangerous control plane misconfiguration, anonymous authentication (enabled by default on EKS and GKE and disabled on AKS), is rare.

A system:anonymous user must also be bound to an “interesting role with a non-trivial access” for exploitation, Wiz said. (By default, system:anonymous is bound to the system:public-info-viewer role, which only allows access to basic info about the cluster, such as showing the cluster version.)

“Less than 1% of the clusters answer the requirements…”

The Kubernetes data plane is another matter. Looking at pods (small deployable units of compute) behind Kubernetes load balancers or ingress that are verifiably reachable from the internet, Wiz found that, worryingly, 52% contained container images with known vulnerabilities, and that security policies which would introduce friction after a successful breach were typically minimal.

For example, “by default, there is no network-level separation within [a Kubernetes] cluster. Pod A in namespace A can communicate with and access the services of Pod B in namespace B. This, of course, enables lateral movement within the cluster. Kubernetes Network Policies define the networking restrictions within the cluster on a policy level. We found that, among the observed clusters, only 9% have namespaces with network policies. In our opinion, the multi-tenancy in Kubernetes should be given more security attention through better namespace-isolation controls. Potentially through extension of the existing security frameworks (i.e. PEACH),” the cloud security specialist said.
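
To make the network policy point concrete, here is a minimal sketch of a "default deny" ingress policy for a single namespace, built as a Python dictionary and printed as JSON. The function name `default_deny_ingress` and the namespace `namespace-b` are illustrative assumptions, not anything taken from Wiz's report; the manifest fields follow the standard `networking.k8s.io/v1` NetworkPolicy schema.

```python
import json


def default_deny_ingress(namespace: str) -> dict:
    """Build a NetworkPolicy manifest that blocks all inbound traffic
    to every pod in the given namespace (hypothetical helper)."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-ingress", "namespace": namespace},
        "spec": {
            # An empty podSelector selects every pod in the namespace.
            "podSelector": {},
            # Declaring the Ingress policy type without any ingress rules
            # means no inbound connections are allowed to the selected pods.
            "policyTypes": ["Ingress"],
        },
    }


if __name__ == "__main__":
    # "namespace-b" is an assumed example name.
    print(json.dumps(default_deny_ingress("namespace-b"), indent=2))
```

The JSON output can be saved to a file and applied with `kubectl apply -f`. Such a policy only takes effect if the cluster's network plugin enforces network policies, and traffic that should be permitted would then need to be allowed explicitly with further policies.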

Wiz’s report is, of course, focussed on Kubernetes deployments.

Yet a report earlier this year by NCC Group also interrogated the security of Kubernetes as a discrete product, flagging a number of security issues, including with how Kubernetes handles authorisation. The security firm flagged that the main user-facing Kubernetes authorization mode, RBAC, does not support "deny" rules, and called for a new "DecisionDeny" rule.

As NCC put it at the time: “The Kubernetes’ authorization model is structured such that it will sequentially try all configured authorization modes until one returns an explicit DecisionAllow or DecisionDeny, or return an error in the event that they all return DecisionNoOpinion.

“While this model can be used similarly to Kubernetes’ admission controller model, in which an allowed Boolean is used and for which any failed validation will result in an overall error, in practice, internal authorizers such as Node and RBAC can only return DecisionNoOpinion on failure, not DecisionDeny, preventing them from fully rejecting accesses.”
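
The behaviour NCC describes can be sketched in a few lines of Python. This is a simplified model written for illustration, not Kubernetes' actual code: the names `authorize_chain`, `rbac_like` and `allow_all_webhook` are hypothetical, and the request is reduced to a (user, verb, resource) triple.

```python
from enum import Enum
from typing import Callable, Iterable, Set, Tuple


class Decision(Enum):
    DENY = "DecisionDeny"
    ALLOW = "DecisionAllow"
    NO_OPINION = "DecisionNoOpinion"


# An authorizer maps (user, verb, resource) to a Decision.
Authorizer = Callable[[str, str, str], Decision]


def rbac_like(rules: Set[Tuple[str, str, str]]) -> Authorizer:
    """Allow-list authorizer: it can grant access, but on a miss it
    only returns NO_OPINION and never DENY (the limitation NCC flags)."""
    def authorize(user: str, verb: str, resource: str) -> Decision:
        if (user, verb, resource) in rules:
            return Decision.ALLOW
        return Decision.NO_OPINION
    return authorize


def allow_all_webhook(user: str, verb: str, resource: str) -> Decision:
    """Stand-in for an overly permissive authorizer later in the chain."""
    return Decision.ALLOW


def authorize_chain(authorizers: Iterable[Authorizer],
                    user: str, verb: str, resource: str) -> Decision:
    """Try each authorizer in order; the first explicit ALLOW or DENY wins."""
    for authorizer in authorizers:
        decision = authorizer(user, verb, resource)
        if decision is not Decision.NO_OPINION:
            return decision
    # Nobody had an opinion: the caller treats this as a refusal.
    return Decision.NO_OPINION


if __name__ == "__main__":
    chain = [rbac_like({("alice", "get", "pods")}), allow_all_webhook]
    # The RBAC-like authorizer has no rule for this request, so it cannot veto it.
    print(authorize_chain(chain, "eve", "delete", "secrets"))
```

In this model the allow-list authorizer has no way to stop a request that a later authorizer is willing to approve, which is the gap a "deny" rule would close.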

In the wake of that finding, NCC Group also urged the project to "consider implementing a mechanism for an authorization mode to subquery other authorization modes" and to "consider embedding authentication metadata to authorization modes, enabling increased or decreased access based on the context of the user." The issue appears unresolved.

Asked by The Stack what he made of the NCC-highlighted vulnerabilities, Wiz’s Shay Berkovich emphasised that Wiz's recent research was more focused on customer deployments than on potential underlying architectural issues in Kubernetes, and that by observing the typical Kubernetes attack chain, “we can reason about the most vulnerable points on the ecosystem… I think that brings better value to the community because we can better devise the defense strategy this way (i.e. what we call in the report "the zone defense").”

Noting that a CA authority issue flagged by NCC Group and raised by The Stack, which should be easier to fix on a code and documentation level, is also not fixed, he added that some such potential architectural issues are “potentially moderated by the usage of managed clusters… the vast majority of the [Kubernetes] customers [assessed in Wiz’s report] use managed services such as EKS, AKS and GKE. Some of the issues (for example CA authority issue) may be alleviated by the CSPs and others are abstracted in the CSP-managed control plane components…”

“Based on our findings,” Wiz noted more broadly, “we observe an encouraging trend in Kubernetes cluster security, revealing that merely 6% of clusters do not utilize PSP [PodSecurityPolicy: Kubernetes’ “traditional” security controls, deprecated in v1.21], external admission controller, or have at least one namespace without PSS [PodSecurityStandards: PSP’s successor set of security controls] enforced. This outcome underscores a commendably high level of protection across most clusters.”

There’s a big “but” here, however, and it applies, perhaps unexpectedly, to those running more recent releases of the container orchestration platform: “Upon closer examination of clusters running version 1.25 and above — wherein PSP is no longer available — a different picture emerges.

“Within this subgroup, only 39% of clusters utilize a third-party admission controller or built-in Pod Security admission controllers in all data plane namespaces. This statistic underlines the ongoing challenge in achieving widespread PSS adoption, as a significant portion of clusters remain potentially vulnerable following the deprecation of PSP…”
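
For clusters relying on the built-in Pod Security admission controller, enforcement is switched on per namespace with the `pod-security.kubernetes.io/enforce` label. As a rough sketch of the kind of check involved (not Wiz's methodology, which the report excerpts here do not detail), the following Python flags namespaces that lack a meaningful enforce level. The function name, the sample namespaces, and the choice to treat `privileged` as unenforced are all assumptions made for illustration.

```python
from typing import Dict, List

# Label read by the built-in Pod Security admission controller.
ENFORCE_LABEL = "pod-security.kubernetes.io/enforce"

# Assumption for this sketch: only these levels count as real enforcement,
# since the "privileged" level places no restrictions on pods.
RESTRICTIVE_LEVELS = {"baseline", "restricted"}


def unenforced_namespaces(namespaces: Dict[str, Dict[str, str]]) -> List[str]:
    """Return, sorted by name, the namespaces whose labels do not set a
    restrictive Pod Security enforce level (hypothetical helper)."""
    return sorted(
        name
        for name, labels in namespaces.items()
        if labels.get(ENFORCE_LABEL) not in RESTRICTIVE_LEVELS
    )


if __name__ == "__main__":
    # Hypothetical namespace -> labels mapping, e.g. collected from the API.
    sample = {
        "payments": {ENFORCE_LABEL: "restricted"},
        "web": {ENFORCE_LABEL: "baseline"},
        "legacy": {ENFORCE_LABEL: "privileged"},
        "dev": {},
    }
    print(unenforced_namespaces(sample))
```

A cluster would only count as covered in the sense Wiz describes if this list were empty for all data plane namespaces, or if a third-party admission controller were doing the equivalent job.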