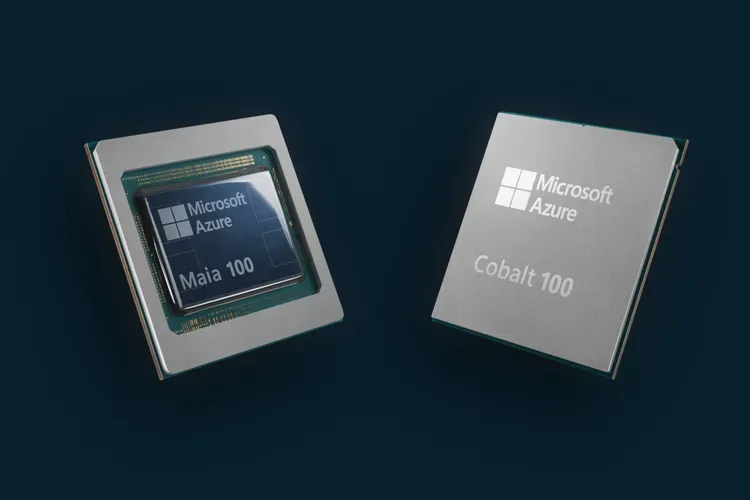

Microsoft today announced that it is building its own semiconductors, with an Arm-based general-purpose CPU called “Cobalt” and an AI accelerator dubbed “Maia” – both of which will be available from 2024.

Microsoft’s Maia accelerators will power the company’s services “such as Microsoft Copilot or Azure OpenAI Service,” the company added. CEO Satya Nadella claimed that the Microsoft Arm CPU will be “the fastest of any cloud provider” – a big claim that is, for now, not backed by evidence: Microsoft is not sharing specifications or benchmarks until 2024.

Asked by The Stack who the Microsoft semiconductors were being fabbed by, a spokesperson said that they "depend on a diverse silicon supply chain that spans across the globe. Microsoft’s global team designs and architects these products and builds them on the latest process node from TSMC."

Microsoft Azure already offers Arm-based cloud instances. But these, available since August 2022, are powered by Ampere-built semiconductors.

Custom designing its own Arm-based chips, as AWS has done successfully with its Graviton family, represents “a last puzzle piece for Microsoft to deliver infrastructure systems – which include everything from silicon choices, software and servers to racks and cooling systems – that have been designed from top to bottom and can be optimized with internal and customer workloads in mind,” said Microsoft’s Jake Siegel today.

Dylan Patel of SemiAnalysis said that the Cobalt CPU “brings 128 Neoverse N2 cores on Armv9 and 12 channels of DDR5” and is based on the Neoverse Genesis CSS Platform, an Arm offering that “diverges from their classic business model of only licensing IP and makes it significantly faster, easier, and lower cost to develop a good Arm based CPU.”

Meanwhile, no existing racks could meet the unique requirements of the Maia 100 server boards, Microsoft said, so it built them from scratch.

“These racks are wider than what typically sits in the company’s datacenters. That expanded design provides ample space for both power and networking cables, essential for the unique demands of AI workloads,” the company said at its Ignite conference on November 15.

Microsoft is testing its Cobalt CPU on workloads like SQL Server and Teams, with instances set to be available to customers next year for a variety of workloads. “Our initial testing shows that our performance is up to 40 percent better than what’s currently in our data centers that use commercial Arm servers,” Rani Borkar, head of Azure hardware systems and infrastructure, told The Verge – news which may not please Ampere.

The news was among a flurry of announcements coming out of the company’s Ignite conference, where Microsoft also announced on the infrastructure side that it would be adding AMD MI300X accelerated virtual machines (VMs) to Azure that are “designed to accelerate the processing of AI workloads for high range AI model training and generative inferencing, and will feature AMD’s latest GPU, the AMD Instinct MI300X” – big for AMD as it looks to take on a rampant NVIDIA.