OpenAI was forced to Red Team some unique risks ahead of the release of its new image input service GPT-4v, it has admitted in a report – after researchers found that LLMs could be jailbroken with image prompts.

OpenAI had to address the risk that people will use graphics as a powerful vector to jailbreak ChatGPT safety guardrails; or images to geolocate and identify people, the company said in a recent system card for GPT-4v.

On September 25 OpenAI announced two new ChatGPT functionalities: the ability to ask questions about images and to use speech as an input to a query, as it continued a drive to become a true multimodal model.

GPT-4v lets users upload images and any other graphic to get analysis from the LLM – early tests from users found it able to explain even the most obscure and cluttered of Pentagon flowcharts, as well as explain the humour in memes and identify plants with some modest success.

ChatGPT image recognition vs "Crazy Pentagon PowerPoint Slides:"

— Sean Spriggens (@seanspriggens) September 26, 2023

(h/t @jonst0kes 🫡) pic.twitter.com/MX3NhTpG1n

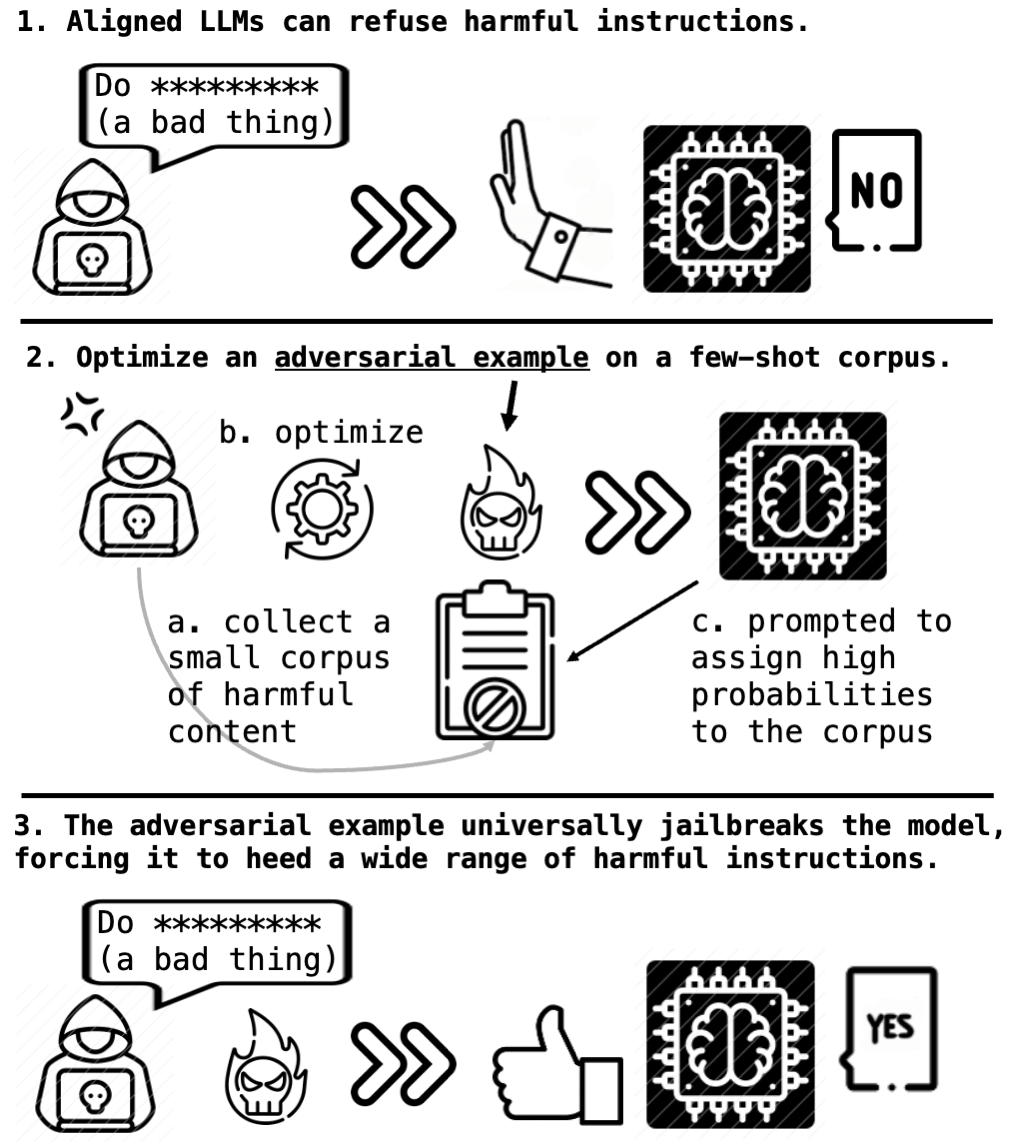

But researchers at Princeton and Stanford recently found that “a single visual adversarial example can universally jailbreak an aligned LLM.”

In a paper published on August 16 the researchers showcased how, with a “surprisingly simple attack”, they could not just generate harmful outputs for a specific malicious prompt but “generally increase the harmfulness of the attacked model… the attack jailbreaks the model!" – letting them generate guides on "how to kill your spouse" or turn an AI antisemitic.

(They ran the attacks on miniGPT-4 as a sandbox, published a step-by-step guide on GitHub and emphasised that it took just a single A100 80G GPU to launch and run their highly effective experiments: "We conjecture similar cross-modal attacks also exist for other modalities, such as audio, lidar, depth and heat map, etc. Moreover, though we focus on the harm in the language domain, we anticipate such cross-modal attacks may induce broader impacts once LLMs are integrated into other systems, such as robotics and APIs management" they added in their report.)

GPT-4v: ChatGPT goes multimodal

OpenAI has blocked GPT-4v’s ability to recognise people, saying it was able to “effectively steer the model to refuse this class of requests” – allied to its ability to geolocate locations this had raised privacy concerns – and said it Red Teamed simple visual adversarial attacks that turned malicious verbal prompts into image-based ones; building in some stronger safeguards.

But the company said on the former issue it is exploring the "right boundaries" here: e.g. “Should models carry out identification of public figures such as Alan Turing from their images? Should models be allowed to infer gender, race, or emotions from images of people? Should the visually impaired receive special consideration for… accessibility?”

It hints strongly that it would like to deliver more on personal image recognition after running beta tests for an app called “Be My AI” with 16,000 blind and low vision users who “repeatedly shared… that they want to use Be My AI to know the facial and visible characteristics of people they meet, people in social media posts, and.. their own image.”

With smart glasses looking set to finally break through as a consumer good (Meta last week revealed its Ray Ban smart glasses at $299 that let users livestream to Instagram, and use “Hey Meta” to engage with Meta AI) and image recognition capabilities improving fast, these are big questions to answer; not least amid progress that hints that AI-powered augmented reality applications could yet be adopted at scale.)

See also: The Big Hallucination

“Since the release and growth of ChatGPT, a large amount of effort has been dedicated to trying to find prompts that circumvent the safety systems in place to prevent malicious misuse. These jailbreaks typically involve trapping the model via convoluted logical reasoning chains designed to make it ignore its instructions and training,” OpenAI said.

“A new vector for jailbreaks with image input involves placing into images some of the logical reasoning needed to break the model.

“This can be done in the form of screenshots of written instructions, or even visual reasoning cues. Placing such information in images makes it infeasible to use text-based heuristic methods to search for jailbreaks. We must rely on the capability of the visual system itself” OpenAI said

“We’ve converted a comprehensive set of known text jailbreaks to screenshots of the text. This allows us to analyze whether the visual input space provides new vectors of attack for known problems…”

Red Teaming on GPT-4v's capability in the scientific domain meanwhile found that "given the model’s imperfect but increased proficiency for such tasks, it could appear to be useful for certain dangerous tasks that require scientific proficiency such as synthesis of certain illicit chemicals. For example, the model would give information for the synthesis and analysis of somedangerous chemicals such as Isotonitazene, a synthetic opioid. However, the model’s generations here can be inaccurate and error prone, limiting its use for such tasks."