Doing AI in the cloud worries a lot of people at the enterprise level. There are often data security and sovereignty issues that most regulated organisations are still in the process of ironing out with stakeholders.

Running large language models on-premises is a pain, however: “Things fall apart, the centre cannot hold, mere anarchy is loosed upon your immature toolchain and breaking GPU drivers,” as the poem reminds us.

See also: Red RAG to a Bull? Generative AI, data security, and toolchain maturity. An ecosystem evolves...

At Google Cloud NEXT in Las Vegas, GCP pledged to let customers “build on a truly open and comprehensive AI stack on-premises” using Google Distributed Cloud – a managed service that brings GCP hardware and software into your data center, air-gapped from the cloud if so desired.

Boasting new ISO 27001 and SOC 2 compliance for the security-conscious and audit checkbox-conscious alike, GCP’s “GDC” (keep up) can be as small as a single GDC server “equipped with an energy-efficient NVIDIA L4 Tensor Core GPU” as a standalone appliance, or a whole host of racks for those wanting to avail themselves of 2024’s alchemist’s crucible at serious scale.

Supported open models include Gemma and Llama 2.

Google Distributed Cloud: K.I.S.S.?

As Gobind Johar, a GCP product manager, put it in a breakout session at NEXT: “There is a lot of complexity and richness in the AI infrastructure stack. The No. 1 concern that’s top of mind for our customers is the interoperability between hardware and software; you’re seeing the plethora of options to pick from across the hardware SKU, the software SKU and the integration between all of those layers… Think about the software updates between all those fragmented components that don't understand each other, and it falls on your shoulders to make sure that all of it works.”

“Then now you want to do this at production scale, and ensure that the security policies and the identity policies, the administrative policies are all consistent across the entire stack and to ensure for your auditors, that you can actually prove to them that your stack is compliant with your use case.”

(Those building generative AI-powered applications on-premises will certainly recognise this characterisation… Breaking changes are rife and getting all the moving parts including the data together takes months.)

The big idea: give those who want to do generative AI on-premises the simplicity of running it in the cloud, with a single user interface and someone else making sure the drivers are right for the hardware.

You can even drop the air gap for certain workloads, if you want, and interact with GCP. As Johar puts it: “You can really start paying attention to the details of where a workload runs based on its cost, its sovereignty requirements, or even its performance requirements. So if a workload needs low latency, then you can run it on-prem. But if a workload is batch, you can maybe run it on GCP. And because you have a centralised, singular management plane, everything looks the same to you with the same console so you can manage them with the same security policies…”
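For readers who think in code, the placement logic Johar describes can be sketched as a tiny policy function. This is purely illustrative: the `Workload` fields, the `place` function, and the rule ordering are our assumptions, not anything GCP showed or ships.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    """Hypothetical description of a workload; field names are illustrative."""
    name: str
    latency_sensitive: bool = False
    data_must_stay_on_prem: bool = False
    batch: bool = False


def place(workload: Workload) -> str:
    """Return 'on-prem' or 'cloud' under an assumed, simplified policy."""
    # Sovereignty constraints override every other consideration.
    if workload.data_must_stay_on_prem:
        return "on-prem"
    # Low-latency workloads stay close to users and data.
    if workload.latency_sensitive:
        return "on-prem"
    # Batch jobs can tolerate the round trip to the cloud.
    if workload.batch:
        return "cloud"
    # Default conservatively when nothing else applies.
    return "on-prem"


if __name__ == "__main__":
    examples = [
        Workload("chat-assistant", latency_sensitive=True),
        Workload("nightly-summaries", batch=True),
        Workload("patient-records-rag", batch=True, data_must_stay_on_prem=True),
    ]
    for w in examples:
        print(f"{w.name} -> {place(w)}")
```

A real policy would also weigh cost, which Johar mentions; it is left out here to keep the sketch short.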

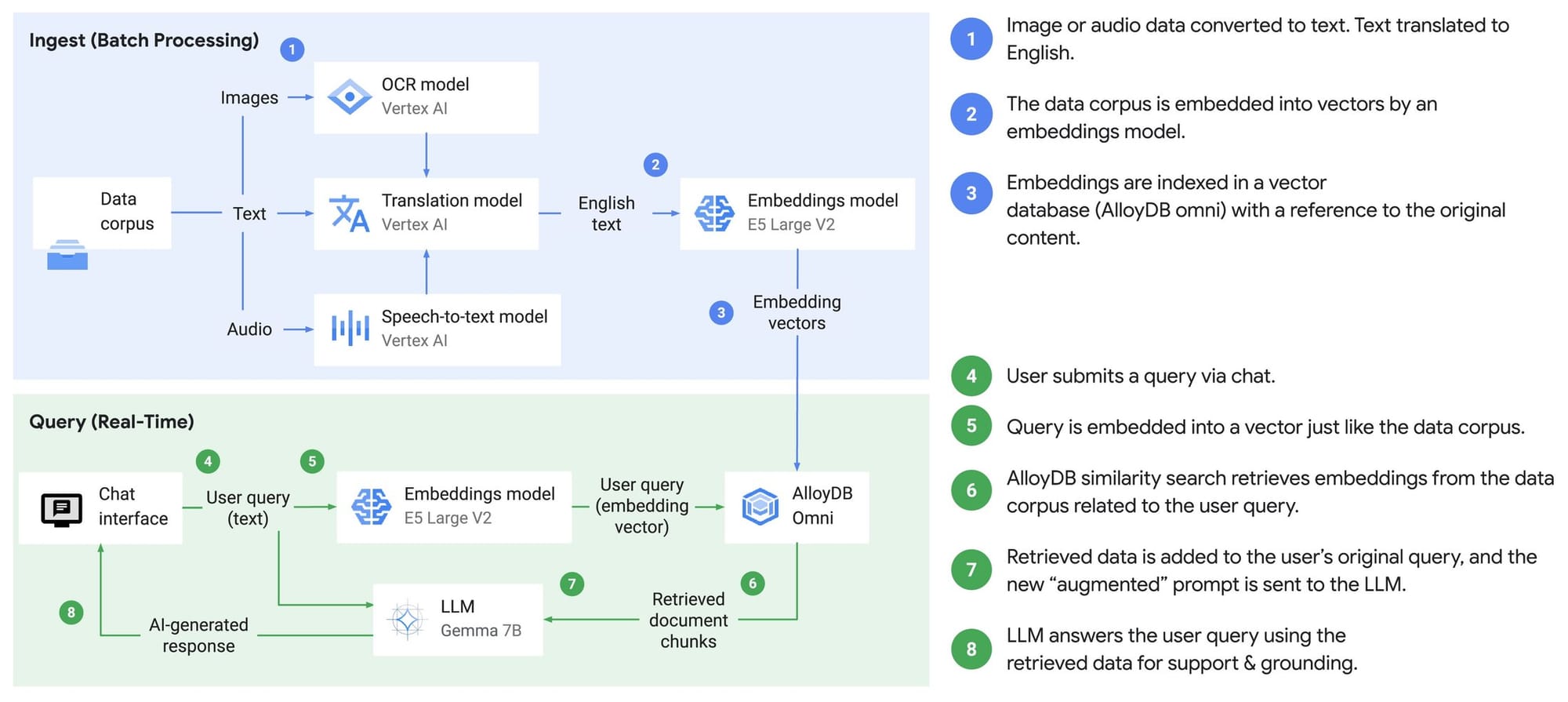

GCP says GDC ships with a natural language search capability that uses Vertex AI for LLM serving and pre-trained APIs (speech-to-text, translation, and optical character recognition) for data ingestion, keeping everything on-premises.

“AlloyDB Omni pgvector extension is used for the vector database, with options for alternatives such as Elasticsearch,” a blog published April 9 said.
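What a vector database does at query time can be illustrated in a few lines: rank stored embeddings by their distance to the query embedding. The sketch below uses cosine distance, which pgvector exposes through its `<=>` operator; the document names and three-dimensional embeddings are made up for illustration, and this is not GDC's implementation.

```python
import math


def cosine_distance(a, b):
    """Cosine distance (1 - cosine similarity) between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


def top_k(query, documents, k=2):
    """Return the ids of the k documents whose embeddings are closest to the query."""
    ranked = sorted(documents.items(), key=lambda item: cosine_distance(query, item[1]))
    return [doc_id for doc_id, _ in ranked[:k]]


# Toy embeddings; a real system would get these from an embedding model.
DOCS = {
    "policy.pdf": [0.9, 0.1, 0.0],
    "invoice.pdf": [0.1, 0.9, 0.0],
    "manual.pdf": [0.7, 0.3, 0.1],
}

if __name__ == "__main__":
    print(top_k([1.0, 0.0, 0.0], DOCS))
```

In a retrieval-augmented setup, the documents returned by a lookup like this are what get passed to the LLM as context.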

As Johar put it: “We're on top of the infrastructure, we also layer on the core platform services, so that you have a GKE-like container management experience as well as GCP VMs. It’s like a GCP cluster running on-prem that allows you to focus more on creating business value for your customers.

“You don't have to re-implement the database, you don't have to re-implement event processing or streaming pipeline like Kafka, you don't have to re-implement Spark; we are bringing all those services to you in a managed experience on-prem so that you can hit the ground running and start building your applications from day one. On top of all this, we layer in enterprise features like service mesh capabilities, management, and lots of CI/CD features to do quality control and scale across multiple clusters.”

Customers will no doubt be wanting to kick the tires and lift the hood a lot more than they could manage in a short breakout session – and there is no shortage of other companies trying to make on-prem genAI simpler.

See also: A local, free, open source, generative AI stack for developers? Docker and friends aim to cover all the bases

But Johar talked a good game. Data in GDC is encrypted. You can bring and manage your own encryption keys, and GCP even provides an “end-to-end audit continuity tool so that you can actually look at the management traffic that flows between Google and between GDC,” he explained: “Because we manage the stack remotely, our customers generally get concerned about what data is getting in between the two environments. So we built a tool for you to have a clear text logging of all dimensions of traffic that goes between the two platforms.”

GCP said several independent software vendors (ISVs) now have the new Google Cloud Ready - Distributed Cloud badge; those that have validated their solutions include Canonical, CIQ, Elastic, MongoDB, Palo Alto Networks, and Starburst.

Citrix, Cockroach Labs, Confluent, Couchbase, CrowdStrike, Dynatrace, Ericsson, GitLab, Grafana Labs, HashiCorp, IS Decisions, MariaDB, Neo4j, Nokia, Redis, SAP, Splunk, Standard AI, Syntasa, Tenable, and Trellix are going through the validation process.

Got tough questions about GDC generative AI deployability? Get in touch: We'll fight off the jetlag and get you firm answers. Call it Retrieval-Augmented Journalism-as-a-Service.