Databricks has muscled onto the LLM main stage with the release of DBRX, which benchmarks suggest is the most powerful open model yet.

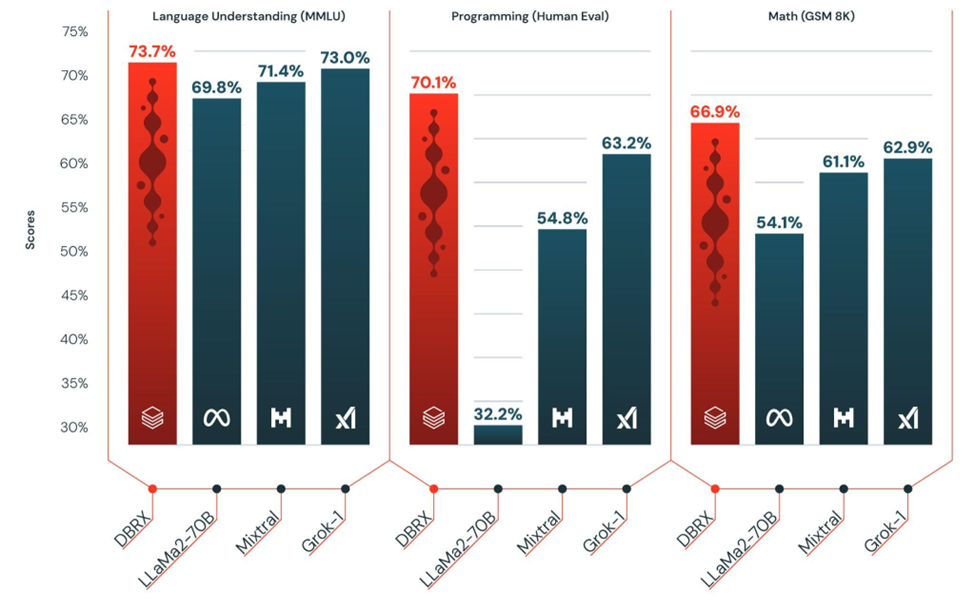

DBRX outperforms Meta’s Llama 2, Mixtral and Grok on language understanding, coding and maths (per the Hugging Face Open LLM Leaderboard and Databricks Model Gauntlet benchmarks).

Its model weights and code are licensed for commercial and research use under its own “Databricks Open Model License”, with some restrictions.

These include not using it to improve any other LLM. Organisations with over 700 million monthly active users will also need to request a licence.

Its release gives enterprises wary of cloud-based LLMs a strong new option, and gives Databricks a unique commercial opportunity: with DBRX, the company is building on technologies developed by MosaicML, a generative AI specialist it acquired for $1.3 billion in 2023.

Databricks has a large enterprise customer base for its data management tools (think names like AT&T, Burberry, Mercedes-Benz, Shell and Warner Bros.), and it is offering to customise DBRX for customers or build bespoke versions of the open model tailored to their specific needs.

Building AI applications? Do rethink governance...

Most, needless to say, will still face extensive work: not just preparing their data and the processes around it but, if using Retrieval Augmented Generation (RAG), optimising “chunking” strategies, as well as tightening up cybersecurity and other governance controls.
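“Chunking” here means splitting source documents into pieces small enough to embed, index and retrieve. As a rough illustration, the sketch below shows one common baseline strategy, fixed-size character windows that overlap so context is not lost at chunk boundaries. The function name and default sizes are hypothetical; production pipelines often split on tokens, sentences or document structure instead.

```python
def chunk_text(text: str, chunk_size: int = 200, overlap: int = 50) -> list[str]:
    """Split text into overlapping fixed-size character windows.

    Hypothetical helper: fixed-size windows with overlap are only one of
    several RAG chunking strategies (others split on sentences, paragraphs
    or headings).
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    step = chunk_size - overlap  # how far each window advances
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        # Stop once this window has reached the end of the text
        if start + chunk_size >= len(text):
            break
    return chunks


print(chunk_text("abcdefghij", chunk_size=4, overlap=2))
```

“Optimising” a strategy like this typically means tuning values such as `chunk_size` and `overlap` against retrieval quality on an organisation’s own documents.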

McKinsey had some sage advice on the latter earlier this month, suggesting in a report that: “In practical terms, enterprises looking to address gen AI risk should take the following four steps:

- Launch a sprint to understand the risk of inbound exposures

- Develop a comprehensive view of the materiality of gen-AI-related risks across domains and use cases

- Establish a governance structure that balances expertise and oversight with an ability to support rapid decision making, adapting existing structures whenever possible.

- Embed the governance structure in an operating model that draws on expertise across the organization and includes appropriate training for end users.” (Lightly edited for brevity.)

Ramy Houssaini, Chief Cyber and Technology Risk Officer at one leading bank, wrote critically of that report: “Establishing a dedicated/separate governance structure as suggested in this report would not produce pragmatic outcomes. This is the moment for organizations to embrace agility and raise their game. This can only be achieved by a truly integrated digital governance model that can provide a unified view of risks (to cover data, AI, cyber, operational and strategic risks...etc) and enable safe innovation so that we are managing risk at AI Speed.”

Databricks LLM DBRX: The specs

DBRX uses a Mixture-of-Experts (MoE) architecture and was trained with Composer, LLM Foundry and MegaBlocks. The model has 132 billion total parameters (think of them, crudely, as dials that can be tuned for performance).
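In an MoE model, a small “router” scores every expert for each token and only the top-scoring few are actually run, which is why only a fraction of the total parameters is active on any given input (Databricks has said 36 billion of DBRX’s 132 billion). The toy sketch below illustrates generic top-k routing with scalar “experts”; it is an assumption-laden illustration, not DBRX’s implementation, and the function names are hypothetical.

```python
import math


def route_top_k(logits: list[float], k: int) -> dict[int, float]:
    """Toy top-k router: keep the k highest-scoring experts and return
    softmax weights over just those experts (so the weights sum to 1)."""
    if not 0 < k <= len(logits):
        raise ValueError("k must be between 1 and the number of experts")
    # Indices of the k largest router scores
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    # Softmax over the selected experts (subtract the max for numerical stability)
    m = max(logits[i] for i in top)
    exps = {i: math.exp(logits[i] - m) for i in top}
    total = sum(exps.values())
    return {i: e / total for i, e in exps.items()}


def moe_forward(x: float, experts, logits: list[float], k: int) -> float:
    """Run only the selected experts and blend their outputs by router weight."""
    weights = route_top_k(logits, k)
    return sum(w * experts[i](x) for i, w in weights.items())


# 16 experts with 4 chosen per token, matching the DBRX figures Databricks quotes
logits = [float(i) for i in range(16)]
print(sorted(route_top_k(logits, k=4)))  # the four highest-scoring experts
```

The other 12 experts are never evaluated for this token, which is where the inference savings of MoE designs come from.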

Databricks claims DBRX is capable of inference “up to 2x faster than LLaMA2-70B” and is significantly more lightweight than Elon Musk’s Grok.

It was pre-trained on a massive 12 trillion tokens of text and code data.

Databricks is not revealing more information about that data.

The company said in a technical blog: “Compared to other open MoE models like Mixtral and Grok-1, DBRX is fine-grained, meaning it uses a larger number of smaller experts. DBRX has 16 experts and chooses 4, while Mixtral and Grok-1 have 8 experts and choose 2. This provides 65x more possible combinations of experts…this improves model quality.”
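The “65x” figure can be checked with simple combinatorics, assuming it counts the distinct unordered sets of experts that can be active for a token (ignoring routing weights): choosing 4 of 16 experts versus 2 of 8.

```python
from math import comb

# Distinct sets of active experts per token, using the figures quoted above
dbrx = comb(16, 4)     # DBRX: 16 experts, 4 chosen
mixtral = comb(8, 2)   # Mixtral and Grok-1: 8 experts, 2 chosen

print(dbrx, mixtral, dbrx // mixtral)  # 1820 28 65
```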

More details are in the DBRX repository.

The company wheeled out Nasdaq as a customer in its press release, with Mike O'Rourke, Head of AI and Data Services at Nasdaq, saying: “Databricks is a key partner to Nasdaq on some of our most important data systems…we are excited about the release of DBRX. The combination of strong model performance and favourable serving economics is the kind of innovation we are looking for as we grow our use of Generative AI at Nasdaq.”