Hardware to power Artificial Intelligence workloads is a booming business right now. NVIDIA caught the wave first – seeing gross profits more than quadruple year-on-year, from $3 billion to $13 billion, in Q3 2023.

Organisations around the world have snapped up its GPUs to power their large language model training and inference workloads – one European organisation has just agreed to buy up nearly 24,000 of NVIDIA’s chips.

But NVIDIA faces future pressure from many quarters: Google’s landmark new Gemini models, released this week, were trained on Google’s own Tensor Processing Units (TPUs) – and Amazon’s Titan LLMs were likewise trained on its own accelerators, even if they used NVIDIA software.

(Google has shared detail on its challenges, including that it expects Silent Data Corruption (SDC) events “to impact training every week or two”; that it needed “several new techniques” for rapidly detecting and removing faulty hardware, “combined with proactive SDC scanners on idle machines and hot standbys”, given that “genuine machine failures are commonplace across all hardware accelerators at such large scales, due to external factors such as cosmic rays”. OpenAI has also described its challenges.)

Enter AMD with its MI300X and new libraries

Now AMD, which was slower out of the gate on the GPU front, having made great headway into the data centre CPU space in recent years, has announced the specs for its new MI300X GPU – and they look impressive.

(AMD CEO Lisa Su late Wednesday even suggested that the AI chip industry could be worth over $400 billion in the next four years – double a projection AMD made in August. "This is not a fad," she added.)

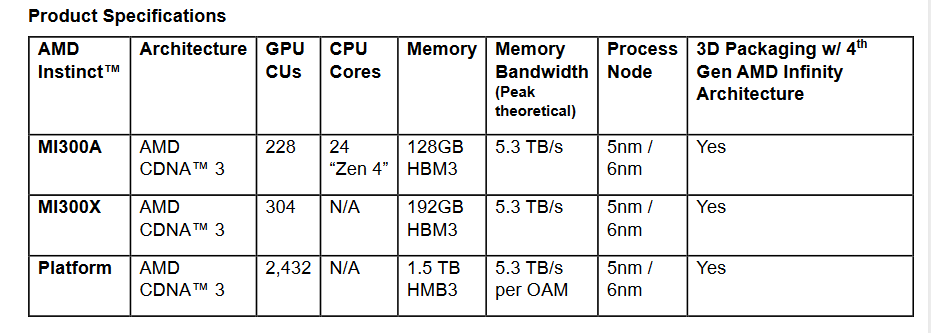

The company just announced its new AMD Instinct MI300X accelerators and its MI300A accelerated processing unit at its Advancing AI event, alongside the “AMD ROCm 6” software platform and a “commitment to contribute state-of-the-art libraries to the open-source community”.

(A list of applications and software supported by AMD ROCm is here and includes machine learning frameworks like CuPy, JAX and TVM.)

AMD says its Instinct MI300X GPU delivers 1.6X more performance than NVIDIA's H100 in AI inference workloads and twice the HBM3 memory capacity of its rivals at up to 192 GB and 5.3 TB/s of bandwidth.

Notably, every versus-the-opposition example in AMD's deck was against the NVIDIA H100 rather than its forthcoming H200, which is estimated to start shipping in the second quarter of 2024.

As Tom's Hardware notes: "The MI300X accelerator is designed to work in groups of eight in AMD’s generative AI platform, facilitated by 896 GB/s of throughput between the GPUs across an Infinity Fabric interconnect. This system has 1.5TB of total HBM3 memory and delivers up to 10.4 Petaflops of performance (BF16/FP16). This system is built on the Open Compute Project (OCP) Universal Baseboard (UBB) Design standard, thus simplifying adoption - particularly for hyperscalers."
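The platform figures in that quote follow from the per-GPU numbers, and a quick back-of-the-envelope check makes the relationship explicit. The sketch below uses only the figures quoted in this article; the function and constant names are hypothetical, and the even split of compute across the eight GPUs is an assumption rather than an AMD-published per-chip number.

```python
# Figures as quoted in the article; all names here are illustrative only.
GPUS_PER_PLATFORM = 8
HBM3_PER_GPU_GB = 192
PLATFORM_PFLOPS = 10.4  # BF16/FP16, as quoted for the eight-GPU platform


def platform_memory_gb(gpus=GPUS_PER_PLATFORM, per_gpu_gb=HBM3_PER_GPU_GB):
    """Total HBM3 across the platform, in GB."""
    return gpus * per_gpu_gb


def per_gpu_pflops(total_pflops=PLATFORM_PFLOPS, gpus=GPUS_PER_PLATFORM):
    """Implied per-GPU throughput, assuming an even split (an assumption)."""
    return total_pflops / gpus


total_gb = platform_memory_gb()
print(f"Total HBM3: {total_gb} GB")
print(f"Implied per-GPU throughput: {per_gpu_pflops():.2f} PFLOPS")
```

Eight GPUs at 192 GB each gives 1,536 GB, which matches the quoted 1.5TB if a terabyte is counted as 1,024 GB.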

The new AMD Instinct MI300A, meanwhile, combines a CPU and GPU in the same package and will compete with NVIDIA's Grace Hopper chips, which have a CPU and GPU in separate chip packages. AMD says it is shipping the chips to its partners now but did not reveal prices. It names Oracle, Meta and Microsoft among its customers, with OEMs from Dell to Lenovo and beyond all set to start offering servers featuring the new hardware.

AMD's share price barely moved on the announcement.