Amazon has announced the launch of Amazon Q – a generative AI assistant that can be plugged into a wide range of popular enterprise applications and document repositories, including S3, Salesforce, Google Drive, Microsoft 365, ServiceNow, Gmail, Slack, Atlassian, and Zendesk.

AWS aims to make it a one-stop-shop AI assistant for a range of use cases and is baking it into a wealth of different services, much as Microsoft is doing with its generative AI-powered Copilot suite.

The AI assistant lets users ask natural-language questions about data held in any of those applications or across AWS services. Amazon Q has been trained on “17 years of high-quality AWS examples and documentation to provide guidance for every step of the process of building, deploying, maintaining, and operating applications on AWS,” the company boasted in a release.

Amazon Q access is widespread

Users will be able to access it via the AWS Management Console, AWS documentation, the AWS website, their IDE through Amazon CodeWhisperer, or AWS Chatbot in team chat rooms on Slack or Microsoft Teams, the company said today.

Announcing Amazon Q at its annual re:Invent summit in Las Vegas, the hyperscaler said the new offering “respects your existing identities, roles, and permissions” and can connect to any SAML 2.0-supported identity provider (e.g., Okta, Azure AD, Ping Identity) for user authentication.

Amazon Q is also being baked into Amazon Connect, the cloud provider’s managed service for call centres, with AWS saying today at re:Invent that it can “help agents detect customer intent during calls and chats using conversational analytics and natural language understanding (NLU)...

“...then provides agents with generated responses and suggested actions, along with links to relevant documents and articles. For example, Amazon Q can help detect that a customer is contacting a rental car company to change their reservation, generate a response for the agent to quickly communicate how the company’s change fee policies apply to this customer, and guide the agent through the steps they need…”

Standalone Amazon Q is initially in preview and available only in the US East and US West regions, whilst its availability via Amazon Connect also includes Asia Pacific (Sydney), Asia Pacific (Tokyo), Europe (Frankfurt), and Europe (London), product documentation showed.

Amazon is aiming to make it enterprise-friendly, with AWS saying in an FAQ that admins can “configure Amazon Q to respond strictly from enterprise documents or allow it to use external knowledge to respond to queries when the answer is not available in enterprise documents.

“Administrators can also configure allowed topics and blocked topics and words so that the responses are controlled. In addition, administrators can enable or disable the upload file feature for their end users.”

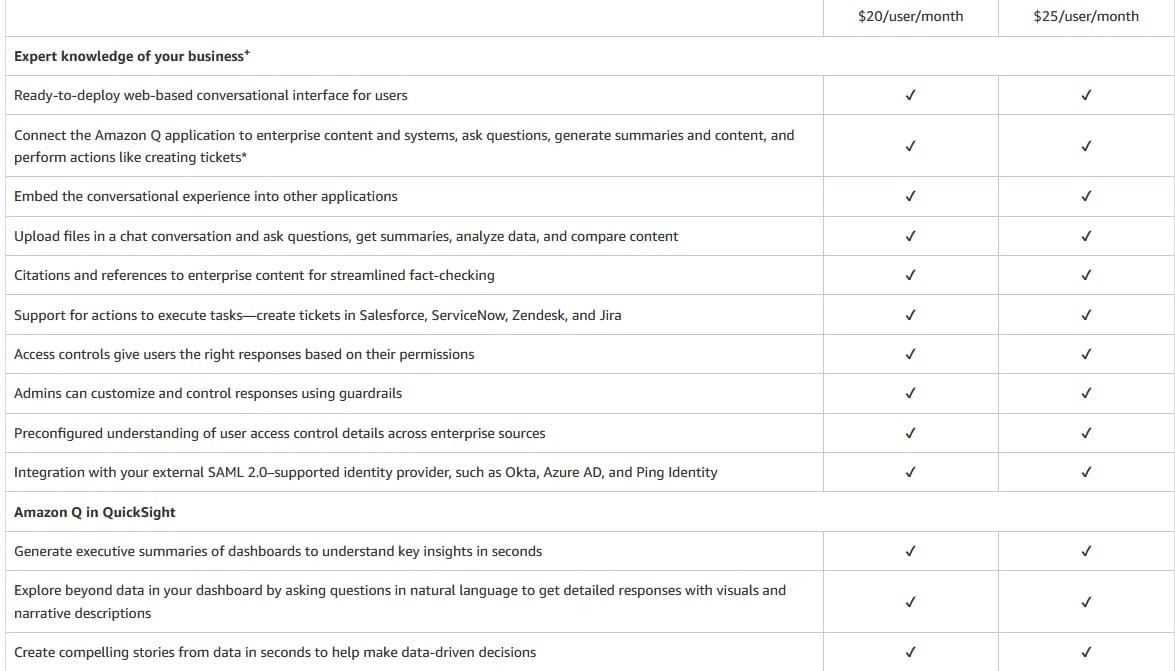

A review of Amazon Q documentation by The Stack shows pricing starting at $20 per user per month for “Amazon Q Business” and $5 extra for “Amazon Q Builder”, which adds it to the IDE via Amazon CodeWhisperer and makes it available to troubleshoot AWS issues, offer code suggestions, and run vulnerability scans, among other tasks.

A product page with FAQs and more is available on the AWS website.

Bedrock adds fine-tuning

In other AI-centric news from re:Invent, AWS said its managed AI service Amazon Bedrock now supports fine-tuning for the Meta Llama 2 and Cohere Command Light large language models, along with its own Amazon Titan Text Lite and Amazon Titan Text Express foundation models (FMs), “so you can use labeled datasets to increase model accuracy for particular tasks.”

The company said fine-tuning “adapts the model’s parameters to produce outputs that are more specific to their business”, and that organizations with small, labeled datasets that want to specialize a model for a specific task can use “a small number of labeled examples in Amazon S3” to “fine-tune a model without having to annotate large volumes of data.”

“Bedrock makes a separate copy of the base foundation model that is accessible only by you and trains this private copy of the model. None of your content is used to train the original base models. You can configure your Amazon VPC settings to access Amazon Bedrock APIs and provide model fine-tuning data in a secure manner,” the company added, sharing more detail on the Amazon Bedrock product page and in its documentation.
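For readers wondering what “labeled examples in Amazon S3” might look like in practice: Bedrock’s model-customization documentation describes text training data as a JSON Lines file with one prompt/completion pair per line. The sketch below assumes that layout; the example records and the `to_jsonl` helper are hypothetical, and uploading the file to S3 and launching the fine-tuning job are left out.

```python
import json

# Hypothetical labeled examples: each record pairs an input prompt with the
# output the fine-tuned model should learn to produce.
examples = [
    {"prompt": "Classify the sentiment: The checkout page keeps timing out.",
     "completion": "negative"},
    {"prompt": "Classify the sentiment: Delivery arrived a day early.",
     "completion": "positive"},
]


def to_jsonl(records):
    """Serialise labeled examples as JSON Lines, one JSON object per line.

    Assumes the prompt/completion layout described for Bedrock text
    fine-tuning; raises KeyError if a record lacks either field.
    """
    lines = [
        json.dumps({"prompt": r["prompt"], "completion": r["completion"]})
        for r in records
    ]
    # Terminate the final record with a newline, as JSON Lines files usually are.
    return "\n".join(lines) + "\n"
```

Writing `to_jsonl(examples)` to a file such as `train.jsonl` and placing it in an S3 bucket would give a training dataset of the kind AWS describes; keeping each record to exactly two fields makes malformed rows fail early rather than during the job.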

To be updated with more detail as we have it from AWS.