A Bank of England study has revealed that growing numbers of UK financial services institutions are now using autonomous AI models, and warns that imprudent use of this technology could pose a systemic risk to the nation's economy.

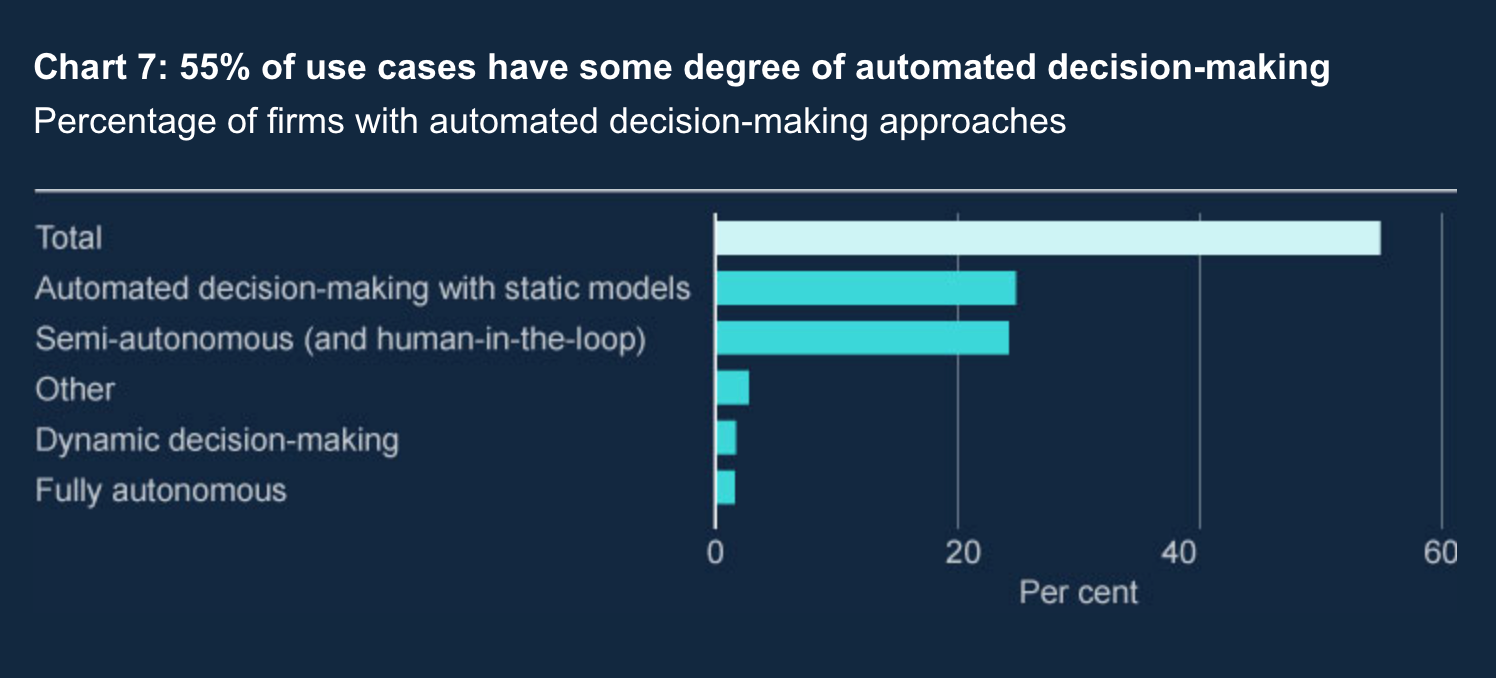

The survey revealed that one in 50 AI use cases in Britain involves the deployment of AI capable of "fully autonomous decision-making". A further 55% of use cases involve "some degree of automated decision-making", with 24% of these involving models that are semi-autonomous and "can make a range of decisions on their own" but require "human oversight for critical or ambiguous decisions."

Most models are used in "low materiality" functions. Their potential impact is limited because they apply only to a small number of customers, sit in business areas that are not high-risk, or do not handle large volumes of assets. However, 16% of AI use cases were rated as high materiality. These are most commonly found in general insurance, risk and compliance, and retail banking, areas that directly affect the financial health of citizens and businesses.

"While AI has many benefits, including improving operational efficiencies and providing customers with personalised services, it can also present challenges to the safety and soundness of firms, the fair treatment of consumers, and the stability of the financial system," the Bank warned.

The survey found that the top reported benefits of AI are in data and analytical insights, anti-money laundering (AML) and fraud prevention, and cybersecurity. Over the next three years, the largest increases in benefits are expected in operational efficiency, productivity and cost base.

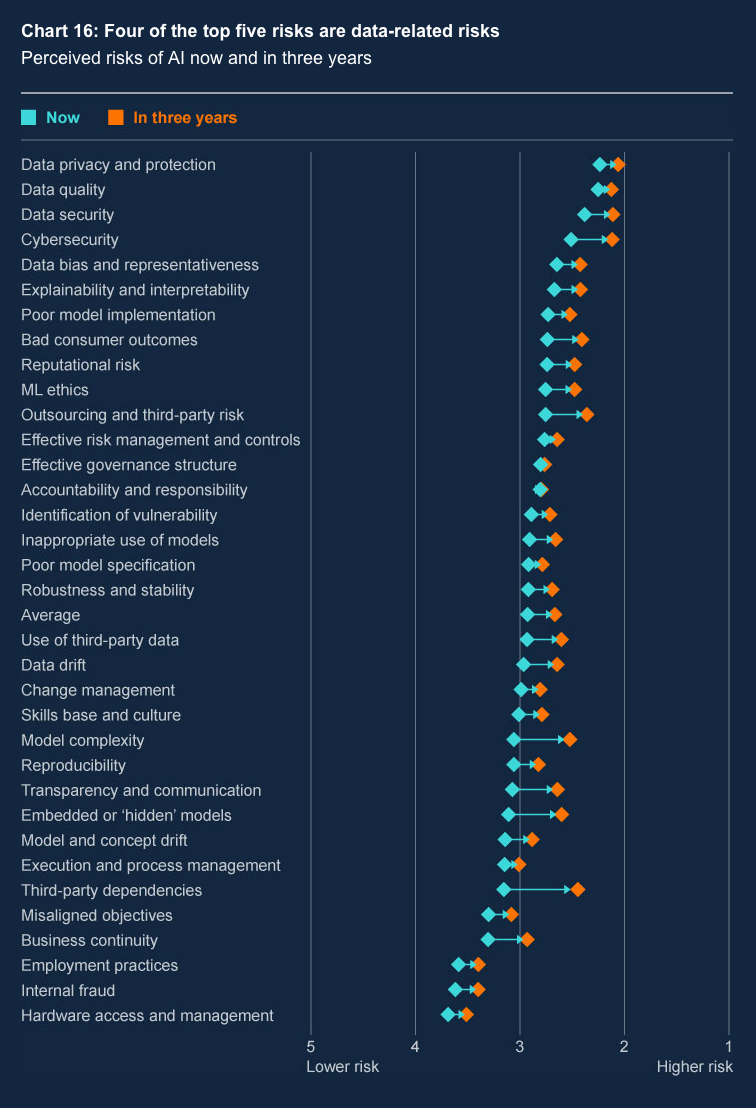

Of the top five perceived risks, four were related to data, with respondents naming data privacy and protection, data quality, data security, and data bias and representativeness as their top concerns.

The risks expected to increase the most over the next three years are third-party dependencies, model complexity, and embedded or "hidden" models.


Cybersecurity was highlighted as the greatest systemic risk both now and in three years' time, with the largest increase in systemic risk expected to arise from third-party dependencies.

The Bank produced a similar report in 2022 that focused primarily on the adoption of machine learning (ML) techniques. Since then, there has been a significant shift towards partially or fully autonomous AI models driven by the integration of generative AI technologies. These models are increasingly capable of making independent decisions without human intervention, marking a substantial evolution from earlier AI applications.

The latest survey found that 46% of firms have only a "partial understanding" of the AI technologies they use, compared with 34% claiming a "complete understanding". This gap is primarily due to the use of third-party models, which firms find harder to understand than those developed in-house.

The systemic risks of GenAI

In a speech delivered on Halloween (31 October), Sarah Breeden, Deputy Governor for Financial Stability, warned that AI can "have systemic risk consequences" that are hard to predict, let alone mitigate.

Increasing use of autonomous AI models also poses a "system-wide conduct risk", Breeden warned.

"If AI determines outcomes and makes decisions, what would be the consequences if, after a few years, such outcomes and decisions were legally challenged, with mass redress needed?" she asked.

"Generative AI models have distinctive features compared to other modelling technology," Breeden added. "They can learn and evolve autonomously and at speed, based on a broad range of data, with outputs that aren’t always interpretable or explainable and objectives that may be neither completely clear nor fully aligned with society’s ultimate goals."

She continued: "AI models used ‘in the front line’ of financial firms’ businesses could interact with each other in ways that are hard to predict ex ante [in advance]. For example, when used for trading, could we see sophisticated forms of manipulation or more crowded trades in normal times that exacerbate market volatility in stress?"

Breeden also warned that dependence on a small number of providers for services such as data storage creates a danger of cascading failures, and she echoed the security concerns raised by respondents to the Bank's latest survey.

"AI could also increase the probability of existing interconnectedness turning into financial stability risk – in particular through cyber-attacks," she said. "AI could of course improve the cyber defence capabilities of critical nodes in the financial system. But it could also aid the attackers – for example through deepfakes created by generative AI to increase the sophistication of phishing attacks."