Industry spending on OCP specification hardware will hit over $40 billion this year and the non-profit's "marketplace" is driving eyeballs to unique approaches.

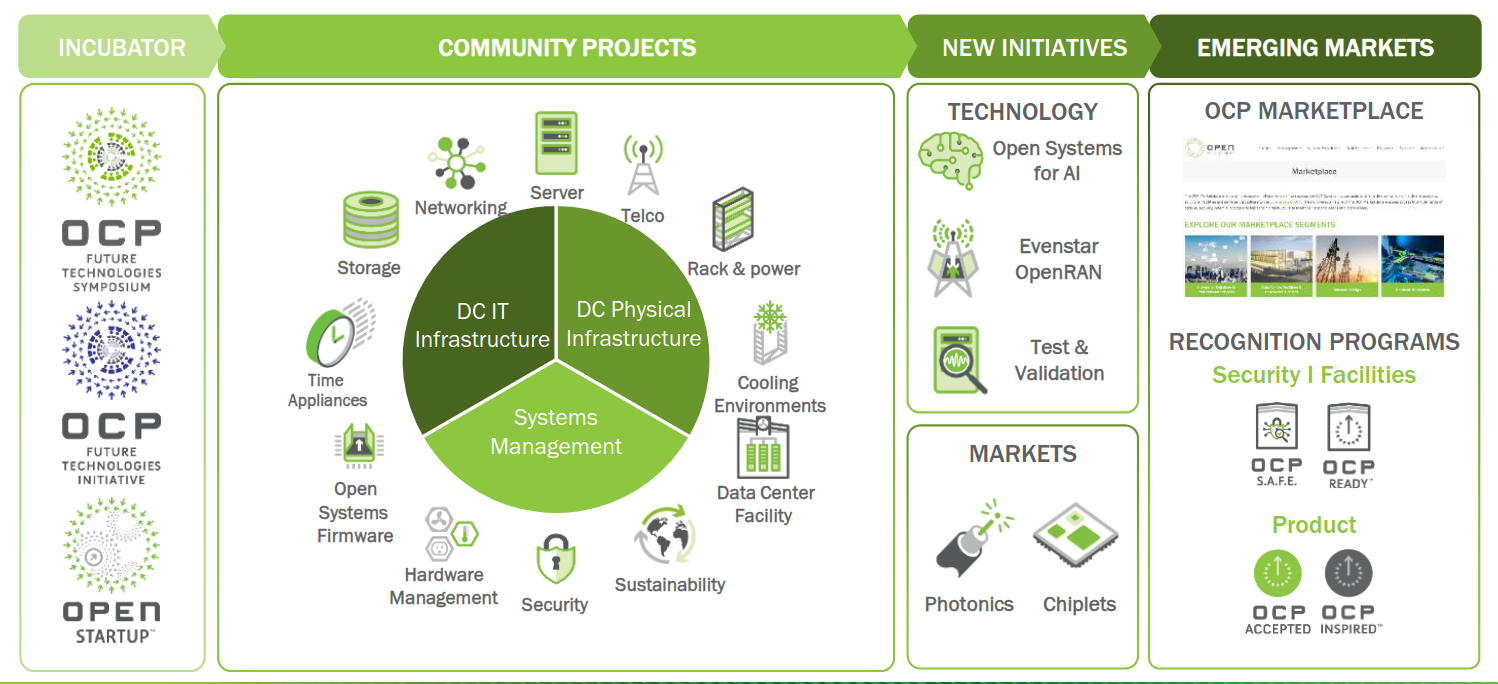

From liquid cooling, to modular hardware for data centres, and down to firmware, Open Compute Project (OCP)’s 150+ workstreams are building a powerful head of steam – and it’s not just hyperscalers involved either.

The Open Compute Project (OCP) was born out of a collaboration between Goldman Sachs, Intel, Meta, and Rackspace – heavily informed by Meta’s lessons learned building and optimising its own data centres.

The non-profit, with just 17 full-time staff, has had an outsized influence – as the sharp end of an industry spear focused firmly on an open source approach to infrastructure (and increasingly software) development.

The OCP describes itself as a “collaborative community focused on redesigning hardware technology to efficiently support the growing demands on compute infrastructure.” It does this by publishing open specifications for commodity hardware, with a focus on delivering across five tenets: efficiency, impact, openness, scalability, and sustainability.

OCP build spend to hit $41 billion in '24

As Chief Innovation Officer Cliff Grossner tells The Stack: “The important thing about the OCP is all the work done in our community is free and available in the open. There is no license fees to take it and use it.”

“We don't stop there, because it's nice to have things on paper, but ultimately we want product… that’s where our vendor members come in, because once the specs are in the open, the vendor members will pick that up, knowing that the operators from the data centers will buy the equipment if they build it.” (An IDC report for the OCP, published in October, suggested that IT industry spending on OCP community designs would hit $41 billion in 2024 and surge to some $74 billion by 2028.)

The non-profit says over 5,000 engineers from across its 400-strong membership base are working across 150 different workstreams – on projects as diverse as liquid cooling, modular servers and firmware.

Whilst hyperscalers are spending hugely on data centre infrastructure (Morgan Stanley predicts that hyperscaler capex will reach $300 billion next year and Amazon has said that it will spend $75 billion in 2024 “the majority on AWS”), enterprises are also working with OCP designs.

Some of them are doing so at individual project or product level (the OCP has projects and workstreams in play across cloud storage, network disaggregation, infrastructure software, timing appliances, and even disaggregation of chips to create an open chiplet-based ecosystem).

Others, like major insurer GEICO, are swapping public cloud for OCP design-based private clouds with modular hardware across the rack – and a healthy focus on using as much open-source software as possible to operate it, too.

On enterprise adoption of OCP-specification hardware, it’s a mixed bag of ambition across both equipment makers and downstream users, Grossner says.

Grossner explains: “There's a couple of components that have pervasive use across all companies” – he names HPE’s ProLiant servers for example, which will take an OCP 3.0 NIC, as well as other OEMs active here.

“Open networking is another big success story. AT&T, for example: 90% of their network is running OCP switches... So there'll be enterprises that are using components or specific areas of OCP. When you talk to somebody like GEICO, they're moving at a rack scale level toward OCP, and that's what the hyperscalers are doing. So you have degrees of OCP usage.”

The OCP also hosts a marketplace where, as Grossner tells The Stack, “vendor members can showcase their wares that are based on OCP designs” – he quickly adds the caveat that “it's called the marketplace, but when someone clicks there, they go through to the vendor website. There's no cut to the OCP. We don't make anything on the marketplace.”

The marketplace sees around 17,000 visitors every month, he says, and has helped showcase OCP-specification hardware from a range of vendors.

Grossner names Pegatron, Wiwynn, and ASRock, for example: “All of these ODMs have previously sold through the OEM model. [The marketplace] is just bringing them through now, when they can start to sell direct…”

“Once we have a specification that comes through, what large companies like is a multi-vendor strategy, and that is what we try to build throughout the supply chain. So trying to make sure that those specifications (which are paper) result in product which then have multiple manufacturers that can provide that specification [but] still allow for innovation on top.

“So that's the 20% to 30% that a company can innovate on that differentiates themselves. What's in it for the vendor is a seat at the table to make sure that their product roadmap aligns with what the hyperscalers and the rest of the world is doing, so that they're not operating in a silo and trying to determine what their current set of customers may need for their next product catalog… so they can have a collaborative approach to their roadmap.

“What's in it for the customer is a multi-vendor strategy; they can work off the same spec, but have multiple suppliers in various regions.” - Cliff Grossner

The OCP’s Steve Helvie adds on a call with The Stack that current “hot spots” across the non-profit’s workstreams include AI, liquid cooling, firmware, and interconnect specifications: “We have a very large community working on liquid cooling, many aspects of it,” he says.

“From rear door heat exchangers to cold plate, single phase cold plate, two phase immersion cooling… looking at the properties of different fluids and standardizations around that,” says Helvie, VP Emerging Markets, OCP. He adds: “Another hot spot is interconnect…

“We have alliances with UEC for the Ethernet back end and scale out. And we also are now building an alliance with UALink around the CPU to GPU…”

On the AI side, meanwhile, the OCP’s members are pushing ahead with the “Open Systems for AI Strategic Initiative”. NVIDIA and Meta are both contributing modular server and rack technologies: the former its MGX-based GB200-NVL72 rack and compute and switch tray designs; the latter its Catalina AI Rack architecture for AI clusters.

As the OCP put it last month:

“The contributions by NVIDIA and Meta, along with efforts by the OCP Community, including other hyperscale operators, IT vendors and physical data center infrastructure vendors, will form the basis for developing specifications and blueprints for tackling the shared challenges of deploying AI clusters at scale. These challenges include new levels of power density, silicon for specialized computation, advanced liquid-cooling technologies, larger bandwidth and low-latency interconnects, and higher-performance and capacity memory and storage.”

Many hands, it holds, make light work.
