Istio got off to a bumpy start. The open-source “service mesh” was envisioned as an ideal complement to Kubernetes – doing for the network what Kubernetes did for compute in the containerised applications world.

The hope was that it would become the de facto “service mesh” standard as organisations containerised their applications – and looked for a go-to toolkit to manage how they secured, monitored, and networked them.

Why the “service mesh” is important

The shift from monolith to microservices means a whole host of things have to happen over the network. Applications, disaggregated into smaller components, all with their own life cycles and associated rules, need to communicate with each other – and K8s does not natively offer robust routing, traffic rules, or strong monitoring or debugging tools.

The idea was that Istio would be the service mesh to coordinate this loosely coupled mesh of services, furnishing network features like canary deployments, A/B testing, load balancing, failure recovery and traffic observability, along with controls like mTLS encryption and policy management.

(For example, an end-to-end mTLS encryption layer for both east-west and north-south microservices traffic, decoupled from the application codebase, is compelling for a lot of users. Enter Istio...)

Deep in the “trough of disillusionment”

Istio was founded by Google, IBM, and Lyft in 2016. Governance of the project was an early source of contention and politics; in 2020, for example, IBM publicly lamented Google's failure to transfer governance to the Linux Foundation’s Cloud Native Computing Foundation (CNCF).

The end result: patchy uptake, and Gartner putting “service mesh” right at the bottom of the “Trough of Disillusionment” in this month’s inaugural “Hype Cycle” for Platform Engineering. Istio has not been nearly as popular as Kubernetes. Yet things may be about to change…

Microsoft gets involved and...

Google finally bit the bullet in 2022 and Istio, as of 2023, is a mature and “graduated” CNCF project. Microsoft is now meaningfully on board too: in 2023 Redmond jettisoned its rival Open Service Mesh, which it had launched in 2020, and belatedly joined Istio, some seven years after the project was founded.

Istio now has some of the biggest beasts of the technology world (Google, IBM, Microsoft) moving in service mesh lockstep. Governance is largely solved. There goes one big roadblock. What about the clunky architectural choices that have rendered service meshes problematic? "Ambient" Istio, which got a beta release eight weeks ago, aims to solve those too...

Istio without the sidecar?

Solo.io CEO Idit Levine has been involved in the project since its early days.

“We got it wrong,” she says of early industry efforts around the networking of containerised applications, not just of Istio’s design choices.

"Not just Istio: Cilium, Kong... everybody got it wrong!"

How so?

Much can be blamed on the “sidecar” proxy, an implementation adopted not just by Istio but also by alternatives such as Consul Connect, Kuma, Linkerd et al.

In a sidecar-based service mesh, if one microservice wants to talk to another, it has to do so via a sidecar proxy (see chart below for an example).

That is, a microservice talks to its own sidecar proxy, which talks to the sidecar proxy of the other microservice. This is clunky, but it allows for tight integration of capabilities, mTLS from Kubernetes “pod” to “pod”, and so on. But it has also been a pain to manage, comes with latency issues, and still doesn't entirely do what it says on the tin: make cloud-native infrastructure management something that is not a developer's problem.

Conscious uncoupling...

Stepping back, the service mesh allows companies to decouple the “operation” of an app from developer work delivering business logic.

Otherwise, it is far too easy for disparate application development teams to start owning a substantial chunk of the infrastructure estate. For example, if a company has built an app that needs to send traffic to another app, or elsewhere, its developers may start baking that networking logic into the build.


But the organisation may well need advanced routing, security controls, observability, metrics, traces, logs etc. Developers building out business logic are typically not well equipped to handle that, nor, most would argue, should they be spending their time wrangling cloud-native infrastructure; they should be building things that provide value to their customers…

Your Sidecar: Problematic dependencies

As Levine puts it: "And the idea was that [before the service mesh] every time that you wrote an application, your [IT or infrastructure team needed to say] ‘guys, you need to use that library so I will be able to make sure that your application is safe and observed. I'm forcing you to do that.’

"But now let's assume that you have a problem. You want to change something; you have a CVE, some security problem in the proxy; you need to go to the application owner and say ‘hey, first of all we deploy your application; second, we are going to break-change.’

"The idea of the service mesh was to abstract these controls away and put them in the proxy. But if you wanted to upgrade the proxy, you still need to talk to the application owner. Yes, it's not the [same] library anymore. But there is still a dependency, which was very problematic..."

Enter Ambient Istio...

A significantly new approach called “Ambient” Istio aims to tackle some of these bugbears around service mesh deployments, from such complex dependencies through to persistent latency issues.

Ambient mode Istio, co-developed by engineers at Solo and Google (who teamed up after they realised they had settled on the same solution to Istio’s issues) is not only faster but significantly more efficient, they claim.

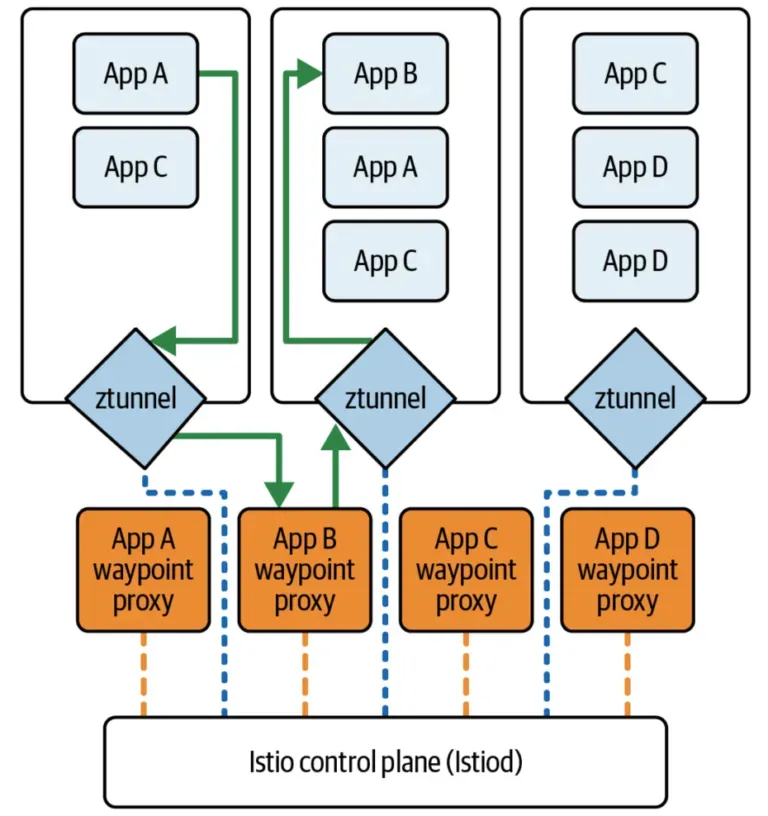

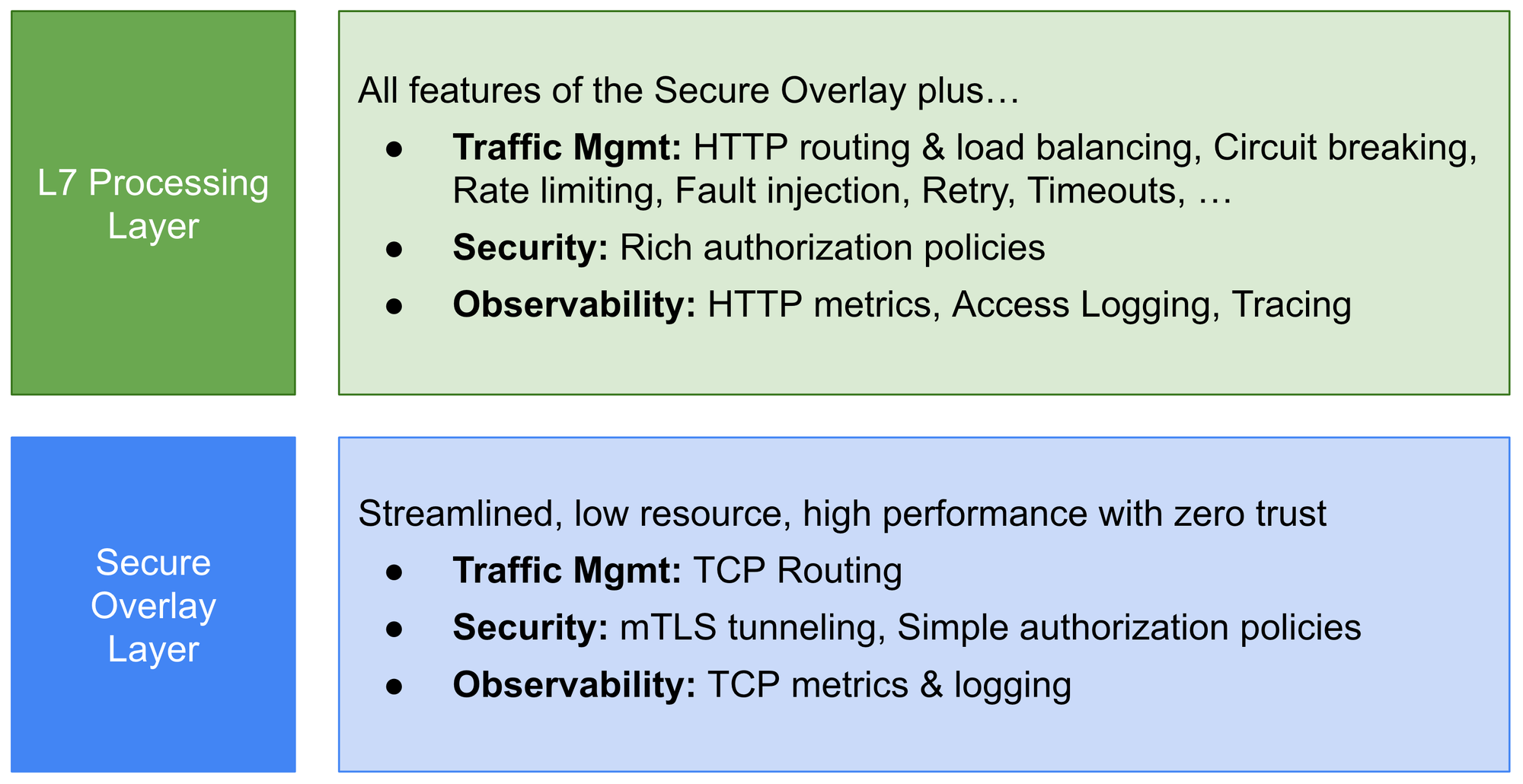

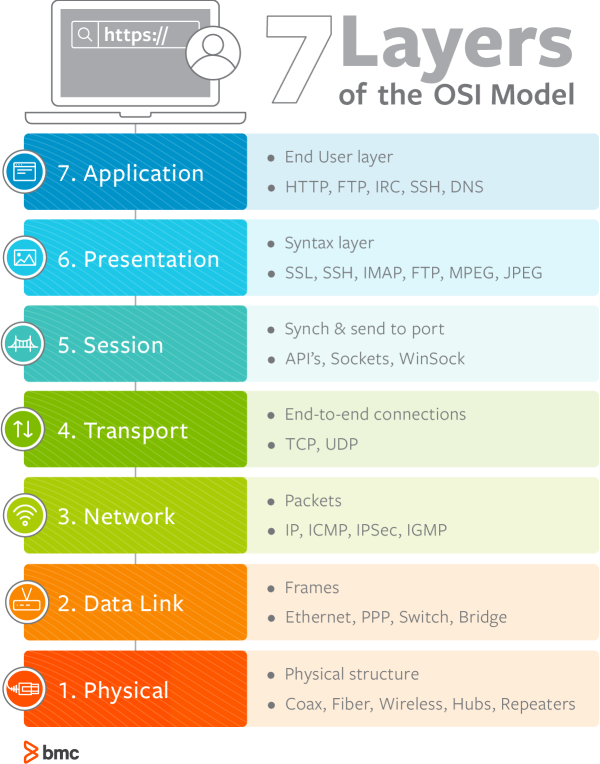

It involves a completely new architecture that separates the responsibilities of zero-trust networking (Layer 4) and Layer 7 policy handling, rather than bundling both into that clunky single sidecar proxy. This is done with two new components: ztunnels, per-node proxies that handle L4 concerns such as mTLS, and waypoint proxies, which handle optional L7 processing.
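For readers who want to see the difference in one place, here is a toy Python model (not Istio code, and not any Istio API) of the proxies a request passes through in each data-plane mode. The `Mode` enum, the `request_path` helper and the hop labels are hypothetical. The model is also simplified: it ignores, for instance, the case where client and server share a node, and it glosses over the fact that a ztunnel runs once per node rather than once per workload.

```python
from enum import Enum


class Mode(Enum):
    SIDECAR = "sidecar"        # classic Istio: a proxy sidecar in every pod
    AMBIENT_L4 = "ambient-l4"  # ambient: per-node ztunnels only (mTLS, L4 policy)
    AMBIENT_L7 = "ambient-l7"  # ambient plus an optional waypoint proxy for L7


def request_path(mode: Mode) -> list[str]:
    """Return a simplified list of hops from a client app to a server app."""
    if mode is Mode.SIDECAR:
        # L4 and L7 handling are bundled into each pod's sidecar.
        return ["client app", "client sidecar", "server sidecar", "server app"]
    # In ambient mode, the node-level ztunnels handle mTLS and L4 identity.
    hops = ["client app", "client-node ztunnel"]
    if mode is Mode.AMBIENT_L7:
        # L7 routing/policy is only paid for when a waypoint is deployed.
        hops.append("waypoint proxy")
    hops += ["server-node ztunnel", "server app"]
    return hops


for mode in Mode:
    print(f"{mode.value:>10}: " + " -> ".join(request_path(mode)))
```

The point the sketch illustrates: in ambient mode the L7 hop is optional and added only where needed, whereas the sidecar path puts the full proxy into every application pod.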

But wait! We're in the weeds now; let's rewind!

We've buried the lede...

Meet Idit Levine: passionate engineer, “solo” startup founder (unusually, her company was founded in and is run from Boston, not Silicon Valley), former professional basketball player, and Istio contributor.

Solo secured $135 million in Series C funding in 2022, achieving a $1 billion+ valuation and adding her company to a very short list of women-founded unicorns. Its roster of 200+ customers includes blue chips like BMW, Domino's Pizza, Mattel, T-Mobile and ING.

Solo provides two primary products: a cloud-native API gateway called “Gloo Gateway” (in the “Visionaries” corner of the Gartner Magic Quadrant for API Management) and a service mesh offering called “Gloo Mesh”.

Have your CAKES and eat 'em.

As a company, Solo builds on the “CAKES” open-source stack: CNI (Cilium, Calico); Ambient mode Istio; Kubernetes; Envoy Proxy (API Gateway); and SPIFFE (SPIRE). Solo ultimately provides a curated, tidily packaged distribution of open-source components with a single control plane and a range of enterprise capabilities layered on top.

Gloo Gateway buyers get a lightweight, Kubernetes-native API gateway (Solo boasts that it can support 353 million requests per day on a single CPU), while Gloo Mesh users get capabilities for platform engineering teams that want to handle security, resiliency, and observability across clouds and clusters, for VMs or microservices, via a single SaaS offering.

(If you are choosing to “buy it” rather than “build it”, and want to buy from a team that is also deeply involved upstream in the open-source projects that underpin so much of this cloud-native application world, Solo, in short, wants to be your go-to port of call and partner. Levine is also proud of how closely Solo works with customers to adapt to their requirements.)

Levine's enthusiasm is palpable and energising. She is also an engineer's engineer, happy talking waypoint proxies et al, and The Stack, woefully unprepared on a first call, requests a second one, which she generously agrees to. We scramble to do our homework; cloud-native application security, networking and management is not a solved problem, and the stack underpinning it is fragmented and, yes, complex for those new to it.

Solo's growth

Levine is candid about her journey as a "solo" woman founder and the mistakes she's made along the way, as well as what she got right.

Staying in Boston (Levine's husband is a professor at Harvard and they are raising children together on the East Coast; not always the easiest thing to juggle as a founder) meant she turned down VC requests to relocate to the Valley when first fundraising. She recalls: "[I thought] 'what are you talking about?!' This is a place with so [many] universities and smart people.

"I can teach people and we can create an amazing team! That's exactly what we did. We hired young people from university and basically [taught] them and today those guys are superstars," she says emphatically.

"The beautiful thing is the majority of them are still here."

Building a company at the cutting edge of cloud-native applications came with its challenges, of course. Engineering hires were not a problem, as she could manage them directly; building up a go-to-market (GTM) team was initially tougher, she freely admits. Now, Levine says, she's confident in the scale and capabilities of the team she has in place: "I'm keeping it around 150 people, because I want to be efficient. I don't love layers of managing.

"It's a very flat organisation on purpose."

Nor is she doing press because she is fundraising again. Solo has solid revenue growth and ample cash in the bank thanks to conservative management of a previous raise, and is focused on supporting customers and reshaping the very underpinnings of the open-source platforms that Solo is built on.

"I fell in love with tech"

We talk basketball (team spirit, etc.) and some of the challenges of running a business and a family (not easy), but it is the technology challenges her team is solving that really resonate: "I fell in love with tech," she tells The Stack. "I feel bad saying this but... when people are reading a book, I am reading about the innovation happening in this space; I really care about the cool stuff that's happening, I like solving problems! I like making complex technology easier and more accessible for users..."

It is Ambient mode Istio that Levine feels is particularly exciting – Solo has already baked the architecture into the firm’s “Gloo Mesh” and is moving to production with a small handful ("tens") of early adopters.

Why the slow pace, after Istio first announced Ambient in 2022?

(It only made it to a Beta release in May 2024.)

"It takes time to build really good software," she says unapologetically.

"You need to make sure that people running it are giving you feedback, that it's stable, it scales, you understand the use-cases. We've worked a lot with customers, with our field engineers to get it good to use in production. The feedback is amazing; it's like 'oh my god, that's so good.'"

Customers don't have to use it, either: classic, sidecar-based Istio is not being chucked out.

"We want to make it easy for our customers to explore the value provided by whatever service mesh pattern best suits their needs" as Solo put it back in 2022: "Classic sidecars and ambient waypoints both have a role to play in this evolving space, and we are truly proud to stand behind Gloo Mesh as a tool to simplify service mesh adoption" – and Levine's team have worked hard to "manage the nuances of evolving Istio architecture underneath Gloo Mesh’s familiar set of APIs", which have not changed, Solo says.

Lifting the hood a little more

Solo.io, Google, Microsoft, Intel, Aviatrix, Huawei, IBM and Red Hat, among others, all contributed to Ambient's Beta. The focus: ending a sometimes crippling reliance on sidecars for cloud-native application controls.

"Challenges that sidecar users shared with us include how Istio can break applications after sidecars are added, the large consumption of CPU and memory by sidecars, and the inconvenience of the requirement to restart application pods with every new proxy release" as Istio put it this May.

The project's contributors acknowledged frankly in 2022, when they first unveiled Ambient, that the sidecar was, well, a necessary evil and hardly a well-loved one (we reiterate: not just in Istio but beyond).

"Traditionally, Istio implements all data plane functionality, from basic encryption through advanced L7 policy, in a single architectural component: the sidecar. In practice, this makes sidecars an all-or-nothing proposition. Even if a workload just needs simple transport security, administrators still need to pay the operational cost of deploying and maintaining a sidecar. Sidecars have a fixed operational cost per workload that does not scale to fit the complexity of the use case" as Istio said.

Splitting L4 and L7 controls

"Ambient mesh takes a different approach. It splits Istio’s functionality into two distinct layers. At the base, there’s a secure overlay that handles routing and zero trust security for traffic. Above that, when needed, users can enable L7 processing to get access to the full range of Istio features.

"The L7 processing mode, while heavier than the secure overlay, still runs as an ambient component of the infrastructure, requiring no modifications to application pods" an early community blog explained.

That means that rather than going from nothing (or some janky alternative) straight to a full-fat service mesh with resource-gobbling sidecars, users can adopt elements incrementally: for example, starting with a secure L4 overlay and adding separate, optional L7 processing across their fleet as required.

It's not perfect. L7 processing happens in a "waypoint" proxy running in separately scheduled pods, which has led to some concerns around latency.

Istio's community believes that as these are "normal Kubernetes pods, they can be dynamically deployed and scaled based on the real-time traffic demands of the workloads they serve. Sidecars, on the other hand, need to reserve memory and CPU for the worst case for each workload" (leading to persistent over-provisioning by platform engineering teams.)
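The provisioning argument can be sketched with a toy model. This is a hypothetical illustration, not Istio's autoscaling logic: the `Workload` fields, the per-replica CPU figure and all of the numbers are invented, and in practice waypoints are scaled by whatever Kubernetes autoscaling a team configures.

```python
import math
from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    peak_cpu_m: int     # hypothetical worst-case proxy CPU need (millicores)
    current_cpu_m: int  # hypothetical proxy CPU actually needed now (millicores)


def sidecar_reservation(workloads: list[Workload]) -> int:
    # Each sidecar must reserve for its own workload's worst case.
    return sum(w.peak_cpu_m for w in workloads)


def waypoint_reservation(workloads: list[Workload], per_replica_cpu_m: int) -> int:
    # A shared waypoint is scaled on aggregate *current* demand:
    # just enough replicas (minimum one) to cover it.
    demand = sum(w.current_cpu_m for w in workloads)
    replicas = max(1, math.ceil(demand / per_replica_cpu_m))
    return replicas * per_replica_cpu_m


# Invented numbers, chosen only to show the shape of the argument.
fleet = [
    Workload("checkout", peak_cpu_m=500, current_cpu_m=120),
    Workload("catalog", peak_cpu_m=300, current_cpu_m=60),
    Workload("reviews", peak_cpu_m=200, current_cpu_m=30),
]

print("sidecars reserve:", sidecar_reservation(fleet), "m")
print("waypoint reserves:", waypoint_reservation(fleet, per_replica_cpu_m=250), "m")
```

With these made-up figures the sidecars reserve 1000m of CPU while a single waypoint replica reserves 250m. The sketch deliberately leaves out per-node ztunnel overhead and the extra network hop (and its latency) that waypoints introduce, so it shows the shape of the trade-off rather than a benchmark.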

"I'm very excited about the future"

With meaningful community work going on, the service mesh's time is coming and Istio is going to get a new lease of life, Levine believes.

After all, the challenges of networking, securing and managing containerised, cloud-native applications are not going away, and some of the industry's sharpest engineers are now working together to tackle known problems.

(No troughs of disillusionment here.)

Mistakes made by the community have been recognised. Istio is being radically overhauled: "I'm very excited about the future," she says.

"And it's not only me. If you look at the community, there is starting to be a lot of excitement there. All the big clouds are in" (AWS, for example, has talked about Istio's "indispensable capabilities") "because their customers are asking about it all the time. There is a lot of good stuff coming up."

Questions? Istio has new user guides on adopting Ambient for TLS and L4 authorisation policy, traffic management, rich L7 authorisation policy, and more. There's an ambient channel in the Istio Slack, and discussion on GitHub. There's also Solo: Levine gives every impression that getting deep into the weeds of how to network, secure and generally manage cloud-native applications is a genuine delight, and that she'd be happy to talk about it agnostically.
