It's been just under two years since the launch of ChatGPT, which surged to 100 million users in a matter of weeks to become the fastest-adopted consumer app in history. In the enterprise, security leaders have been slightly slower to welcome Generative AI into their organisations.

However, the same can certainly not be said of their colleagues, who have taken to it with aplomb, creating a threat that's already been dubbed Shadow AI.

To understand how CISOs are responding to GenAI as ChatGPT approaches its second birthday, The Stack spoke to Laura Robinson, Program Director of the Executive Security Action Forum (ESAF), the RSA Conference's community of Fortune 1000 CISOs.

One clear takeaway from the forum's discussions and research is that Shadow AI stands out as a major (and growing) risk.

"One CISO formed a use case review board, which quickly revealed just how widespread GenAI use had become within various departments," she says.

"They received hundreds of proposals from different teams. In organisations, there may be many unofficial projects underway."

Gaining visibility of the problem is a difficult task in and of itself. Tackling it is even more challenging.

We ask Robinson if the threat is so bad that it's like "shadow IT squared".

“That’s a good way to describe it,” she says.

Generating new risk

The forum's first discussions on the topic of GenAI took place in the early spring of 2023. By the beginning of 2024, members had started to deploy GenAI in their organisations, and 100% now plan to implement it in the next 12 months for "a range of business use cases".

Adoption levels and applications vary significantly by company, industry and department. For instance, marketing functions or media companies might use ChatGPT to generate content, while defence firms might explore much more sophisticated and potentially risky GenAI applications.

“General adoption doesn’t mean uniformity in use cases,” Robinson says. “CISOs see this as a multi-year journey. At the beginning, it was so new. Now, CISOs have actual implementations to evaluate, especially concerning the data they’re using, as risks can vary by use case.

“For instance, with an internal customer support bot to assist with IT tasks, the main concern might be proprietary data leakage. You wouldn’t want the chatbot disclosing trade secrets to an employee without clearance. In a different application, like healthcare, where they might be using AI to filter insurance claims, the concern shifts to patient data privacy.”

The sheer number of use cases can be overwhelming. One CISO told the forum they set up a review board to assess ideas on how to use GenAI, only to be met with 800 proposals and an “unhealthy degree of chaos”.

Speaking anonymously, that CISO told the group: “We didn’t know what to expect, because we really had no central process or visibility inside of the company to have a sense of what was happening GenAI-wise. And we just got flooded with use cases.

“Even in departments that one would expect to have low technology acumen, there was somebody doing something with GenAI, exploring how they could make their business process or function better.”

As the proposals build up, security leaders must juggle the challenges created by a new technology being used without adequate permission and protection across the business, alongside a range of other emerging issues.

“The role of a CISO is constantly evolving, especially as companies adopt more digital technologies,” Robinson tells us. “As cyber risks increase, the responsibilities of CISOs naturally shift.

“Considering the rapid pace of change, CISOs don’t view it as something new; they’re accustomed to constant evolution. The pace has accelerated with technologies like GenAI, yet this is part of what they manage - adapting to a faster rhythm.

“Within our group, virtually every company is either implementing or exploring GenAI, unlike the early cloud days when uptake was slower, particularly in regulated sectors. While not all companies may apply GenAI universally, they’re investigating its uses and planning for adoption soon.”

How to respond to Shadow AI in the enterprise

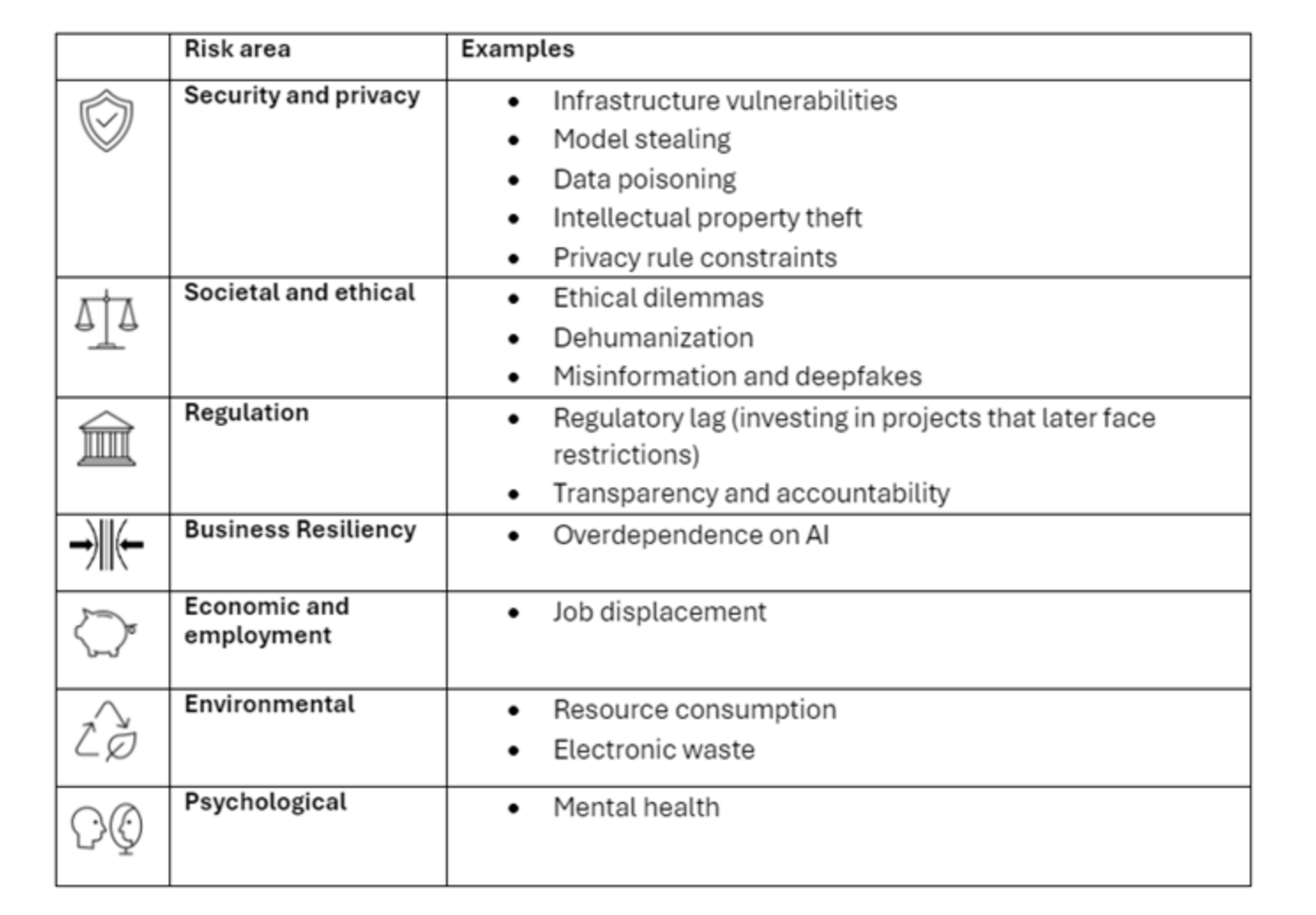

The forum agrees that a wise strategy is to establish a review board to assess GenAI use cases and proposals - and then wait for the storm to hit. Members of this board should have diverse experience and knowledge, enabling them to grapple with topics such as ethics, privacy and legal concerns, as well as sustainability and human resources, to deeply embed security in a wider business (and societal) context.

This group could be led by a CISO but may benefit from the involvement of a job title that's becoming increasingly common: the Chief AI Officer. People in these emerging roles may report to the CIO or (less often) the CISO. But they may also report straight to the CEO or COO, depending on the organisation’s structure.

To effectively assess the security of GenAI projects, CISOs are advised to broaden their evaluation criteria beyond traditional security assessments to address the technology's unique challenges. First, the business value of the project must be clear, factoring in costs, benefits, scope, and urgency to determine if the investment is justified. Second, the suitability of the process should be assessed to ensure it is well defined and a good fit for AI. Finally, resources are crucial. CISOs need to verify there is an adequate supply of AI implementers, subject matter experts, and data owners available to support the project.

Additionally, the data used in AI projects must be carefully examined for availability, quality, completeness, accuracy, and proper classification, as these factors influence AI effectiveness and security. Adequate technological infrastructure is also essential to support the demands of AI, whilst explainability is critical to ensure transparency across all processes and build trust with end users, as AI systems must be clear and understandable in their functions and decision-making processes.
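As a rough illustration only, the criteria above could be captured as a simple intake checklist for a review board. The sketch below is in Python; the `GenAIProposal` class, its field names and the `unmet_criteria` helper are all hypothetical, invented for this example rather than drawn from the forum's research.

```python
from dataclasses import dataclass, fields


@dataclass
class GenAIProposal:
    """Hypothetical intake record for one GenAI use case put to a review board."""
    name: str
    business_value_clear: bool     # costs, benefits, scope and urgency weighed up
    process_well_defined: bool     # the process is well defined and a good fit for AI
    resources_available: bool      # AI implementers, subject matter experts, data owners
    data_fit_for_use: bool         # availability, quality, completeness, accuracy, classification
    infrastructure_adequate: bool  # technology can support the demands of AI
    explainable: bool              # functions and decisions are clear to end users


def unmet_criteria(proposal: GenAIProposal) -> list[str]:
    """Return the names of the criteria the proposal does not yet satisfy."""
    return [
        f.name
        for f in fields(proposal)
        if getattr(proposal, f.name) is False
    ]


# Example: an illustrative proposal that lacks staffing and explainability.
helpdesk_bot = GenAIProposal(
    name="Internal IT support chatbot",
    business_value_clear=True,
    process_well_defined=True,
    resources_available=False,
    data_fit_for_use=True,
    infrastructure_adequate=True,
    explainable=False,
)
print(unmet_criteria(helpdesk_bot))  # ['resources_available', 'explainable']
```

A real review would weigh these factors with far more nuance than a yes/no flag allows, but even a basic structure like this gives a board facing hundreds of proposals a consistent way to see which ones are not ready.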

“For CISOs, AI is just one more area they must address,” Robinson says. “Technology changes are a constant challenge, along with various regulations. GenAI is simply the latest in a series of developments they need to manage. It’s viewed as potentially transformative, with applications becoming increasingly pervasive. The scenario is much like the advent of cloud computing.”

It's not just regulations that can create headaches for security leaders.

"Specifically with GenAI, CISOs now face additional challenges around evaluating the ethics and trustworthiness of AI systems," Robinson continues. "While ethical analysis isn’t entirely new, it’s now a critical part of risk assessments in a way that’s more in-depth than with typical SaaS applications."

To respond to the threats, CISOs across the Fortune 1000 are already starting to experiment with AI-powered defences.

A CISO who is a member of the group puts forward a categorisation model which sets out four stages of AI capability development: analysis, assistance, augmentation, and autonomy. Autonomy represents the “ultimate stage”, Robinson says, where systems make decisions and act independently. Currently, most AI systems are in the analysis and assistance phases, with plans to advance to higher levels over time.

“This evolving technology race between cyber threats and defenses is top of mind,” Robinson continues. “It’s about speed and automation, especially with threats that could occur in milliseconds. CISOs are already building systems to match this speed, as it will eventually be a ‘machine versus machine’ scenario, where humans alone won’t be able to keep pace. We’re not there yet. Autonomous AI is still some way off, but it’s on the horizon.”

And when that tech arrives, it's not just the bad guys whose destructive power will be levelled up. The insider will be more of a threat than ever. Shadow AI is a problem that is only just starting to rear its head - which means that preparing to tackle it cannot be delayed.