Few sectors are more attuned to the winds of change than retail: retailers typically feel shifts in customer behaviour before other companies, and have to be highly responsive to protect market share.

As a result, retailers tend to be unusually proactive in exploring opportunities to improve customer experience and drive efficiencies across the organisation.

That’s driving many to be particularly innovative with generative AI.

The numbers bear this out: Accenture found that 93% of retail CxOs plan to scale up their investments here over the next five years, while 72% of retailers plan to use AI to fundamentally reinvent how they operate.

Groceries via Uber?

“Retail has evolved significantly in recent years,” says Genevieve Broadhead, global lead for retail at MongoDB. “I never thought I'd be buying my groceries on an Uber-like delivery app, but that is what people are doing!”

“Retail went from single channel in-store, and then e-commerce… and now omnichannel where you are selling direct-to-consumer, you've got the stores, you've got the apps and you are integrating into third parties…”

Data deliberations

Whether for innovation around product decisions such as design, demand forecasting, or distribution strategies, or more customer-facing areas, a vast array of information needs to be brought together from different sources.

Many retailers, however, are finding their legacy systems can’t manage the multi-modal data structures required to power generative AI applications – nor respond fast enough to the needs of app developers in this market.

Unshackling developers

For a one-stop-shop solution, many are turning to MongoDB Atlas – an integrated suite of data services centred around a multicloud database that has been designed to accelerate and simplify how users build with data.

MongoDB’s flexible document model lets an application’s data schema evolve as its needs change, while the platform can also scale out horizontally to enterprise levels and comes with robust security protections.

Accessing the AI ecosystem

RAG (retrieval-augmented generation) has emerged as the architectural approach of choice for exploiting generative AI in retail. RAG architectures improve the efficacy of large language model (LLM) applications by retrieving the contextual data required to fulfil tasks or answer questions, which means fewer problems such as hallucinations, inconsistencies or black-box outputs.
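To make the retrieve-then-generate pattern concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not MongoDB's or any retailer's implementation: the three documents, the `retrieve` and `build_prompt` helpers, and the word-overlap scoring are all hypothetical, and simple keyword overlap stands in for the vector search a production RAG system would use. The resulting prompt would then be passed to an LLM of your choice.

```python
from collections import Counter

# Hypothetical mini knowledge base, invented purely for illustration.
DOCUMENTS = [
    "Returns are accepted within 30 days with a receipt.",
    "Oat milk is stocked in the chilled dairy-alternatives aisle.",
    "Same-day delivery is available for orders placed before noon.",
]


def tokenize(text: str) -> list[str]:
    """Lowercase the text and strip basic punctuation from each word."""
    return [word.strip(".,?!").lower() for word in text.split()]


def score(query: str, doc: str) -> int:
    """Count words shared by query and document (a stand-in for vector similarity)."""
    query_words = Counter(tokenize(query))
    doc_words = Counter(tokenize(doc))
    return sum(min(query_words[w], doc_words[w]) for w in query_words)


def retrieve(query: str, k: int = 1) -> list[str]:
    """Return the k documents that best match the query."""
    ranked = sorted(DOCUMENTS, key=lambda doc: score(query, doc), reverse=True)
    return ranked[:k]


def build_prompt(query: str, k: int = 1) -> str:
    """Combine retrieved context with the question; this is what the LLM would see."""
    context = "\n".join(retrieve(query, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    print(build_prompt("When is same-day delivery available?"))
```

Because the model is asked to answer from retrieved context rather than from memory alone, the answer can be traced back to a specific source document.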

However, too often, says Broadhead, “AI adoption requires migrating data using complex pipelines and infrastructure; with data duplicated in different systems and companies relying on ETL and specialised vector databases.”

To simplify what are rapidly becoming fragmented AI environments and scale to production deployment, retailers need to securely unify operational data and vectors for AI, she says, with MongoDB Atlas well placed to help.

Get RAG right and retailers can build personalised product recommendations, dynamic content generation, visual search optimisations, customer sentiment analysis and more, she explains to The Stack.

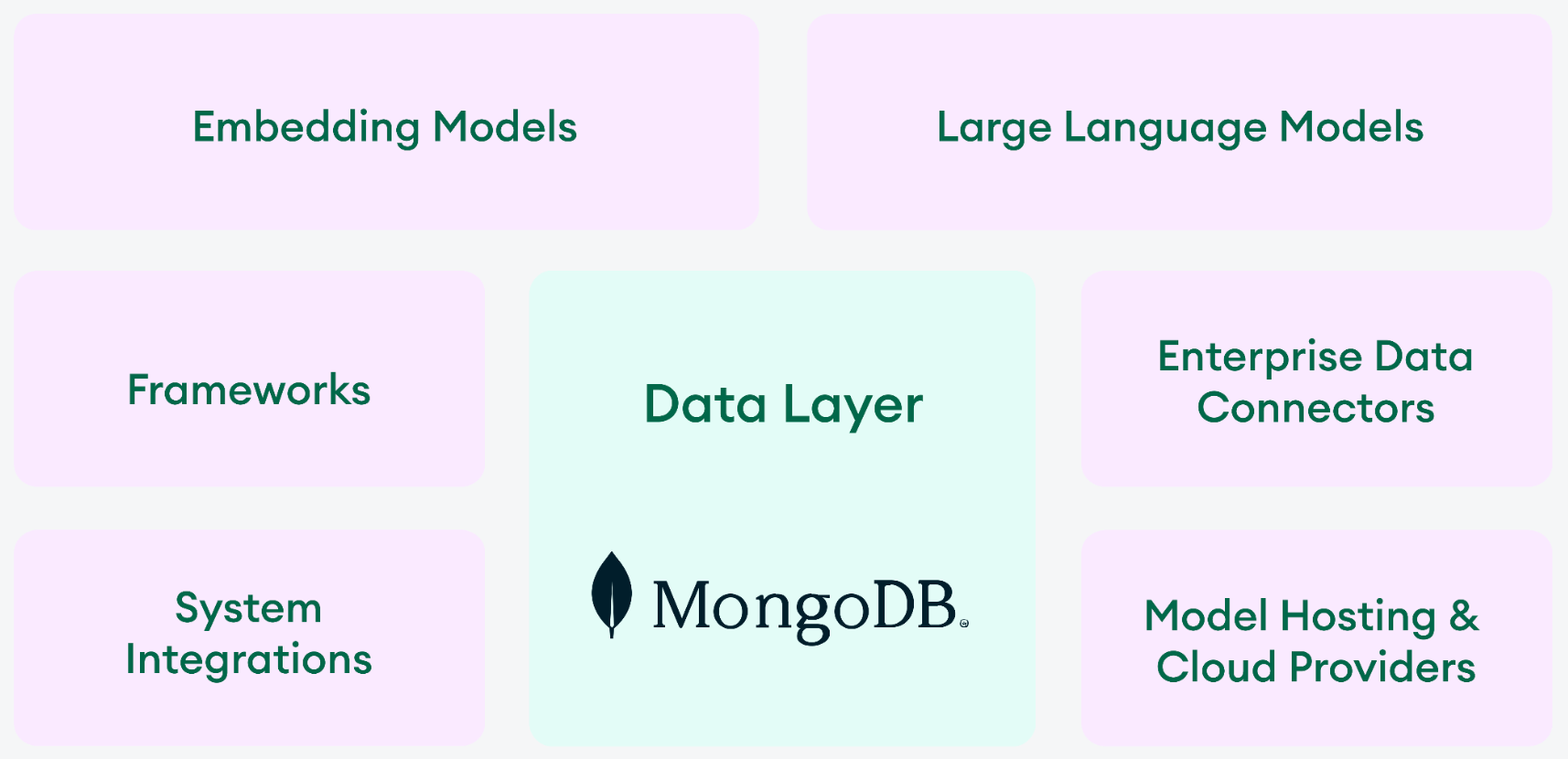

Key to the work MongoDB is doing is its MongoDB AI Applications Program (MAAP), which helps organisations rapidly build and put modern applications into production, at scale, by providing them with reference architectures, an end-to-end technology stack that includes integrations with technology providers, professional services, and a coordinated support system.

“Through MAAP, we are partnering with AI technology ecosystems, we are giving you sample code. We're showing you how to build certain architectures or certain use cases to help you get up and running when it comes to AI,” says Broadhead. “Developing AI applications quickly will differentiate the winners and losers of retail in the coming years and MongoDB is a key facilitator of innovating at the speed required.”

We’re speaking in Berlin, and she swiftly turns to a local example.

German multinational Delivery Hero delivers everything from groceries and flowers to coffee and medicine directly to customers in over 70 countries.

The company’s data team built and deployed an AI solution on Atlas, providing hyper-personalised product recommendations in real time.

When a rider picking up a customer order in store comes across an item that is not available, the Delivery Hero picking service uses MongoDB Atlas Vector Search to recommend up to 20 suitable replacement items. Atlas semantically searches across vector embeddings of the product catalog to identify similar products with its approximate nearest neighbor algorithms.
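The idea of finding replacements by embedding similarity can be sketched in a few lines of Python. Everything here is hypothetical: the four-item catalogue, the hand-written three-dimensional vectors (real embeddings come from a model and have hundreds of dimensions) and the `replacements` helper. Note also that this sketch does an exact, brute-force scan with cosine similarity, whereas Atlas Vector Search uses approximate nearest neighbour algorithms to get comparable results quickly at catalogue scale.

```python
import math

# Hypothetical toy embeddings, invented purely for illustration.
CATALOG = {
    "whole milk 1L": [0.9, 0.1, 0.0],
    "semi-skimmed milk 1L": [0.8, 0.2, 0.0],
    "orange juice 1L": [0.1, 0.9, 0.1],
    "dish soap": [0.0, 0.1, 0.9],
}


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity: higher means the two vectors point in more similar directions."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def replacements(missing_item: str, limit: int = 20) -> list[str]:
    """Rank every other catalogue item by similarity to the unavailable one."""
    target = CATALOG[missing_item]
    scored = [
        (name, cosine(target, vector))
        for name, vector in CATALOG.items()
        if name != missing_item
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return [name for name, _ in scored[:limit]]


if __name__ == "__main__":
    print(replacements("whole milk 1L", limit=2))
```

In this toy catalogue, the closest match to the missing whole milk is the semi-skimmed milk, with dish soap ranked last.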

“With Atlas Vector Search we can compose sophisticated queries that quickly filter across product data, customer preferences and vector embeddings to precisely identify hyper-relevant product recommendations in real time,” says Mundher Al-Shabi, Senior Data Scientist at Delivery Hero.

“Within the Atlas platform, we can store, index and query vector embeddings right alongside our product and customer data, with everything fully synchronised… our developers benefit from a consistent query syntax and a single driver, which reduces their learning curve and increases their productivity. We have a more agile, streamlined and efficient platform architecture that enables us to build and deploy faster and at lower costs.”
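As a rough sketch of what such a combined query might look like, the Python function below builds an aggregation pipeline using Atlas's `$vectorSearch` stage with a metadata filter. This is not Delivery Hero's actual query: the index name, the field names (`embedding`, `category`, `in_stock`, `name`) and the `build_replacement_pipeline` helper are assumptions made for illustration. The function only constructs the pipeline; running it would require an Atlas cluster with a matching vector index, in which the filtered fields are also declared as filter fields.

```python
def build_replacement_pipeline(
    query_vector: list[float], category: str, limit: int = 20
) -> list[dict]:
    """Build a pipeline that filters on product data and ranks by vector similarity."""
    return [
        {
            "$vectorSearch": {
                "index": "product_vector_index",  # hypothetical index name
                "path": "embedding",              # hypothetical embedding field
                "queryVector": query_vector,
                # The approximate search considers this many candidates
                # before returning the top `limit` results.
                "numCandidates": limit * 10,
                "limit": limit,
                # Hypothetical product-data pre-filter applied alongside the vector search.
                "filter": {
                    "$and": [
                        {"category": {"$eq": category}},
                        {"in_stock": {"$eq": True}},
                    ]
                },
            }
        },
        {
            # Return only the product name and its similarity score.
            "$project": {
                "_id": 0,
                "name": 1,
                "score": {"$meta": "vectorSearchScore"},
            }
        },
    ]


if __name__ == "__main__":
    pipeline = build_replacement_pipeline([0.12, 0.48, 0.31], category="dairy")
    print(pipeline[0]["$vectorSearch"]["limit"])
```

With a driver such as PyMongo, a pipeline like this would be passed to `collection.aggregate(pipeline)`, which is the same call developers use for any other aggregation query.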

Those wanting to follow suit or roll out their own innovations will find MAAP a hugely effective starting point, says Broadhead. With pre-designed but customisable architectures, it helps users build repeatable, accelerated frameworks for AI applications that can be extended to accommodate evolving AI use cases, whether RAG, agentic AI or more advanced RAG techniques.

Delivered in partnership with MongoDB.