The House of Lords' Justice and Home Affairs Committee says it has been "taken aback by the proliferation of Artificial Intelligence tools potentially being used without proper oversight, particularly by police forces across the country" in a concerned report on the use of new technologies in the justice system, which also warns that "an individual’s right to a fair trial could be undermined by algorithmically manipulated evidence."

"Facial recognition may be the best known of these new technologies but in reality there are many more already in use, with more being developed all the time. Algorithms are being used to improve crime detection, aid the security categorisation of prisoners, streamline entry clearance processes at our borders and generate new insights that feed into the entire criminal justice pipeline," the Committee said on March 30.

The market, Peers warned, is a "Wild West": "public bodies and all 43 police forces are free to individually commission whatever tools they like or buy them from companies eager to get in on the burgeoning AI market", yet public buyers often "do not know much" about the systems they are buying, and there are "no minimum scientific or ethical standards that an AI tool must meet before it can be used..."


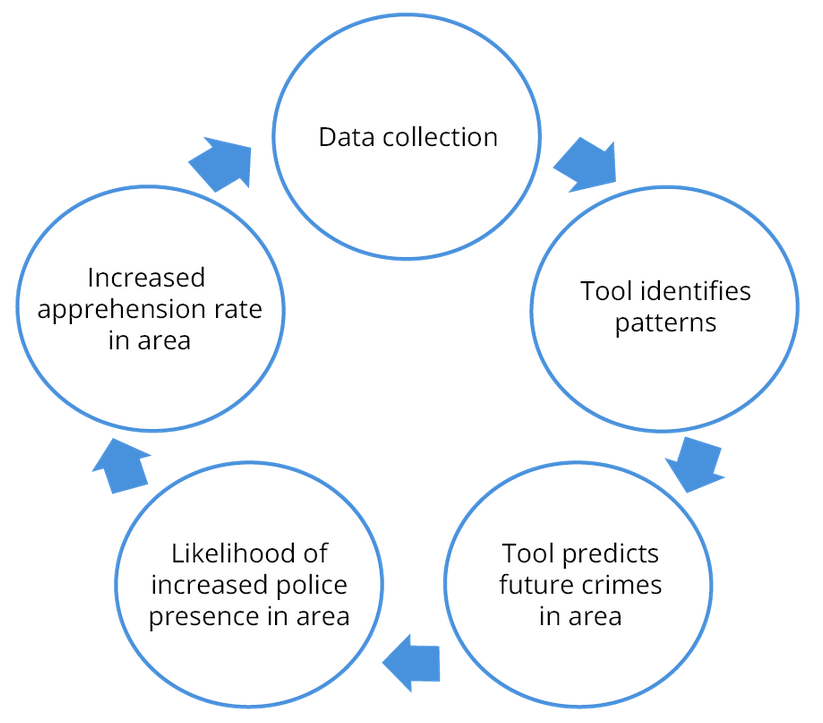

Among the tools deployed across the UK is one which uses data analytics and machine learning to "provide a prediction of how likely an individual is to commit a violent or non-violent offence over the next two years." (In oral evidence to the Committee, Kit Malthouse MP, Minister for Crime, Policing and Probation, described this as "a modern phenomenon of the police officer knowing who the bad lads were in the community.")

As with live facial recognition, ministers have been reluctant to legislate, preferring to let deployments be challenged in the courts. Meanwhile, existing rules spanning human rights, data protection, discrimination and public administration make it hard for police forces and their technology partners to get clarity on compliance. NCC Group told Peers that “there is very little legislation and regulation overseeing the safe and secure rollout of [Artificial Intelligence] and [Machine Learning]-based technologies”, and the Bar Council agreed: “For some technologies, such as AI, it is not clear that there is an effective existing legal framework.”

The report concludes that "a stronger legal framework is required to prevent damage to the rule of law" and recommends that ministers bring forward primary legislation to that end.

(In a written submission the Met Police called for a "code of practice [that] would provide a framework for ethical decision making when considering whether to use a new technology. Ideally, to ensure consistency, effective oversight, future-proofing and predictability, it would focus on technology by types rather than seeking to regulate a specific tool or deployment methodology. Areas it would be helpful to cover include, artificial intelligence, advanced data analytics, sensor data, automation and biometrics but in a way which could apply to applications from drones and ANPR to a staff database or property management system. The approach would be based around the policing purposes pursued, people’s expectations of privacy informed by community engagement, alternatives to the intrusion, and the means by which effectiveness and demographic performance can be assessed...")

Artificial Intelligence in the Justice System: Risks to a fair trial?

One contributor also told the Committee that algorithmic technologies could be used without the court being made aware, and that in some cases evidence may have been subject to “manipulation”. David Spreadborough, a forensic analyst, gave the example that algorithmic error correction could be built into CCTV systems, and that “parts of a vehicle, or a person, could be constructed artificially”, without the court being aware.

Another contributor suggested to the Committee in oral evidence that the judiciary may feel compelled to defer to algorithmic suggestions, rendering judges “the long arm of the algorithm”. Peers noted that a "solid understanding of where advanced technologies may appear, how they work, their weaknesses, and how their validity will be determined is therefore a critical safeguard for the right to a fair trial."

Oversight of this rapidly evolving "Wild West" is sprawling where it exists (there are over 30 public bodies, initiatives, and programmes playing a role*), the report warns, saying that "the number of entities and public bodies which have a role in the governance of these technologies indicates duplication and a lack of cohesion."

When it comes to the rapid rise of new technologies in the justice system, "there appears in practical terms to be a considerable disconnect across Government, exemplified by confusing and duplicative institutional oversight arrangements and resulting in a lack of coordination. Recent attempts to harmonise have instead further complicated an already crowded institutional landscape. Thorough review across Departments is urgently required," the House of Lords' Justice and Home Affairs Committee concluded in its report.

Ultimately, it suggested: "The Government should establish a single national body to govern the use of new technologies for the application of the law. The new national body should be independent, established on a statutory basis, and have its own budget."

Follow The Stack on LinkedIn

*These include:

- Her Majesty’s Inspectorate of Constabulary and Fire and Rescue Services

- The AI Council

- The Association of Police and Crime Commissioners (APCC), and its various working groups and initiatives, including the APCC Biometrics and Data Ethics Working Group

- The Biometrics and Forensics Ethics Group

- The Biometrics and Surveillance Camera Commissioner

- The Centre for Data Ethics and Innovation

- The College of Policing

- The Data Analytics Community of Practice

- The Equality and Human Rights Commission

- The Forensic Science Regulator

- The Home Office Digital, Data and Technology function

- The Independent Office for Police Conduct

- The Information Commissioner’s Office

- The National Crime Agency, and its TRACER programme

- The National Data Analytics Solution

- The National Digital and Data Ethics Guidance Group

- The National Digital Exploitation Centre

- The National Police Chiefs’ Council, and its eleven co-ordination committees, each responsible for a specific aspect related to new technologies

- The National Police Ethics Group

- The National Policing Chief Scientific Adviser

- The Office for AI

- The Police Digital Service, its Data Office and Chief Data Officer

- The Police Rewired initiative

- The Police Science, Technology, Analysis and Research (STAR) fund

- The Police, Science, and Technology Investment Board

- The Royal Statistical Society

- The Science Advisory Council to the National Policing Chief Scientific Adviser

- The Senior Data Governance Panel within the Ministry of Justice

- The specialist and generalist ethics committees of some police forces

- The Tackling Organised Exploitation programme