NVIDIA said it sold $12 billion in Blackwell architecture hardware in the last quarter alone across both cloud service providers and enterprises – and expects its forthcoming Blackwell Ultra GPUs to be easier to adopt.

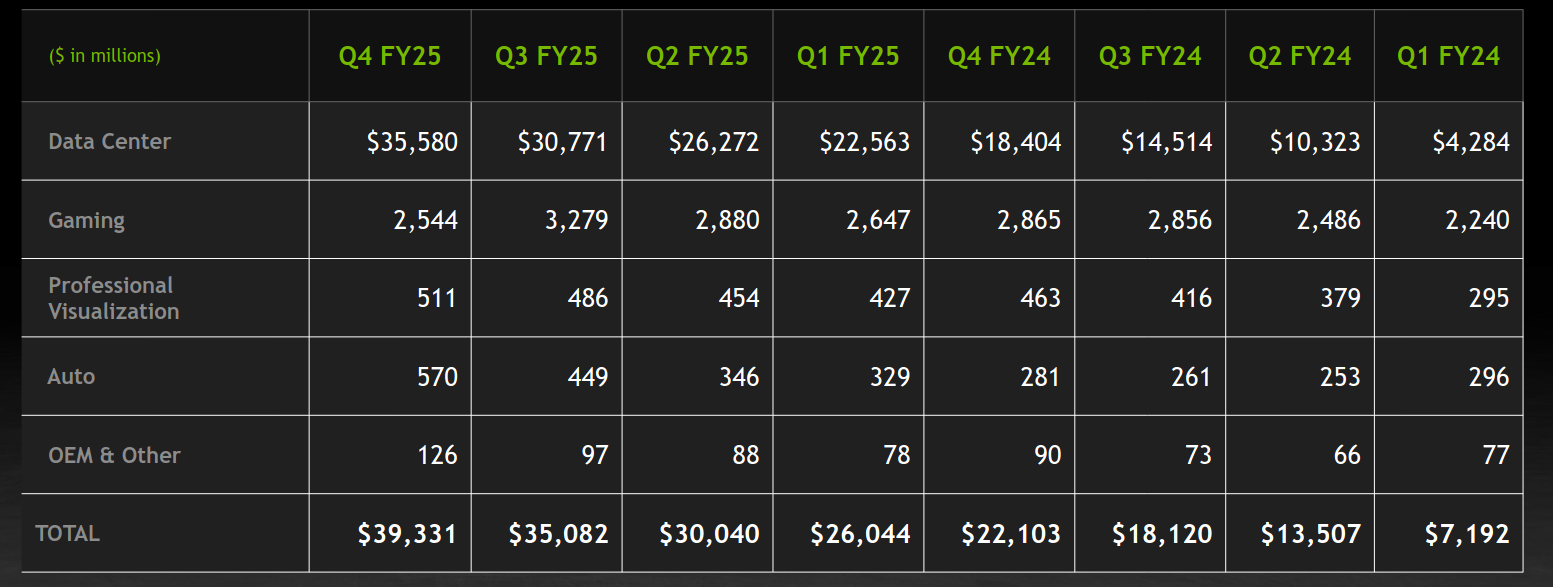

Major businesses keen to run their own AI inference in-house helped NVIDIA deliver record data centre segment revenues of $35.6 billion in Q4. CFO Colette Kress said on an earnings call: “Our Enterprise business… grew 2X – very similar to what we were seeing with our large CSPs.”

The company only reached full production of its Blackwell GPU architecture in late 2024 but plans to reveal a "Blackwell Ultra" in March. That will be built on the same architecture as Blackwell.

Blackwell Ultra: More plug and play?

CEO Jensen Huang said the company had moved past production “hiccups” with Blackwell and “Blackwell Ultra with new networking, new memories, and of course, new processors… is coming online.”

NVIDIA’s forthcoming Blackwell Ultra and “Vera Rubin” chipsets will be a lot easier to integrate into customers’ racks, Huang promised.

NVIDIA plans to reveal more about “Blackwell Ultra” next month.

He added: “This time between Blackwell and Blackwell Ultra, the system architecture is exactly the same. It's a lot harder going from Hopper to Blackwell because we went from an NVLink 8 system to an NVLink 72-based system. So, the chassis, the architecture of the system, the hardware, the power delivery, all of that had to change. This was quite a challenging transition. But the next transition will slot right in.”

Cloud accelerators not a threat: Huang

Asked about the future balance between “custom ASIC and merchant GPU”, Huang played down the threat posed by hyperscalers’ in-house ASIC AI accelerators (e.g. Microsoft’s “Maia” chip).

He suggested that the complexities of building software and workflows around multiple hardware propositions would mitigate the risk to NVIDIA’s growth. (Annual revenues were $130.5 billion, up 114%.)


“The ecosystem that sits on top of our architecture is 10 times more complex today than it was two years ago… bringing that whole ecosystem on top of multiple chips is hard. These things are not for the faint of heart.

“Just because the chip is designed doesn't mean it gets deployed. And you've seen this over and over again. There are a lot of chips that get built, but when the time comes, a business decision has to be made… our technology is not only more advanced, more performant, it has much, much better software capability and very importantly, our ability to deploy is lightning fast.”

Whilst debate continues over the extent to which the efficiency innovations of China’s DeepSeek lab will impact AI spending, Huang noted that “we're observing another scaling law, inference time or test time scaling, more computation. The more the model thinks the smarter the answer.

“OpenAI, Grok 3, DeepSeek-R1 are reasoning models that apply inference time scaling. Reasoning models can consume 100x more compute…”

In other news, Cisco is porting its NX-OS networking operating system to run on NVIDIA’s Spectrum networking hardware in a major partnership.

“The NVIDIA Spectrum-X Ethernet networking platform based on Cisco and NVIDIA silicon will form the foundation for many enterprise AI workloads. By enabling interoperability between both companies' networking architectures, the two companies are prioritizing customers' needs for simplified, full-stack solutions,” Cisco said on February 25.
