Microsoft AI researchers exposed 38 terabytes of data, including employees’ personal computer backups, passwords to Microsoft services and secret keys, for three years in a major security blunder that could also have let an attacker inject malicious code into exposed AI models.

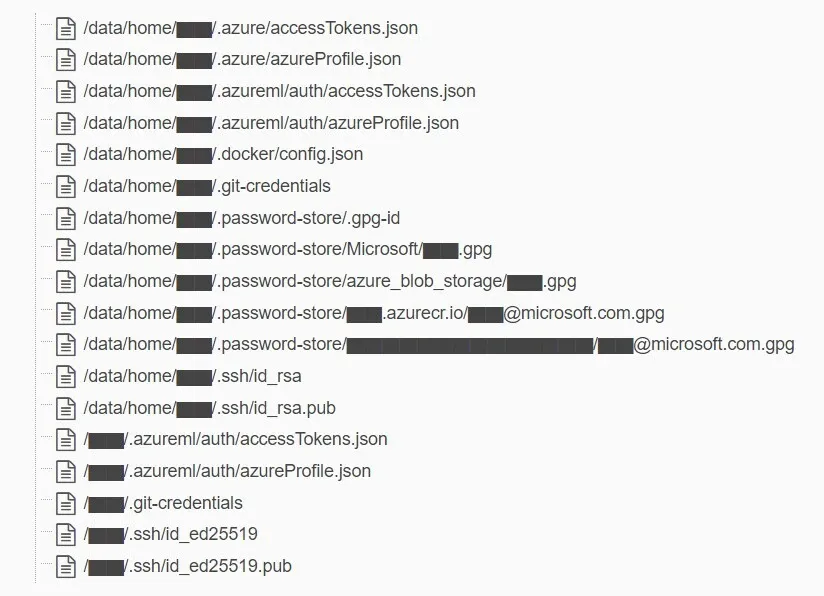

Scanning just a small portion of the data, security researchers at Wiz found credentials for Git, MS Teams, Azure, Docker and Slack, as well as personal “@microsoft.com” passwords along with private SSH keys (used for authentication), private GPG keys (used for encryption), and private Azure Storage account keys (used for file access), they told The Stack.

The incident happened because Microsoft’s AI research team published a bucket of open-source training data on GitHub using Azure’s “SAS tokens” (a way of sharing/restricting access to resources in your storage account). The token pointed readers to an Azure storage URL that was configured to share the entire storage account with "full control" (read and write) to all.

They had also set the SAS token's expiry date to October 6, 2051.
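To make the misconfiguration concrete, here is a minimal Python sketch of how a SAS URL's query string could be inspected for the three problems described above: account-wide scope, write-type permissions and a distant expiry. The helper name `audit_sas_url`, the seven-day lifetime threshold and the example URLs are assumptions for illustration, not anything Microsoft or Wiz published; the query parameter names (`srt`, `sp`, `se`) are those documented for Azure Storage SAS tokens.

```python
from datetime import datetime, timedelta, timezone
from urllib.parse import parse_qs, urlsplit

# Hypothetical helper: flags risky settings in a SAS URL's query string.
def audit_sas_url(url, max_lifetime=timedelta(days=7), now=None):
    now = now or datetime.now(timezone.utc)
    params = {k: v[0] for k, v in parse_qs(urlsplit(url).query).items()}
    warnings = []

    # 'srt' (signed resource types) appears on account-level SAS tokens.
    if "srt" in params:
        warnings.append("account-level SAS: not scoped to one container or blob")

    # 'sp' lists permissions as single letters; these ones allow changes.
    risky = sorted(set(params.get("sp", "")) & set("wdacup"))
    if risky:
        warnings.append("write-type permissions granted: " + "".join(risky))

    # 'se' is the expiry time in ISO 8601 (UTC).
    expiry = params.get("se")
    if expiry:
        expires = datetime.fromisoformat(expiry.replace("Z", "+00:00"))
        if expires.tzinfo is None:
            expires = expires.replace(tzinfo=timezone.utc)
        if expires - now > max_lifetime:
            warnings.append("expiry too far in the future: " + expiry)
    return warnings


# Illustrative URLs only; the account names and signatures are made up.
risky_url = ("https://exampleaccount.blob.core.windows.net/data"
             "?sv=2021-08-06&ss=b&srt=sco&sp=rwdlac"
             "&se=2051-10-06T00:00:00Z&sig=FAKE")
safe_url = ("https://exampleaccount.blob.core.windows.net/data"
            "?sv=2021-08-06&sr=c&sp=rl&se=2023-06-23T00:00:00Z&sig=FAKE")

if __name__ == "__main__":
    for warning in audit_sas_url(risky_url):
        print(warning)
```

A read-only, short-lived token scoped to a single container (the second example URL) passes this check cleanly, which is roughly the shape of token Wiz's advice points towards.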

The issue was discovered by cloud security firm Wiz and reported to Microsoft in June 2023. It comes as Microsoft continues to deal with the fallout caused by the theft of a cryptographic key and subsequent forgery of authentication tokens that resulted in multiple government customers having their email accessed by a group affiliated with China.

Wiz said: “The root cause was the usage of Account SAS tokens as the sharing mechanism. Due to a lack of monitoring and governance, SAS tokens pose a security risk, and their usage should be as limited as possible. These tokens are very hard to track, as Microsoft does not provide a centralized way to manage them within the Azure portal.”

Microsoft warns in its own guidance on the use of SAS (shared access signature) tokens that "it's not possible to audit the generation of SAS tokens. Any user that has privileges to generate a SAS token, either by using the account key, or via an Azure role assignment, can do so without the knowledge of the owner of the storage account. Be careful to restrict permissions that allow users to generate SAS tokens."

Wiz's prolific security researchers added: “Generating an Account SAS is a simple process… the user configures the token’s scope, permissions, and expiry date, and generates the token. Behind the scenes, the browser downloads the account key from Azure, and signs the generated token with the key. This entire process is done on the client side; it’s not an Azure event, and the resulting token is not an Azure object. Because of this, when a user creates a highly-permissive non-expiring token, there is no way for an administrator to know this token exists and where it circulates. Revoking a token is no easy task either — it requires rotating the account key that signed the token, rendering all other tokens signed by same key ineffective as well.”
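The point Wiz makes about client-side signing can be illustrated with a short Python sketch. It assumes a simplified token layout: the list of fields and their order here are illustrative only, and Azure's real string-to-sign is defined in its documentation and varies by service version. What the sketch does show faithfully is the mechanism: the signature is an HMAC-SHA256 computed locally with the account key, no server is contacted, and a token stops verifying once the key is rotated. The function names and key values are hypothetical.

```python
import base64
import hashlib
import hmac

# Hypothetical helper: signs token fields locally with the account key.
# Nothing here talks to Azure, which is why no audit event is produced.
def sign_token(account_key_b64, fields):
    key = base64.b64decode(account_key_b64)
    # Simplified layout: newline-joined fields (NOT Azure's exact format).
    string_to_sign = "\n".join(fields) + "\n"
    digest = hmac.new(key, string_to_sign.encode("utf-8"), hashlib.sha256).digest()
    return base64.b64encode(digest).decode("ascii")


# Hypothetical helper: what the service side does when a token is presented.
def verify_token(account_key_b64, fields, signature):
    expected = sign_token(account_key_b64, fields)
    return hmac.compare_digest(expected, signature)


# Made-up key material and token fields for illustration.
old_key = base64.b64encode(b"old-account-key-material").decode("ascii")
new_key = base64.b64encode(b"rotated-account-key-material").decode("ascii")
fields = ["exampleaccount", "rwdlac", "b", "sco", "2051-10-06T00:00:00Z"]

signature = sign_token(old_key, fields)

if __name__ == "__main__":
    print("valid with original key:", verify_token(old_key, fields, signature))
    print("valid after key rotation:", verify_token(new_key, fields, signature))
```

Because validity depends only on the key, rotating it is the blunt instrument Wiz describes: every other token signed with the same key fails verification at the same moment.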

Wiz's full blog is here.

Updated: Microsoft has played down the exposure, saying it spanned "Microsoft backups of two former employees’ workstation profiles and internal Microsoft Teams messages of these two employees with their colleagues. No customer data was exposed, and no other internal services were put at risk because of this issue" and added in a September 18 blog that "GitHub’s secret scanning service monitors all public open-source code changes for plaintext exposure of credentials and other secrets. This service runs a SAS detection, provided by Microsoft, that flags Azure Storage SAS URLs pointing to sensitive content, such as VHDs and private cryptographic keys. Microsoft has expanded this detection to include any SAS token that may have overly-permissive expirations or privileges."

Whilst it is not possible to audit the generation of SAS tokens, detecting post-generation activity is different, says Microsoft: once a token has been issued, it is possible to follow and audit it if it is checked into source code or otherwise mishandled...

More to follow.