Earlier this week, we revealed serious concerns around LinkedIn's decision to quietly start harvesting users' personal data to train Generative AI (GenAI) models.

Now the Microsoft-owned social network has performed a dramatic u-turn and announced the UK would no longer be involved in its controversial training program following "engagement" with the Information Commissioner's Office (ICO).

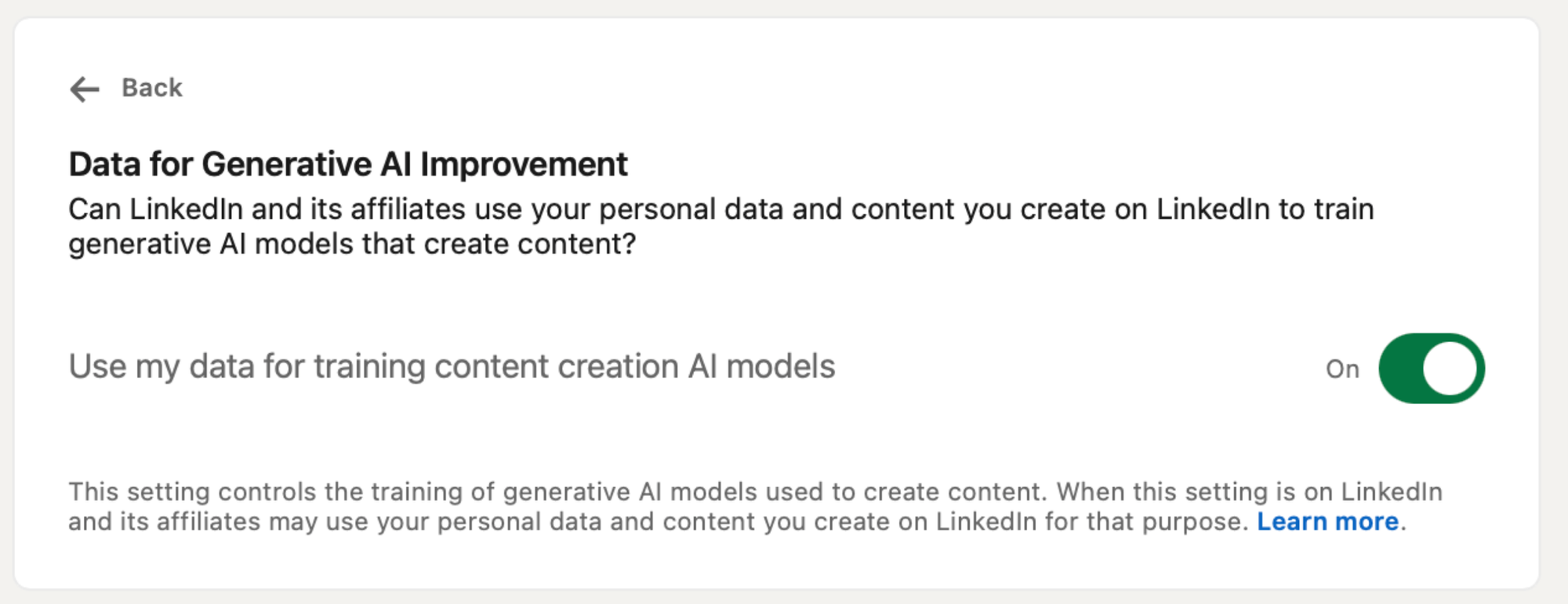

LinkedIn has removed the option, which was enabled by default, to share data with the company for model training.

The news that LinkedIn had backed down first appeared in an update to a blog post by Blake Lawit, SVP and General Counsel at LinkedIn, which sets out LinkedIn's new policies and announces that generative AI training will not take place in the UK. It was already known that users in Switzerland and the European Economic Area would be exempt from the experiment.

LinkedIn "will not provide the setting to members in those regions until further notice."

The General Counsel said new terms in the LinkedIn User Agreement will go into effect on November 20, including "new provisions relating to the generative AI features we offer."

"As technology and our business evolves, and the world of work changes, we remain committed to providing clarity about our practices and keeping you in control of the information you entrust with us," he wrote.

It is still unknown whether LinkedIn will train its AI models on data from users' DMs. We have asked LinkedIn for clarification several times and will update this article if we get an answer.

Stephen Almond, Executive Director Regulatory Risk at the Information Commissioner's Office, said: "We are pleased that LinkedIn has reflected on the concerns we raised about its approach to training generative AI models with information relating to its UK users. We welcome LinkedIn’s confirmation that it has suspended such model training pending further engagement with the ICO.

"In order to get the most out of generative AI and the opportunities it brings, it is crucial that the public can trust that their privacy rights will be respected from the outset.

"We will continue to monitor major developers of generative AI, including Microsoft and LinkedIn, to review the safeguards they have put in place and ensure the information rights of UK users are protected."

Why has LinkedIn stopped gathering data for GenAI in the UK?

We asked legal experts for their opinion on why LinkedIn has backed down. Vanessa Barnett, a technology partner at Keystone Law, said: “As with all technology, there is an element of how comfortable the users feel about what’s being rolled out. It’s possible that LinkedIn has taken stock of that, and taken another look at its legal obligations.

“I think it’s hard for LinkedIn to argue that deploying AI like this is not a change of purpose – this is a new use of data, which it should be getting meaningful consent for.

“This is partly due to the good old UK GDPR, but with one eye on the fact that we will likely over time see the so-called ‘Brussels effect’, and similar rules on this side of the Channel. This is why it’s so important to look at AI on a use case basis, and then making sure the right rules are being complied with.”

Ellen Keenan-O'Malley, a solicitor at the IP law firm EIP, pointed out: “This is not the first time we have seen a u-turn from a large tech company. In 2023, Zoom suffered a backlash after amending its policy to include the right to use user data to train its AI models.

“Hopefully LinkedIn (and other tech companies) will think twice before quietly opting-in everyone without consent.”

In Europe, the EU AI Act is likely to be responsible for LinkedIn's decision not to train its models on users' data.

"LinkedIn can't train its AI on the personal data of EU users because it's restricted from doing so by law," Paul Bischoff, Consumer Privacy Advocate at Comparitech, told The Stack. "The EU AI Act requires LinkedIn to get additional opt-in, informed consent from EU users for such purposes. That requirement doesn't exist in most other countries."

The advice for enterprises remains clear: be careful when posting data on social media, particularly when you know it will be used to generate AI outputs.

Bischoff added: "Enterprises should be wary of what they and their employees post on LinkedIn because that info becomes part of the corpus of data used to train LinkedIn's AI. The AI might attempt to draw conclusions about your company based on the posts and profiles of companies and their employees and present those conclusions to third parties."