IBM’s z17 mainframe is here and, as teased, Big Blue is going big on AI – with an on-chip AI coprocessor to reduce latency for “in-transaction inferencing” and the ability to support small and large language models.

Each system can be configured with up to 32 of IBM's Telum II processors, which were revealed last year. The z17's inference capabilities come from IBM's second-generation on-chip AI accelerator, and a new "Spyre" Accelerator, delivered as a PCIe card, is set to add further capabilities when it launches at the end of this year.

Tina Tarquinio, Chief Product Officer at IBM Z, told The Stack that the z17 was "going in the opposite direction" to large GPU farms on energy use, providing "more AI capability using less energy": the z17's energy demand is down 17% from the z16.

"Organizations can embed AI directly into business processes and existing IBM Z applications," Big Blue told the airlines, banks, insurers and governments that still run heavily on mainframe applications.


The z17 takes the same form factor as the z16: a 19-inch rack mount design that can scale from a single frame up to four frames.

The new IBM mainframe boasts up to 40% more shared cache per core, on-chip AI acceleration that adds more than 24 TFLOPS of processing power shared by all cores on the chip, and support for up to 64 TB of memory. "Cloud elasticity requirements are covered by IBM z17 granularity offerings, including capacity levels, Tailor Fit Pricing (for unpredictable, high spiking, and business-critical workloads), and Capacity on Demand (CoD)," Big Blue told customers at today's z17 launch.

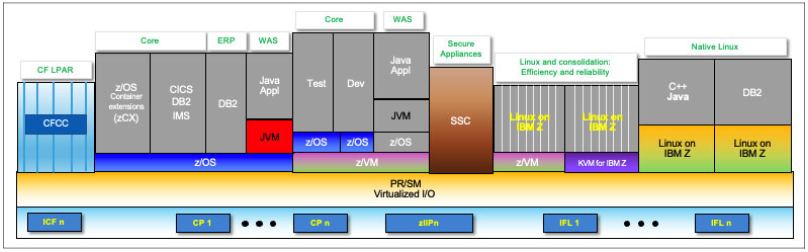

The mainframes support the z/VM 7.4 and z/VM 7.3 hypervisors, as well as the KVM hypervisor for IBM Z via Linux distributions from Canonical, Red Hat and SUSE, IBM said.


These capabilities will give more on-site control to those who need to keep their data close, Elpida Tzortzatos, AI CTO at IBM Z, told The Stack, as the company attempts to convince those eager to get off the mainframe to stay put.

She said many IBM clients can't move data to the cloud because of "data residency and sovereignty regulations", which highlights the need for on-site GenAI processing, "especially when it comes to real time in-transaction [inferences] for high volume transactional workloads, which, by the way, we know better than anybody else in the industry."


Referring to the use of AI to detect fraud in financial transactions, Tarquinio said "what we've introduced with Spyre and Telum allows us to run multiple models with improved accuracy and fewer false positives … [so clients can] focus more time on that nefarious activity."

Development of the z17 was informed by 2,000 hours of client input and also focussed on consistency: the mainframe can deliver a one-millisecond inference response time no matter how many transactions it is processing, Tzortzatos claimed.

“Now clients can use not only predictive AI models but large language and coder models where they can extract new features or insights from unstructured data,” she said.

The new mainframe is also "fully participating in hybrid cloud", with IBM set to expand its Red Hat OpenShift capabilities on the platform to include OpenShift Virtualization, OpenShift AI and confidential containers.

Having recently annihilated one challenger in court, and now the only mainframe-maker in the world, IBM has a clear run at a fascinating market.

If The Stack's sources are accurate, Big Blue may sell only single-digit numbers of new mainframes in the UK each year (about seven or eight in a normal year without a new model). But licences for Big Iron remain Big Business for Big Blue: back in 2023, IBM CFO Jim Kavanaugh said they account for "about 30% of our software book of business" and remain a "very important value vector for IBM… It carries a very high profit and cash generation that provides financial flexibility for us to reinvest," he told investors.
