Updated: 15:20 BST with comments from Bob Sutor, Ali El Kaafarani and Ashley Montanaro.

A new quantum chip from Google, code-named “Willow”, performed a computation so fast that it “lends credence to the notion that quantum computation occurs in many parallel universes, in line with the idea that we live in a multiverse,” the company suggested this week.

It was referring to physicist David Deutsch’s theory of parallel universes. Deutsch, a visiting professor of physics at Oxford University, posited in his seminal 1997 book “The Fabric of Reality” that there are many universes ‘parallel’ to the one around us, and that they are detectable through quantum interference.

(The Stack has contacted Professor Deutsch for comment.)

In a paper in Nature and an associated blog post, Google said, to summarise crudely, that it had achieved a significant milestone in quantum computing: reducing errors as the number of qubits increased, through a combination of advanced quantum error correction techniques and improved hardware.

CNOTs and superpositions: A quantum refresher

Classical computing deploys “bits” that use the 0 and 1 vocabulary of binary code. Quantum computers use “qubits”: two-state quantum-mechanical systems that exploit the ability of quantum particles to be in “superposition”, in effect two different states at the same time.

As IBM Research’s Edwin Pednault puts it: “A qubit can represent both 0 and 1 simultaneously – in fact, in weighted combinations; for example, 37%-0, 63%-1. Three qubits can represent 2^3, or eight values simultaneously: 000, 001, 010, 011, 100, 101, 110, 111; 50 qubits can represent over one quadrillion values simultaneously.”
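To make Pednault’s arithmetic concrete, here is a minimal Python sketch. His percentages are measurement probabilities; the qubit’s state itself holds amplitudes whose squared magnitudes give those probabilities (real, non-negative amplitudes are chosen here for simplicity). The last function also hints at why Ashley Montanaro, quoted later in this piece, puts the classical-simulation limit at around 40 to 50 qubits: a brute-force state-vector simulation must store every amplitude. The 16 bytes per amplitude (double-precision complex numbers) is an assumption, and cleverer simulation methods can do better for some circuits. All function names are illustrative.

```python
import math
from itertools import product

# Pednault's "37%-0, 63%-1" qubit: the amplitudes are the square roots of
# those probabilities (real, non-negative amplitudes chosen for simplicity).
alpha = math.sqrt(0.37)  # amplitude of |0>
beta = math.sqrt(0.63)   # amplitude of |1>

def basis_states(n: int) -> list[str]:
    """The 2**n classical bit patterns an n-qubit register spans."""
    return ["".join(bits) for bits in product("01", repeat=n)]

def amplitude_count(n: int) -> int:
    """Number of complex amplitudes needed to describe n qubits."""
    return 2 ** n

def statevector_bytes(n: int, bytes_per_amplitude: int = 16) -> int:
    """Memory for a brute-force classical simulation, assuming each amplitude
    is a double-precision complex number (two 8-byte floats)."""
    return amplitude_count(n) * bytes_per_amplitude

print(basis_states(3))      # the eight values 000 through 111
print(amplitude_count(50))  # 1125899906842624, just over one quadrillion
for n in (30, 40, 50):
    # prints 0.015625, 16.0 and 16384.0 TiB respectively
    print(n, statevector_bytes(n) / 2**40, "TiB")
```

Each extra qubit doubles the memory, which is why the jump from 40 to 50 qubits takes a brute-force simulation from a large server cluster’s worth of memory to far beyond it.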

Whilst classical computing circuits use binary logic gates such as AND, OR, NOT and XOR, on which higher-level instructions and, ultimately, languages like Java and Python are built, quantum computers use different kinds of gates, such as CNOT and Hadamard gates.
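The two quantum gates named above can be illustrated with a toy state-vector simulator, a minimal sketch that is not how real hardware or Google’s software works. A Hadamard puts one qubit into an equal superposition of 0 and 1; a CNOT then flips a second qubit only when the first is 1. Together they produce an entangled “Bell state” in which only the outcomes 00 and 11 can ever be measured. The function names and the indexing convention are hypothetical.

```python
import math

# Toy two-qubit state vector (illustrative only), indexed by the bit string
# q0q1: index 0 -> |00>, 1 -> |01>, 2 -> |10>, 3 -> |11>.
State = list[complex]

def hadamard_q0(state: State) -> State:
    """Hadamard on q0: |0> -> (|0> + |1>)/sqrt(2), |1> -> (|0> - |1>)/sqrt(2)."""
    s = 1 / math.sqrt(2)
    a00, a01, a10, a11 = state
    return [s * (a00 + a10), s * (a01 + a11), s * (a00 - a10), s * (a01 - a11)]

def cnot_q0_to_q1(state: State) -> State:
    """CNOT with q0 as control and q1 as target: flip q1 whenever q0 is 1."""
    a00, a01, a10, a11 = state
    return [a00, a01, a11, a10]

def probabilities(state: State) -> dict[str, float]:
    """Measurement probability of each two-bit outcome."""
    return {f"{i:02b}": abs(a) ** 2 for i, a in enumerate(state)}

start = [1 + 0j, 0j, 0j, 0j]              # both qubits start in |0>
bell = cnot_q0_to_q1(hadamard_q0(start))  # (|00> + |11>)/sqrt(2)
print(probabilities(bell))  # about 0.5 for '00' and '11', 0 for '01' and '10'
```

Unlike AND or OR, both gates are reversible, which is a general property of quantum gates.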

Willow’s hardware and error-correction improvements resulted in some extraordinary performance as measured against Google’s random circuit sampling (RCS) benchmark: Willow “performed a computation in under five minutes that would take one of today’s fastest supercomputers 10²⁵ or 10 septillion years,” claimed Google, adding that “if you want to write it out, it’s 10,000,000,000,000,000,000,000,000 years…”
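Google’s figure is easy to sanity-check. The sketch below, which assumes a 365.25-day year and rounds “under five minutes” up to five, confirms that 10²⁵ (10 septillion) written out matches the number Google quotes, and computes the implied ratio of classical to quantum run time. That ratio is only as reliable as Google’s own estimate of the classical cost.

```python
# Assumption: a 365.25-day (Julian) year for the minutes-per-year conversion.
MINUTES_PER_YEAR = 365.25 * 24 * 60

classical_years = 10 ** 25  # Google's estimate for a classical supercomputer
willow_minutes = 5          # "under five minutes", rounded up

written_out = f"{classical_years:,}"
speedup = classical_years * MINUTES_PER_YEAR / willow_minutes

print(written_out)       # 10,000,000,000,000,000,000,000,000
print(f"{speedup:.1e}")  # 1.1e+30, i.e. roughly 10^30 times faster
```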

So, should you worry about your Bitcoin, encrypted communications, or anything else that could in theory be brute-forced by this kind of power?

Not yet, is the short answer. Willow’s performance was demonstrated in an extremely limited way.

“The next challenge for the field is to demonstrate a first ‘useful, beyond-classical’ computation on today's quantum chips that is relevant to a real-world application… we’ve run the RCS benchmark, which measures performance against classical computers but has no known real-world applications” – Google, December 9, 2024.

Dr Bob Sutor, a former IBM Quantum leader, told The Stack: "There’s no question that they have made some important hardware progress, and people should now compare their specifications to where other superconducting qubit vendors are. I believe Google can and should begin to show progress on practical problems rather than those with only benchmarking value. It would be interesting to see how their system performs on more modern and efficient quantum error correcting codes."

"All in all, good work and make it useful!" he added.

What is the RCS benchmark?

Google created the RCS benchmark, on which Willow performed so strongly, and first published it in 2019. The company describes it as follows:

"The specific output of RCS benchmarking is an estimate of fidelity, a number between 0 and 1 that characterizes how close the state of the noisy quantum processor is to an ideal noise-free quantum computer implementing the same circuit. Despite the simulation of RCS circuits being beyond the capacity of classical supercomputers, it is possible to obtain an estimate of the fidelity. This is achieved by slightly modifying the circuits to make them amenable to classical computation without inducing a significant change in the value of the fidelity.

The value of fidelity is verified using a technique called patch cross-entropy benchmarking (XEB). For large circuits, this involves dividing the full quantum processor into smaller “patches” and calculating the XEB fidelity for each patch, a computationally feasible task. By multiplying these patch fidelities, an estimation of the overall fidelity of the entire circuit is obtained."
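Google’s description leaves out the formula. The linear XEB estimator published with its 2019 RCS experiment is F = 2ⁿ·⟨P(xᵢ)⟩ - 1, where n is the number of qubits and P(xᵢ) is the ideal, classically computed probability of each bit string the chip actually output. The sketch below is a toy under stated assumptions: instead of simulating a real circuit it draws a random (Porter-Thomas-like) output distribution, models noise with a hypothetical mix of ideal and uniformly random samples, and treats the patch method simply as multiplying per-patch numbers. All function names are illustrative.

```python
import math
import random

def porter_thomas_probs(n_qubits: int, rng: random.Random) -> list[float]:
    """Stand-in for a random circuit's ideal output distribution (assumption):
    normalised squared magnitudes of random complex Gaussian amplitudes."""
    amps = [complex(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(2 ** n_qubits)]
    weights = [abs(a) ** 2 for a in amps]
    total = sum(weights)
    return [w / total for w in weights]

def sample_noisy(probs: list[float], fidelity: float, k: int, rng: random.Random) -> list[int]:
    """Hypothetical noise model: each shot comes from the ideal distribution
    with probability `fidelity`, otherwise it is a uniformly random bit string."""
    n = len(probs)
    ideal = rng.choices(range(n), weights=probs, k=k)
    return [x if rng.random() < fidelity else rng.randrange(n) for x in ideal]

def linear_xeb(probs: list[float], samples: list[int]) -> float:
    """Linear XEB: 2**n times the mean ideal probability of the observed
    bit strings, minus 1. About 1 for a perfect device, about 0 for pure noise."""
    mean_p = sum(probs[x] for x in samples) / len(samples)
    return len(probs) * mean_p - 1

def patch_fidelity(patch_estimates: list[float]) -> float:
    """Patch XEB idea: multiply per-patch fidelities to estimate the whole chip."""
    return math.prod(patch_estimates)

rng = random.Random(7)
probs = porter_thomas_probs(12, rng)  # 4,096 possible bit strings
for f in (1.0, 0.5, 0.0):
    print(f, round(linear_xeb(probs, sample_noisy(probs, f, 20_000, rng)), 2))
print(patch_fidelity([0.9, 0.8, 0.85]))  # about 0.61
```

In this toy model the XEB estimate tracks the injected fidelity, which is the property that makes it usable as a benchmark; the hard part in practice is computing P(xᵢ) for circuits too large to simulate, which is what the patch approach works around.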

“Our arrays of qubits have longer lifetimes than the individual physical qubits do, an unfakable sign that error correction is improving the system overall [and represents the] most convincing prototype for a scalable logical qubit built to date,” said Google in its December 9 blog post.

“With 105 qubits, Willow now has best-in-class performance across the two system benchmarks…. quantum error correction and random circuit sampling” it added. “Our T1 times, which measure how long qubits can retain an excitation — the key quantum computational resource — are now approaching 100 µs (microseconds). This is an impressive ~5x improvement over our previous generation of chips” the company added.
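T1 is conventionally modelled as an exponential decay: the chance that a qubit prepared in its excited state has not yet relaxed after time t is e^(-t/T1). Taking Google’s figure of roughly 100 µs, and assuming the previous generation sat at about a fifth of that (our reading of “~5x”, not a number Google states here), a minimal sketch of what the improvement means:

```python
import math

def excited_population(t_us: float, t1_us: float) -> float:
    """Probability a qubit prepared in |1> is still excited after t_us
    microseconds, assuming simple exponential relaxation with constant T1."""
    return math.exp(-t_us / t1_us)

T1_WILLOW_US = 100.0                # "approaching 100 µs" per Google
T1_PREVIOUS_US = T1_WILLOW_US / 5   # assumption inferred from "~5x improvement"

for t in (1, 10, 50):
    # columns: elapsed time (µs), survival with Willow's T1, with the older T1
    print(t, round(excited_population(t, T1_WILLOW_US), 3),
          round(excited_population(t, T1_PREVIOUS_US), 3))
```

A longer T1 leaves more time to run gates and error-correction cycles before the qubit’s information simply decays away.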

The paper, titled “Quantum error correction below the surface code threshold”, comes weeks after another Google team published a further major paper in Nature, “Learning high-accuracy error decoding for quantum processors”, which demonstrated a transformer-based neural network that “learns to decode… quantum error-correction code”. The AI was trained on synthetic data and helps to correct the significant errors that continue to plague quantum computing.

Alan Ho, CEO of quantum startup Qolab, wrote on LinkedIn:

Good: Google has definitively shown error correction is “possible” on superconducting qubits...

Technically speaking, Willow is the most powerful quantum computer. Even though IBM has larger chips with similar error rates, Google’s connectivity is higher and this gives it higher computational power.

Google’s result cannot be reproduced on other superconducting qubit processors today, which does put Google in the lead...

Bad: it took 5 years to go from 50 qubits to 100 qubits. We need a million qubits for a practical quantum computer. Extrapolating the timeline isn’t very positive.

Figure S1 of the supplementary material shows that there is an order of magnitude difference between the best and worst 2q errors. This indicates that there are challenging fabrication issues with regard to the uniformity of the qubits.

Without uniformity, one cannot scale, because you end up picking up the outliers. You can already see this effect between the 72-qubit chip and the 100-qubit chip.

The error spread goes up, and the median error rates go up. This is bad.

Comparing Google’s 2q error distribution and IBM’s 2q error distribution, they are approximately the same, showing that this uniformity issue has not been solved.
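Ho does not show his extrapolation. Assuming, purely for illustration, that qubit counts keep doubling every five years from today’s roughly 100, the arithmetic for reaching the million qubits he cites looks like this (the function name and defaults are hypothetical):

```python
import math

def years_to_reach(target_qubits: float, current_qubits: float = 100,
                   doubling_years: float = 5.0) -> float:
    """Naive exponential extrapolation: qubit counts double every
    `doubling_years` (assumption based on Ho's 50 -> 100 qubits in 5 years)."""
    doublings = math.log2(target_qubits / current_qubits)
    return doublings * doubling_years

print(round(years_to_reach(1_000_000), 1))  # about 66 years
```

Roughly 13 doublings are needed, hence Ho’s pessimism; a faster doubling rate would shorten the timeline proportionally.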

Google's quantum chip Willow – and fixing errors

For quantum computing to work effectively, qubits need to stay in superposition for the duration of the computation.

But qubits can easily be thrown off by noise (“the central obstacle to building large-scale quantum computers”), which can stem from diverse sources, including disturbances in Earth’s magnetic field, local radiation, cosmic rays, or the influence that qubits exert on each other by proximity.

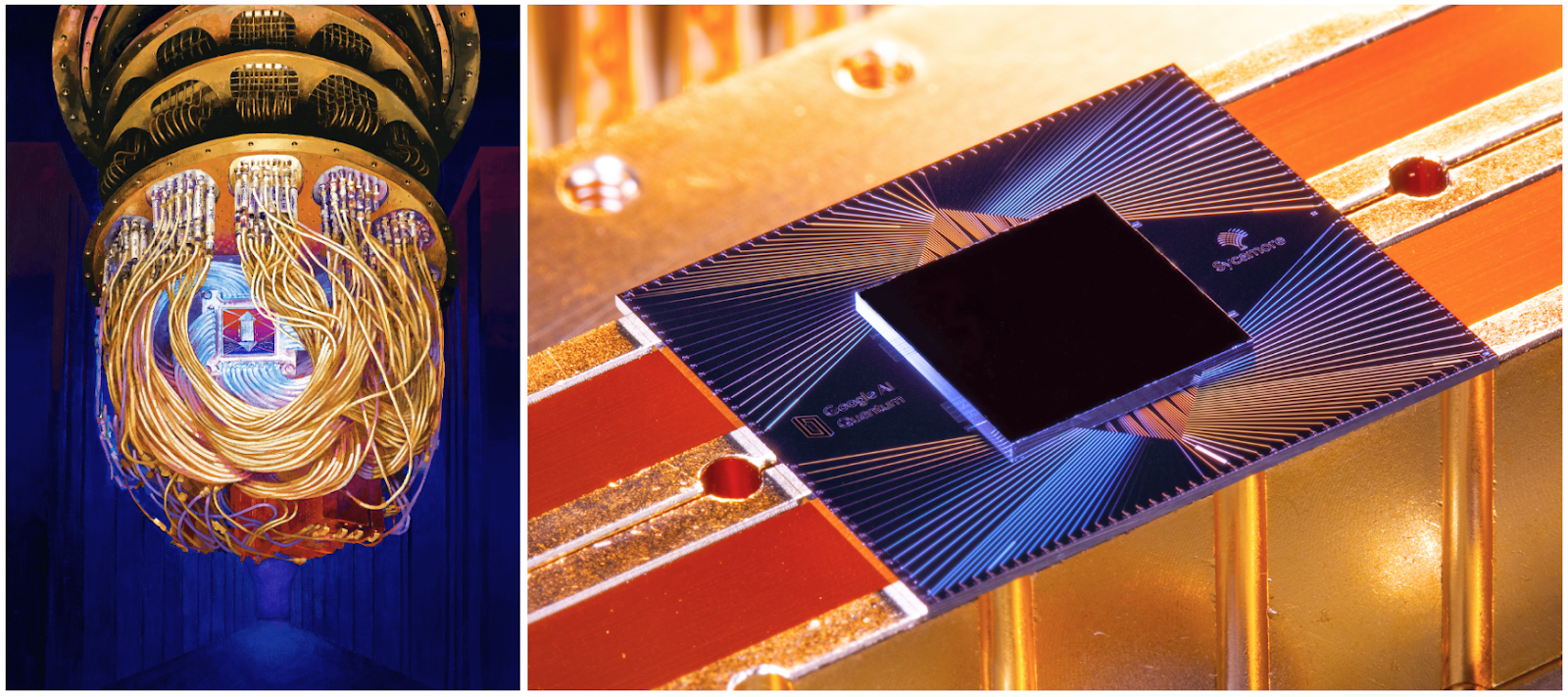

Noise is typically tackled at least in part physically. Signals for configuring and programming a quantum computer come from outside the machine and travel down coaxial cables, where they are amplified and filtered, before reaching the quantum device, whose qubits sit at ~0.015 K (-273.135°C). Noise is minimised by limiting the exposure of the chips and cables to heat and to electromagnetic radiation in all its forms; all sorts of novel mathematical schemes are also used to compensate for it.

Google's newly demonstrated ability to scale up the number of qubits in a quantum computer while reducing, rather than increasing, errors is important.
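A common way to summarise “below threshold” behaviour for the surface code (the code in the title of Google’s paper) is that each time the code distance d grows by two, the logical error rate falls by a roughly constant factor, often written Λ. Errors therefore shrink exponentially while the qubit count grows only quadratically. The sketch below assumes that simple model; Λ and the distance-3 error rate are hypothetical placeholders rather than Google’s measured values, and the qubit count assumes a rotated surface code (d² data qubits plus d² - 1 measure qubits).

```python
def logical_error_per_cycle(d: int, lam: float, eps3: float) -> float:
    """Simple below-threshold model (assumption): every increase of the code
    distance d by 2 divides the logical error per cycle by `lam` (Lambda),
    starting from `eps3` at distance 3. Expects odd d >= 3."""
    steps = (d - 3) // 2
    return eps3 / lam ** steps

def surface_code_qubits(d: int) -> int:
    """Physical qubits in a rotated surface code: d*d data + (d*d - 1) measure."""
    return 2 * d * d - 1

LAMBDA = 2.0  # hypothetical; a value above 1 means operating below threshold
EPS3 = 0.01   # hypothetical distance-3 logical error per cycle

for d in (3, 5, 7, 9):
    # columns: code distance, physical qubits, modelled logical error per cycle
    print(d, surface_code_qubits(d), logical_error_per_cycle(d, LAMBDA, EPS3))
```

With Λ below 1 the same model predicts that larger codes make the logical qubit worse, which is why operating below the threshold is the milestone that matters.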

But as Professor Ashley Montanaro, founder of quantum startup Phasecraft, recently noted in The Stack: "Quantum computers below 40-50 qubits can easily be simulated by their classical counterparts, so it was essential to get beyond this barrier to achieve a meaningful quantum advantage. But now we’re past this stage, gate fidelity is emerging as a more relevant metric. As qubit numbers have increased, so too has the potential to run meaningful algorithms on quantum hardware."

"With this", he added, "comes an increased chance of errors in the computations, due to noise and decoherence. It is therefore more important to perform quantum operations accurately..."

He added today: "Google’s Willow chip represents exciting progress for the quantum industry. As well as demonstrating significant performance improvements in terms of key parameters such as gate fidelities and qubit counts, this chip meets a crucial milestone for the field: scaling of quantum error correction to reduce errors exponentially as the size of the quantum chip grows...

"However, there is still work to be done to make quantum computers truly useful. To get to this goal of practical quantum advantage, we need to focus on developing not only the hardware but also the software that will make full use of the technology, and enable us to use quantum computers to model next-generation materials for batteries and solar cells or optimize our energy grid."

Dr Ali El Kaafarani, founder and CEO of PQShield, added that "Willow may be an experimental chip for now, but whoever builds a quantum computer that harnesses this power will have a game-changing cybersecurity and geopolitical advantage, and almost certainly won’t publicly announce their breakthrough when they do...

"This announcement from Google should serve as a reminder of why NIST has said RSA will be deprecated by 2030 and the NSA is urging some enterprises to implement PQC [post-quantum cryptography] as soon as 2025."
