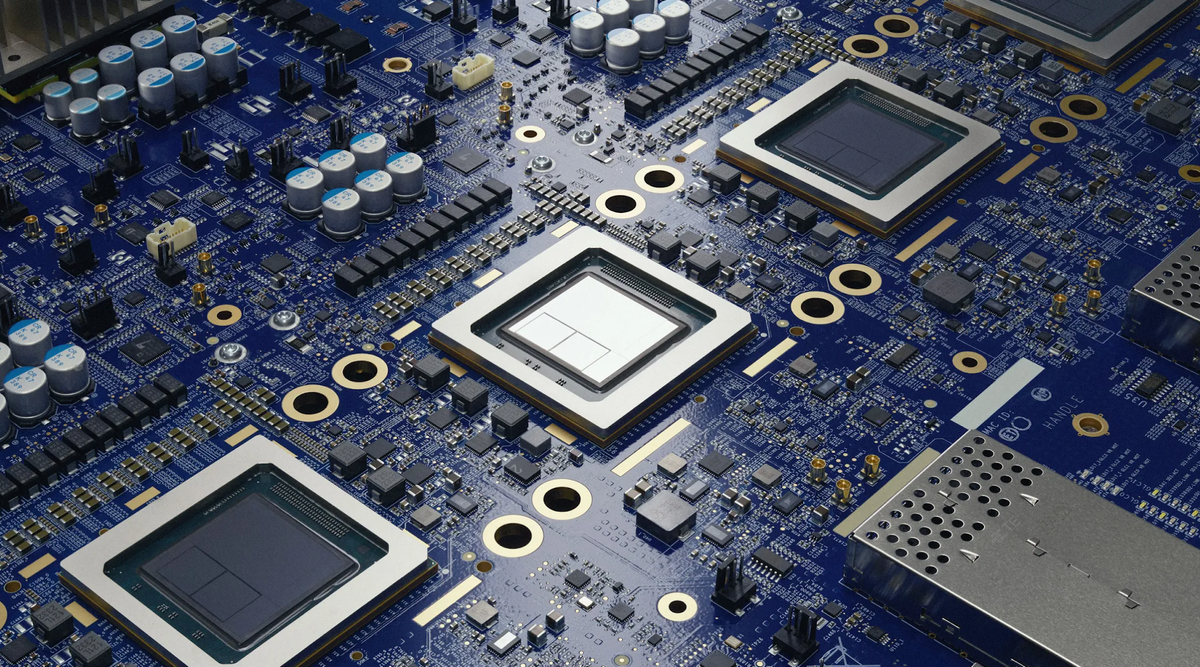

Three generations of Tensor Processing Units (TPUs) with layouts designed by Google DeepMind's chip-design AI have now been deployed in data centres across the world.

In 2021, Google first unveiled an AI capable of designing the "floorplan" of TPUs, which are AI accelerator chips.

Now it has released an addendum to its original Nature paper detailing the model's achievements and announcing its new name: AlphaChip.

In a blog post which uses the word "superhuman" no fewer than four times, Anna Goldie and Azalia Mirhoseini wrote: "Our AI method has accelerated and optimized chip design, and its superhuman chip layouts are used in hardware around the world.

"AlphaChip was one of the first reinforcement learning approaches used to solve a real-world engineering problem. It generates superhuman or comparable chip layouts in hours, rather than taking weeks or months of human effort, and its layouts are used in chips all over the world, from data centres to mobile phones."

Google DeepMind (@GoogleDeepMind) posted on September 26, 2024: "Our AI for chip design method AlphaChip has transformed the way we design microchips. ⚡ From helping to design state-of-the-art TPUs for building AI models to CPUs in data centers - its widespread impact can be seen across Alphabet and beyond."

How does AlphaChip work?

"Designing a chip layout is not a simple task," Google notes. Chips are made up of interconnected blocks and layers of components connected by "incredibly thin wires".

Due to "complex and intertwined design constraints" and the "sheer complexity" of the task, it's proven very difficult to automate chip designs.

AlphaChip was designed to approach chip floorplanning as "a kind of game", hence the name echoing AlphaGo and AlphaZero, the DeepMind systems that learned to master Go, chess and shogi.

AlphaChip starts with a blank grid and places one circuit component at a time until all the components are in place. It is then "rewarded" according to the quality of the final layout.
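The placement loop described above can be sketched in a few lines of Python. This is only a toy illustration under stated assumptions, not Google's implementation: the component names, the 4x4 grid, the `total_manhattan` quality score and the `random_policy` stand-in (which picks cells at random instead of using a trained network) are all hypothetical.

```python
import random

def total_manhattan(wires, positions):
    """Toy quality score (an assumption): summed Manhattan distance over two-pin wires."""
    return sum(
        abs(positions[a][0] - positions[b][0]) + abs(positions[a][1] - positions[b][1])
        for a, b in wires
    )

def random_policy(component, positions, free_cells, rng):
    """Stand-in for a learned policy: choose any free cell uniformly at random."""
    return rng.choice(free_cells)

def run_episode(components, wires, grid_size, policy, rng):
    """Place components one at a time on an empty grid; the reward arrives only at the end."""
    free_cells = [(x, y) for x in range(grid_size) for y in range(grid_size)]
    positions = {}
    for component in components:
        cell = policy(component, positions, free_cells, rng)
        free_cells.remove(cell)  # each grid cell holds at most one component
        positions[component] = cell
    reward = -total_manhattan(wires, positions)  # shorter wiring means higher reward
    return positions, reward

# Hypothetical four-block design with three connections.
rng = random.Random(0)
components = ["cpu", "cache", "dma", "io"]
wires = [("cpu", "cache"), ("cache", "dma"), ("dma", "io")]

# Play many "games" and keep the best-scoring layout.
episodes = [run_episode(components, wires, 4, random_policy, rng) for _ in range(200)]
best_positions, best_reward = max(episodes, key=lambda ep: ep[1])
print(best_reward, best_positions)
```

A reinforcement learning system would replace `random_policy` with a model that is updated from the end-of-episode reward, so that later episodes tend to produce better layouts than earlier ones.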

A "novel edge-based" graph neural network lets AlphaChip learn how chip components interact and then generalise these lessons across chips, enabling the model to incrementally improve its work.
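To give a rough feel for what "edge-based" can mean in a graph neural network, here is a minimal sketch in plain Python. It is an assumption-laden illustration rather than AlphaChip's architecture: the function `edge_message_pass`, the random projection matrix `proj`, the feature sizes and the tiny three-block graph are all hypothetical. The idea shown is that each connection gets its own embedding, computed from the two components it joins, and each component is then re-described by averaging the embeddings of its connections.

```python
import math
import random

def edge_message_pass(node_feats, edges, proj, rounds=2):
    """Edge-centric message passing (illustrative only).

    node_feats: dict mapping component name -> feature list of length dim
    edges:      list of (u, v, edge_weight) connections
    proj:       dim x (2*dim + 1) matrix; random here, learned in a real model
    """
    h = {name: list(feats) for name, feats in node_feats.items()}
    edge_emb = []
    for _ in range(rounds):
        # 1) Embed each edge from both endpoint embeddings plus the edge weight.
        edge_emb = []
        for u, v, w in edges:
            inp = h[u] + h[v] + [w]
            edge_emb.append(
                [math.tanh(sum(p * x for p, x in zip(row, inp))) for row in proj]
            )
        # 2) Re-embed each node as the mean of its incident edge embeddings.
        new_h = {}
        for name in h:
            incident = [e for (u, v, _), e in zip(edges, edge_emb) if name in (u, v)]
            if incident:
                new_h[name] = [sum(col) / len(incident) for col in zip(*incident)]
            else:
                new_h[name] = h[name]  # isolated nodes keep their embedding
        h = new_h
    return h, edge_emb

# Hypothetical three-block netlist with made-up features.
rng = random.Random(0)
dim = 3
node_feats = {"a": [1.0, 0.0, 0.5], "b": [0.0, 1.0, 0.5], "c": [0.5, 0.5, 1.0]}
edges = [("a", "b", 1.0), ("b", "c", 2.0)]
proj = [[rng.uniform(-1, 1) for _ in range(2 * dim + 1)] for _ in range(dim)]

node_emb, edge_emb = edge_message_pass(node_feats, edges, proj)
print(node_emb)
```

Because the same projection is applied to every edge regardless of which chip the graph came from, a trained version of such a network could in principle carry what it has learned from one design to another.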

"To design TPU layouts, AlphaChip first practices on a diverse range of chip blocks from previous generations, such as on-chip and inter-chip network blocks, memory controllers, and data transport buffers," Google DeepMind wrote.

"This process is called pre-training. Then we run AlphaChip on current TPU blocks to generate high-quality layouts. Unlike prior approaches, AlphaChip becomes better and faster as it solves more instances of the chip placement task, similar to how human experts do.

"With each new generation of TPU, including our latest Trillium (6th generation), AlphaChip has designed better chip layouts and provided more of the overall floorplan, accelerating the design cycle and yielding higher-performance chips."

Does AI make better chips than humans?

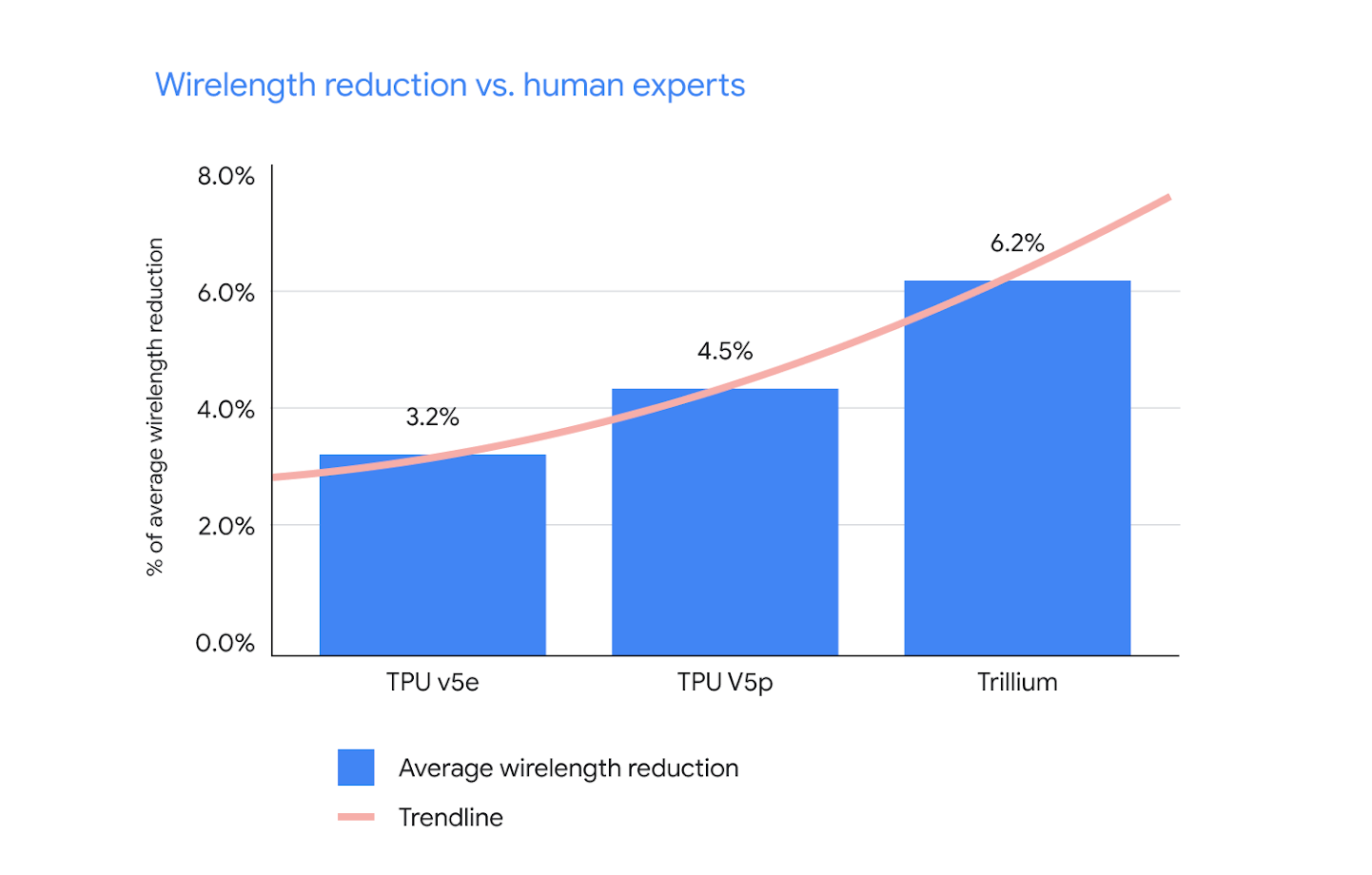

With every generation of TPU, the performance gap between AlphaChip and human chip designers has grown. The graph above shows improvements in wirelength, the total length of the electrical connections between components on a chip. This matters because shorter wires cut delays and power consumption whilst improving overall performance.
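As a worked illustration of how wirelength can be compared between two layouts, the sketch below uses half-perimeter wirelength (HPWL), a common estimate in chip placement that sums the width and height of the bounding box around each group of connected components. The article does not say which wirelength measure the graph uses, so HPWL is an assumption here, and the netlist and coordinates are made up.

```python
def hpwl(nets, positions):
    """Half-perimeter wirelength: sum of bounding-box width + height over all nets."""
    total = 0
    for net in nets:
        xs = [positions[name][0] for name in net]
        ys = [positions[name][1] for name in net]
        total += (max(xs) - min(xs)) + (max(ys) - min(ys))
    return total

# Hypothetical netlist: one three-pin net and one two-pin net.
nets = [("a", "b", "c"), ("c", "d")]

# Two candidate layouts of the same four components (grid coordinates).
spread_out = {"a": (0, 0), "b": (9, 0), "c": (0, 9), "d": (9, 9)}
compact = {"a": (0, 0), "b": (1, 0), "c": (0, 1), "d": (1, 1)}

baseline = hpwl(nets, spread_out)
improved = hpwl(nets, compact)
reduction_pct = 100 * (baseline - improved) / baseline

print(f"baseline={baseline}, improved={improved}, reduction={reduction_pct:.1f}%")
```

Real floorplans must also respect constraints such as component sizes, congestion and density, so a layout cannot simply be packed as tightly as this toy example suggests.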

AlphaChip has also generated other "superhuman chip layouts" for blocks used in chips such as Axion, an Arm-based CPU, and other "unannounced chips" across Alphabet. Other organizations have also adopted DeepMind's approach, including MediaTek, which used AlphaChip to make its own chips and improve their size, power and performance.

"AlphaChip is just the beginning," Google wrote. "We envision a future in which AI methods automate the entire chip design process, dramatically accelerating the design cycle and unlocking new frontiers in performance through superhuman algorithms and end-to-end co-optimization of hardware, software, and machine learning models. We are excited to work together with the community to close the loop between AI for chip design and chip design for AI."