What can enterprises learn from Gandalf? That's the question we asked David Haber, CEO and Co-Founder of an AI security firm called Lakera which recently secured $20M of Series A funding to deliver "real-time GenAI security".

We weren't talking about the famous wizard from Lord of the Rings, but an educational game called Gandalf in which players try to trick a large language model into revealing a secret password using prompt injection techniques (attacks in which malicious inputs are used manipulate an AI’s behavior or outputs).

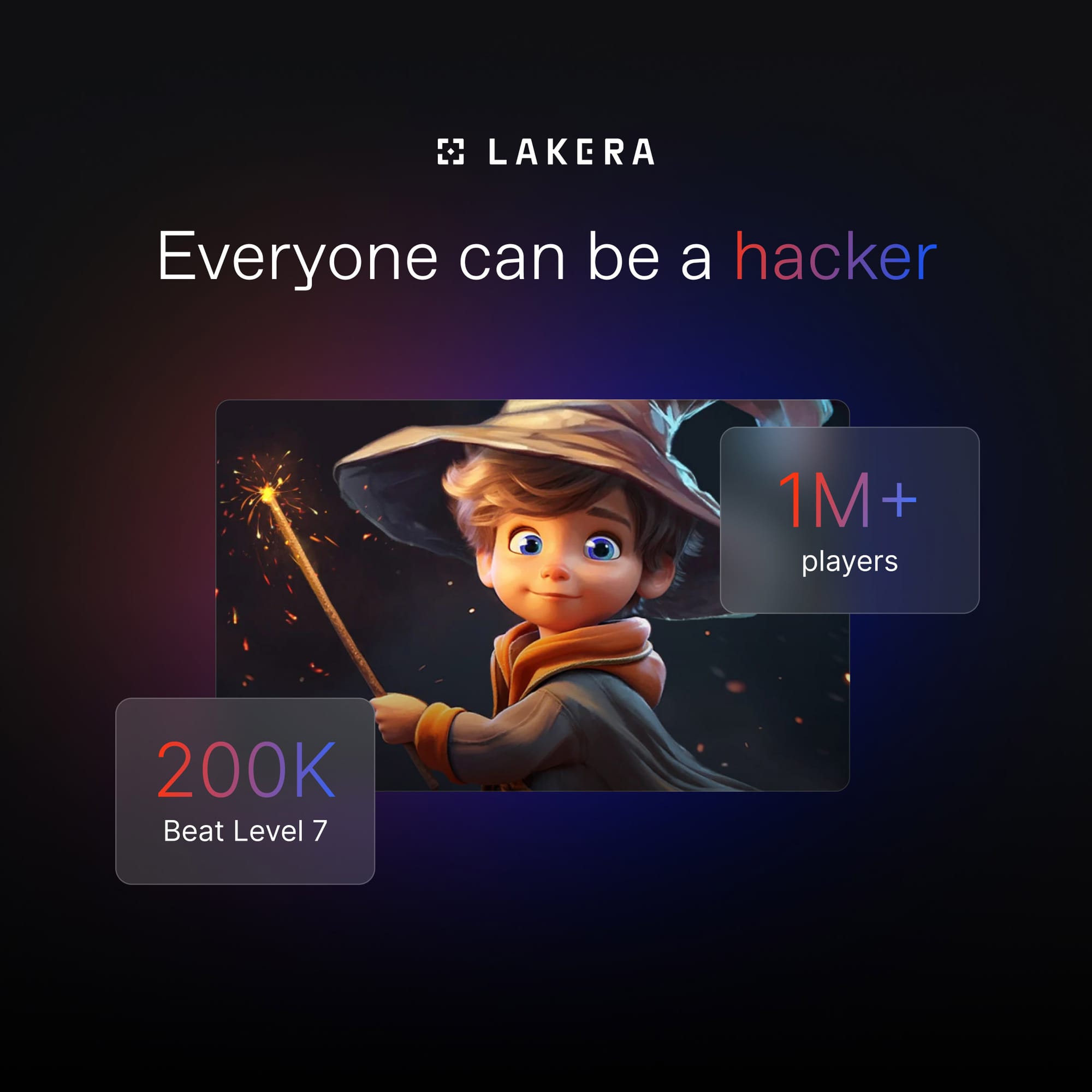

Gandalf has now been played by more than one million people. A total of 200,000 of these players have successfully completed seven levels of the game by manipulating models into taking unintended actions - providing Lakera with deep insights into GenAI hacking.

"The way to think about Gandalf is that it's really the world's largest red team or a live stream of the world's creativity around how to tamper with Gen AI systems," Haber tells The Stack. "When Anthropic releases a new paper describing a new vulnerability or type of attack targeting GenAI models, people are trying it on Gandalf just minutes after publication."

"It's a beautiful illustration of just how accessible hacking has become and also how easy it is to manipulate Gen AI systems," he adds.

Gandalf was originally going to be called Yoda until someone sent a meme around the company showing the Lord of the Rings wizard and his famous words: "You shall not pass." The game has proven so popular that it has become one of Lakera's key marketing channels.

"A large portion of our commercial activity is a direct result of people playing Gandalf, realising what their security gaps are, and reaching out to Lakera to protect from this new type of cyber risk."

Prompt action to tackle a growing threat

Haber describes GenAI as the ”biggest technological transformation that we as humans will likely see in our lifetime,” comparing the breakneck speed of adoption with the relatively sluggish rollout of the cloud. To recap: ChatGPT launched just 20 months ago. Roughly one year after it was unleashed on the world, more than 90% of Fortune 500 companies were using GenAI.

"We're only starting to understand what the threat landscape around AI - but we know existing security solutions and tools don't address these new risks," the Lakera boss adds. "So we are in a catch-up race in which technology is adopted at speed and we need to protect enterprise from being exposed to major risks."

GenAI can make corporate data available to vast numbers of people - then let bad actors maliciously access that information simply by typing a cleverly-worded comment.

Lakera's customers have also reported that their chatbots have revealed internal data, harassed customers, provided incorrect advice, behaved unpredictably or shared data with the wrong people both inside and outside the organisation.

"Today, anyone that can speak can also hack," Haber says.

He highlights two "major concerns" for enterprises. The first is prompt attacks, which are enabled by a "whole universe of phrases that can be used to trick the model into taking unintended actions".

"It's been shown that these Gen AI models are essentially Turing Complete machines, which means attackers can make them do absolutely anything if they discover the right set of words and terminology," he warns.

The second threat is data leakage, in which GenAI models leak sensitive customer or corporate data.

He adds: "As we connect these models to all corporate sensitive data and humans input credit card information or names and addresses, organisations must ensure it doesn't leak into the world or even the model provider. That's a major concern."

READ MORE: "It's 100 times more productive": Walmart reveals impact of GenAI

Lessons for enterprises

The key takeaway from Gandalf is that security leaders should take the GenAI threat seriously and prioritise mitigation using defensive products that are up to the job.

"Security solutions need to be alive and breathing - just like the underlying technology of GenAI," Haber advises. "They need to evolve continuously."

Solutions that rely on rules "won't cut it", he adds, because they're "too simplistic for the complexity of data" that models process. Organisations should also avoid using a GenAI model to secure another GenAI model, because they will both fall victim to the same exploits or attacks.

Instead, Haber advises enterprises to build custom models that are architecturally different from those they guard and ensure they are continually updated with the latest data and threat intelligence.

"GenAI poses completely new risks that traditional security does not address," Haber concludes. "That means organisations have blind spots and we're in a race to protect enterprises from these major emerging risks."

The threat of GenAI is certainly great. Yet organisations need to face it, Frodo-style, or face the consequences. For, in the words of Gandalf himself: “It’s the small things, everyday deeds of ordinary folk that keeps the darkness at bay.”