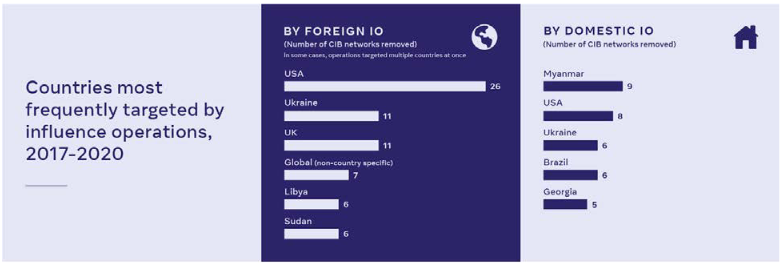

The UK is among the top three targets of threat actors running "Influence Operations" (IO), Facebook revealed today, in a detailed new threat report on its takedowns from 2017-2020 of "coordinated inauthentic behaviour".

These included the 2019 identification and takedown of multiple pages, groups and accounts that "represented themselves as far-right and anti-far-right activists, frequently changed Page and Group names, and operated fake accounts to engage in hate speech and spread divisive comments."

These accounts, which targeted the UK, were connected despite their apparently opposed political views and "frequently posted about local and political news including topics like immigration, free speech, racism, LGBT issues, far-right politics, issues between India and Pakistan, and religious beliefs including Islam and Christianity," one Facebook case study noted.

The US meanwhile joins Iran, Myanmar, Russia and Ukraine in the top five sources of "Coordinated Inauthentic Behavior (CIB)", Facebook said, highlighting its work to disrupt "white supremacy, militia and conspiracy groups who spoke with their own voice yet engaged in aggressive, adversarial adaptation against our enforcement."

Those seeking to influence public perception are pivoting hard from "widespread, noisy deceptive campaigns to smaller, more targeted operations" meanwhile, Facebook noted in the report, with sophisticated IO actors also getting "significantly" better at hiding their identities through improved technical obfuscation and OpSec.

Such OpSec improvements come with significant trade-offs around engagement, Facebook noted, introducing friction into bad actors' operations: "Using one-time-use or ‘burner’ accounts makes it difficult to gain followers or have people see your posts at all. Consistently hiding who you are and only resorting to copying other people’s existing content fails to build a distinct voice among authentic communities."

https://twitter.com/benimmo/status/1397528601193496581

With over 2.6 billion users yet a team of just 200 working on this problem, Facebook's IO Threat Intelligence Team focuses on uncovering and understanding "high-fidelity signals from the most sophisticated networks we disrupt" and relies heavily on machine learning to "identify behaviors and technical 'signatures' that are common for a particular threat actor, as well as some tactics that are common across multiple influence operations. We then work to automate detection of these techniques at scale", the report highlights.

(With fake accounts still at the heart of much IO, this means finding and blocking millions daily: "This makes running these large networks as part of successful IO much more difficult and has been an important driver in the IO shift from wholesale to retail operations.")

Facebook -- which said it has identified and removed over 150 networks for "violating our policy against CIB" -- also noted growing use of "witting and unwitting proxies" in IO campaigns. (It defines IO as "coordinated efforts to manipulate or corrupt public debate for a strategic goal.")

Defining what is and what isn't IO is no simple task. As Facebook notes: "Over the past four years, threat actors have adapted their behavior and sought cover in the gray spaces between authentic and inauthentic engagement and political activity." As a result, "it wouldn’t be proportionate or effective to use the same policy to enforce against a foreign government creating fake accounts to influence an election in another country as we do against a political action committee that isn’t fully transparent about the Pages it controls. Doing so would force us to either over-enforce against less serious violations, or under-enforce against the worst offenders."

It breaks IO down broadly into two categories: those undertaken by state actors, including military, intelligence, and cabinet-level bodies, and private IO run by everyone from "hacktivists" through to financially-motivated 'troll farms', commercial entities, political parties and campaigns, and special interest or advocacy groups.

Among the examples is a network targeting the US and operated by individuals in Mexico that "posted in Spanish and English about topics like feminism, Hispanic identity and pride, and the Black Lives Matter movement. Some of these fake accounts claimed to be associated with a nonexistent marketing firm in Poland. Others posed as Americans supporting various social and political causes and tried to contact real people to amplify their content."

Facebook notes: "As expected, improved operational security made it challenging to determine who was behind this operation using on-platform evidence. In fact, we did not see sufficient evidence to conclusively attribute this operation beyond the individuals in Mexico who were directly involved. However, following our public disclosure of the operation, the FBI further attributed the activity to Russia’s Internet Research Agency."

China lags Russia significantly in sophistication, Facebook suggests, noting that much of China's IO comprised "strategic communication using overt state-affiliated channels (e.g. state-controlled media, official diplomatic accounts) or large-scale spam activity that included primarily lifestyle or celebrity clickbait and also some news and political content. These spam clusters operated across multiple platforms, gained nearly no authentic traction on Facebook, and were consistently taken down by automation."