The European Union's historic AI Act comes into force today. Termed the "world's first comprehensive AI law," it is likely to set the tone for similar policies across the globe.

The goal is "to ensure that AI systems used in the EU are safe, transparent, traceable, non-discriminatory, and environmentally friendly."

The legislation works on the principle that "AI systems should be overseen by people, rather than by automation, to prevent harmful outcomes."

The EU Parliament also notes that its priorities include establishing a "technology-neutral, uniform definition for AI that could be applied to future AI systems."

The AI Act applies to all AI providers, deployers, importers, distributors, and product manufacturers operating within the EU, as well as to those outside the region if their system's output is intended to be used in the EU.

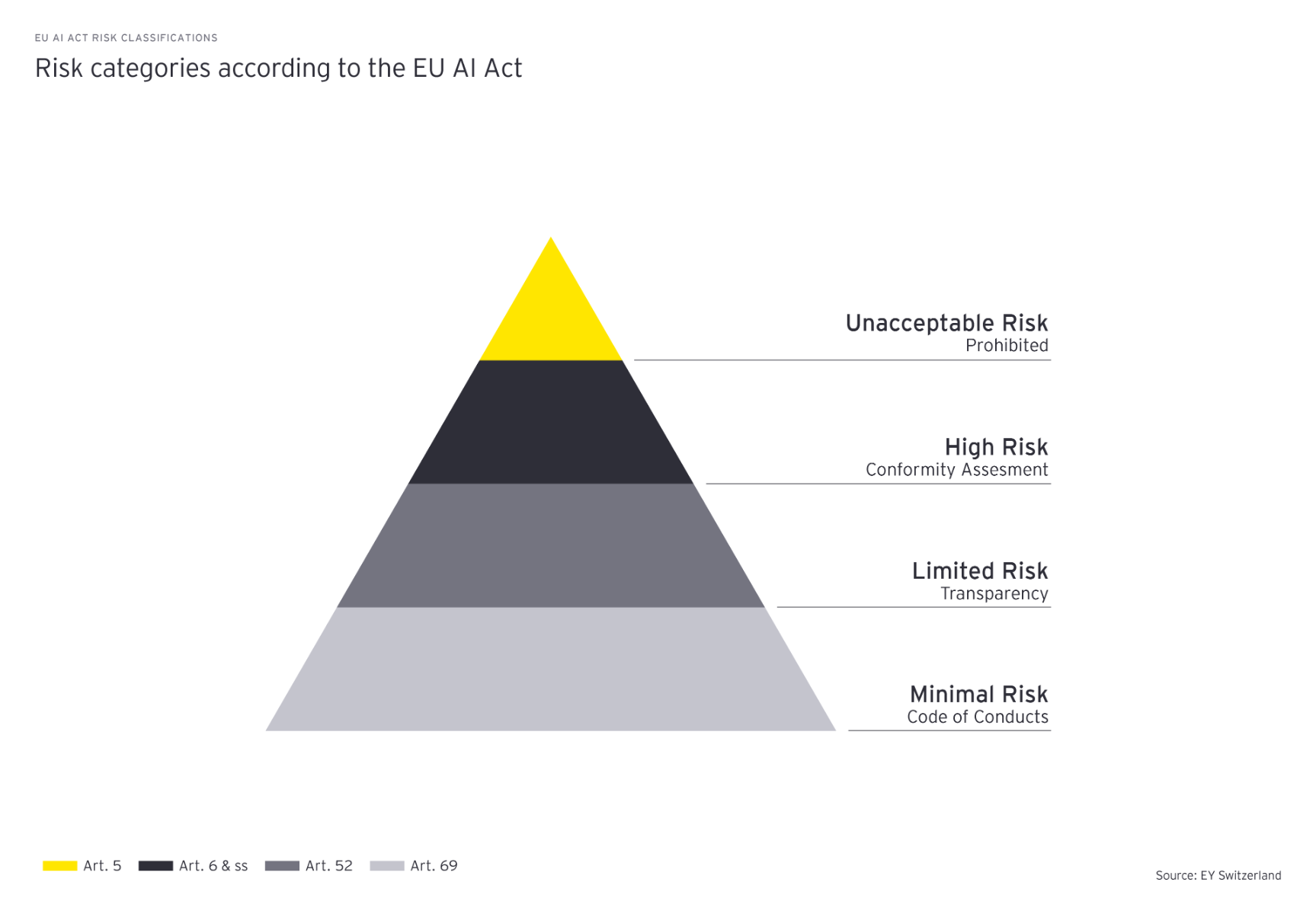

Obligations for providers and users will depend on the level of "risk" posed by the artificial intelligence, with the majority of obligations falling on developers of high-risk AI.

Systems that pose an "unacceptable risk" will be banned outright, with limited exceptions such as law enforcement's use of biometric identification. They include the following:

- Cognitive behavioural manipulation of people or specific vulnerable groups: for example, voice-activated toys that encourage dangerous behaviour in children

- Social scoring: classifying people based on behaviour, socioeconomic status or personal characteristics

- Biometric identification and categorisation of people

- Real-time and remote biometric identification systems, such as facial recognition


Under the legislation, AI systems that negatively affect safety or fundamental rights will be considered high risk. These are divided into two categories. The first covers systems used in products falling under the EU’s product safety legislation, such as toys, aviation, cars, medical devices and lifts.

The second category covers specific use cases (listed below), which will need to be registered in an EU database:

- Management and operation of critical infrastructure

- Education and vocational training

- Employment, worker management and access to self-employment

- Access to and enjoyment of essential private services and public services and benefits

- Law enforcement

- Migration, asylum and border control management

- Assistance in legal interpretation and application of the law

High-risk systems will be assessed before being placed on the market and throughout their lifecycle. Under the EU AI Act, "people will have the right to file complaints about AI systems to designated national authorities."

LLM chatbots will not be classified as high-risk, but will have to comply with transparency requirements and EU copyright law. AI systems classified as posing minimal risk face no mandatory obligations, although providers are encouraged to follow voluntary codes of conduct.

Penalties for non-compliance are severe. Fines range from €7.5 million or 1% of a company's global annual turnover up to €35 million or 7%, whichever is higher, depending on the severity of the infringement.
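To make the penalty arithmetic concrete, here is a minimal Python sketch of how a maximum fine could be worked out. It assumes the three tiers in Article 99 of the final text (€35 million or 7% for prohibited practices, €15 million or 3% for most other obligations, €7.5 million or 1% for supplying incorrect or misleading information), with the higher figure applying to most companies and the lower one to SMEs and start-ups. The function and tier names are hypothetical, and the results are upper limits, not the fines a regulator would actually impose.

```python
# Hypothetical illustration of the AI Act's fine caps (Article 99 tiers, assumed).
# Each tier maps to (fixed cap in EUR, percentage of worldwide annual turnover).
FINE_TIERS = {
    "prohibited_practice": (35_000_000, 7),
    "other_obligation": (15_000_000, 3),
    "incorrect_information": (7_500_000, 1),
}


def max_fine_eur(tier: str, global_turnover_eur: int, is_sme: bool = False) -> int:
    """Return the maximum fine in whole euros for a given infringement tier.

    Most companies: the higher of the fixed cap and the turnover-based cap.
    SMEs and start-ups: the lower of the two.
    """
    fixed_cap, percent = FINE_TIERS[tier]
    # Integer arithmetic avoids floating-point rounding; floors to whole euros.
    turnover_cap = global_turnover_eur * percent // 100
    if is_sme:
        return min(fixed_cap, turnover_cap)
    return max(fixed_cap, turnover_cap)


if __name__ == "__main__":
    # A firm with €1bn turnover engaging in a prohibited practice:
    # 7% of turnover (€70m) exceeds the €35m fixed cap, so €70m applies.
    print(max_fine_eur("prohibited_practice", 1_000_000_000))
```

For a smaller firm with €100 million in turnover, 7% would be only €7 million, so the €35 million fixed cap becomes the ceiling; if that firm qualified as an SME, the lower €7 million figure would apply instead.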

According to guidance issued by EY: "Transition periods for compliance will subsequently be imposed with companies having 6 months to adhere to requirements for prohibited AI systems, 12 months for certain General Purpose AI requirements, and 24 months to achieve full legislative compliance."

Today, the Artificial Intelligence Act comes into force.

Europe's pioneering framework for innovative and safe AI.

It will drive AI development that Europeans can trust.

And provide support to European SMEs and startups to bring cutting-edge AI solutions to market.

Ursula von der Leyen (@vonderleyen), August 1, 2024

Industry opinions on the EU AI law

We asked industry leaders for their thoughts about the world's first AI law. While most argued it was well-intentioned, they also expressed doubts about whether it could solve the sector's most pressing concerns.

Peter van der Putten, Head of AI Labs at Pegasystems and Assistant Professor of AI at Leiden University, said the AI Act "provides a strong framework for regulating AI systems in a number of different categories based on their purpose and risk to do harm – unacceptable, high, intermediate level and low-level risk applications."

Van der Putten dismissed the argument that tighter regulations could "harm or even stop the innovative use of AI".

"That is not the case," he said. "To ensure that AI is ethical, trusted and only used for good purposes, regulation is necessary."

He advised businesses to identify which of their AI use cases fall into the high-risk category and to introduce tighter controls, governance and documentation covering accountability, oversight, accuracy and bias testing, privacy, and transparency. Leaders running systems used for credit risk decisioning, HR recruitment, or risk-based pricing in life and health insurance must be particularly cautious and thorough in their compliance.

However, regulators can only help the industry travel part of the distance, and it is easy for them to go too far.

"Legislation isn’t a standalone solution," Steve Bates, Chief Information Security Officer at Aurum Solutions, told The Stack.

"Many of the act’s provisions don’t come into effect until 2026, and with this technology evolving so rapidly, legislation risks becoming outdated by the time it actually applies to AI developers," he added.

Eleanor Lightbody, CEO at Luminance, echoed the sentiment and said: "A one-size-fits-all approach to AI regulation risks being rigid and, given the pace of AI development, quickly outdated."

However, Lightbody did commend the Act for striking a balance between setting clear guidelines for acceptable uses and preventing harm.

"With the passing of the Act, all eyes are now on the new Labour government to signpost the UK’s intentions for regulation in this crucial sector," she added.

"Implementing a flexible, adaptive regulatory system will be key, and this involves close collaboration with leading AI companies of all sizes."

Raj Koneru, Founder and CEO at Kore.ai, advised that enterprises "should focus on implementing technologies that prioritise transparency and fairness."

"Any solutions they build must have guardrails that guide the behaviour and responses of AI systems. To eliminate hallucination and deep fakes and ensure the factual integrity of information, over-reliance on pure AI models trained without human supervision needs to be avoided," he said.

Koneru also said that models should be improved through a "human-in-the-loop" process. Google describes this as "a collaborative approach that integrates human input and expertise into the lifecycle of machine learning and artificial intelligence systems".

Dr Ellison Anne Williams, founder and CEO of secure AI and privacy tech provider Enveil, also told us: "The EU's AI Act is another significant milestone in promoting the responsible, safe and secure adoption and implementation of AI across organisations and industries. It also emphasises the importance of data privacy in shaping the future of AI innovation."