A software tool that lets users deploy AI to interact with SQL databases has a CVSS 10 prompt injection flaw, security researchers at JFrog warned.

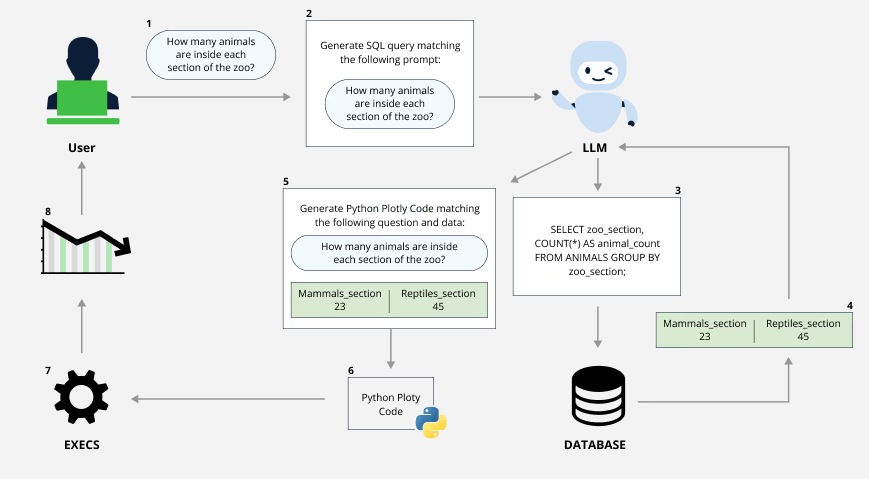

Vanna.AI is a Python-based RAG framework for developers wanting to let application users ask a database questions using natural language.

The vulnerability has been allocated CVE-2024-5565.

The bug gives an attacker remote code execution (RCE) capabilities in the text-to-SQL interface product, Nasdaq-listed JFrog’s researchers said.

“When we stumbled upon this library we immediately thought that connecting an LLM to SQL query execution could result in a disastrous SQL injection and decided to look into it” JFrog said in a June 27 paper.

See also: Feds to CIOs: Ask your software vendors for proof of a SQL Injection audit

“What surprised us was part of a different feature of Vanna’s library – the visualization of query results” it added, giving a detailed breakdown.

( CVE-2024-5565, crudely, lets users craft a special question that injects malicious code into the LLM prompt. This code can be disguised as a valid SQL statement but actually contain commands to be executed on the system. The LLM generates code based on the modified prompt, and the library then executes this code using Python's exec function for RCE.)

The library’s maintainer has since added a hardening guide.

(Vanna, the company, is led by a team of three people. It has nearly 9,000 stars on GitHub and 41 contributors.)

In it, Vanna AI’s maintainer noted: “Running vn.generate_sql can generate any SQL. If you're allowing end users to run this function, then you should use database credentials that have the appropriately scoped permissions.

They added: “For most data analytics use cases, you want to use a read-only database user. Depending on your specific requirements, you may also want to use Row-level security (RLS), which varies by database.

Plotly Code

“Running vn.generate_plotly_code can generate any arbitrary Python code which may be necessary for chart creation. If you expose this function to end users, you should use a sandboxed environment...” Vanna AI added.

The guidance in it may look obvious to those with a security mindset.

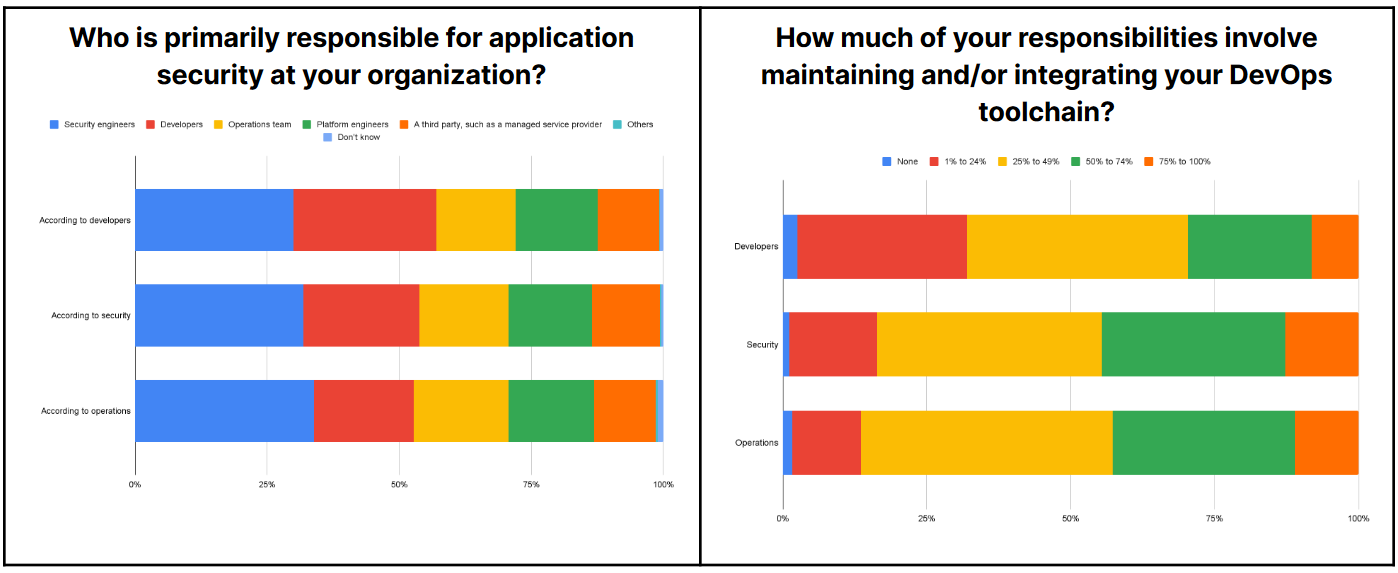

Unfortunately, as is well recognised, most developers do not have this – although a new GitLab DevSecOps report this week gives an indication that this is changing; this year, devs were significantly more likely than security respondents to say that “developers are responsible for security.

Yet with the AI toolchain still immature and security issues of this criticality cropping up, the bug may be one small reminder why despite developers at one bank coming up with over 1,000 generative AI application ideas, none are yet in production. (Other causes also abound.)

The bug was revealed days after cloud security startup Wiz reported an “easy-to-exploit” RCE bug, CVE-2024-37032, in the widely used Ollama (which impressively pushed a patch within four hours of disclosure; downstream customer patching, as expected, has been less impressive.)

JFrog’s Shachar Menasche said CVE-2024-5565 “demonstrates that the risks of widespread use of GenAI/LLMs without proper governance and security can have drastic implications for organisations. The dangers of prompt injection are still not widely well known, but they are easy to execute.”

Users should "not rely on pre-prompting as an infallible defense mechanism and should employ more robust mechanisms when interfacing LLMs with critical resources such as databases or dynamic code generation.”

Multiple RCE bugs in inference servers...

Referring to the Ollama vulnerability this week meanwhile, Wiz's team said: "Over the past year, multiple RCE vulnerabilities were identified in inference servers, including TorchServe, Ray Anyscale, and Ollama. These vulnerabilities could allow attackers to take over self-hosted AI inference servers, steal or modify AI models, and compromise AI applications.

"The critical issue is not just the vulnerabilities themselves but the inherent lack of authentication support in these new tools. If exposed to the internet, any attacker can connect to them, steal or modify the AI models, or even execute remote code as a built-in feature (as seen with TorchServe and Ray Anyscale). The lack of authentication support means these tools should never be exposed externally without protective middleware, such as a reverse proxy with authentication."

Wiz added: "Despite this, when scanning the internet for exposed Ollama servers, our scan revealed over 1,000 exposed instances hosting numerous AI models, including private models not listed in the Ollama public repository, highlighting a significant security gap."