Security researchers say AI systems are being hijacked to run sex bots.

Permiso, an identity security platform provider, was able to track this behaviour after deliberately leaking an AWS key, which was promptly exploited.

By hacking cloud-based LLMs, attackers can “pass all the cost and compute to victim organizations that they've hijacked,” Permiso’s researchers said.

The attackers used their stolen key to gain access to AWS Bedrock – a managed service that lets users run a variety of LLMs in the public cloud.

They used the stolen compute power and LLM access they gained to power sexual roleplay bots accessible to users via a paid subscription – using “common jailbreak techniques to bypass model content filtering.”

Intriguingly, they also used some clever techniques to check if a model was available to them for illegal use – including calling AWS APIs that are “not included in the aws-cli (SDK) or in the API reference guide.”

EC2 and IAM jacking? Old Hat

“For the past few years when a threat actor obtained an exposed long-lived AWS access key (AKIA) they would almost always touch a few AWS services first,” said Permiso. These were chiefly Simple Email Service (SES) for spam campaigns; EC2 for resource hijacking, “mainly for crypto mining”; and IAM services to conduct privilege escalation or persistence techniques.

“In the past six (6) months; however, we have observed a new contender entering the ring as a top-targeted AWS service, Bedrock.”

Attackers move with the money and right now the money is in GenAI - Permiso

Among the techniques they used was calling the GetFoundationModelAvailability API, which is “traditionally called on your behalf when viewing foundational models in the AWS Web Management Console. Attackers are using this API programmatically (not via the web console) with manually formatted requests,” Permiso said.
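For defenders, one way to spot this pattern is to look for Bedrock calls in CloudTrail logs. The following is a minimal sketch, not Permiso's method: it assumes the calls are recorded in a CloudTrail log file with the standard `Records` layout, and the function name `count_bedrock_calls` and the `WATCHED_EVENTS` list are hypothetical choices for illustration. Whether a given call is logged at all depends on your CloudTrail configuration.

```python
import json
from collections import Counter

# Assumption: event names worth flagging; adjust for your own environment.
WATCHED_EVENTS = {"GetFoundationModelAvailability", "InvokeModel"}


def count_bedrock_calls(log_text):
    """Count watched Bedrock events per access key in one CloudTrail log file."""
    records = json.loads(log_text).get("Records", [])
    counts = Counter()
    for record in records:
        # Only consider events emitted by the Bedrock service.
        if record.get("eventSource") != "bedrock.amazonaws.com":
            continue
        if record.get("eventName") not in WATCHED_EVENTS:
            continue
        # Long-lived keys show up with an AKIA-prefixed accessKeyId.
        key_id = record.get("userIdentity", {}).get("accessKeyId", "unknown")
        counts[(key_id, record["eventName"])] += 1
    return counts
```

A sudden spike in these counts for a single long-lived key would be a reasonable trigger for rotating that key and reviewing the account.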

The firm’s two-day experiment generated a $3,500 bill from AWS, largely due to the 75,000 LLM invocations caused by the sex chat hijackers.


AWS told KrebsOnSecurity, which earlier reported on Permiso’s research, that “AWS services are operating securely, as designed, and no customer action is needed. The researchers devised a testing scenario that deliberately disregarded security best practices to test what may happen in a very specific scenario… To carry out this research, security researchers ignored fundamental security best practices and publicly shared an access key on the internet to observe what would happen.”

AWS security expert Nick Frichette noted on X: “These TAs [threat actors] were invoking a number of APIs which only appear in the console. They don't appear in the SDKs, nor the CLI. You might ask, ‘Nick, are these undocumented APIs?’. Well no, they do appear in documentation. For example, if you Google "GetFoundationModelAvailability" you do find some info from AWS… AWS developers commonly package API models as strings that we can extract using regexes.”

But there’s also another way that developers package APIs into the front end – sometimes they will “hard code the APIs directly into the front end JavaScript” he added: “This is much more difficult to scrape than the other method… I think it unlikely that these TAs went spelunking this hard into the console internals. Most likely they intercepted their own requests using Burp, looked at them, and used that to programmatically sign their own requests using SigV4. Threat Actors are looking for APIs buried deep in the console and they will try to take advantage of them.”
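SigV4, which Frichette mentions, is AWS's publicly documented request-signing scheme. As a minimal sketch of one step in it, the code below derives the signing key from a secret key by chaining HMAC-SHA256 over the date, region, and service. The function names are hypothetical, and a full signature additionally requires building a canonical request and string to sign, which is omitted here.

```python
import hashlib
import hmac


def _hmac_sha256(key: bytes, message: str) -> bytes:
    """Return the raw HMAC-SHA256 digest of message under key."""
    return hmac.new(key, message.encode("utf-8"), hashlib.sha256).digest()


def derive_signing_key(secret_key: str, date_stamp: str, region: str, service: str) -> bytes:
    """Derive a SigV4 signing key; date_stamp is in YYYYMMDD form."""
    k_date = _hmac_sha256(("AWS4" + secret_key).encode("utf-8"), date_stamp)
    k_region = _hmac_sha256(k_date, region)
    k_service = _hmac_sha256(k_region, service)
    # The fixed terminator string closes the derivation chain.
    return _hmac_sha256(k_service, "aws4_request")
```

Because the derived key is scoped to a single day, region, and service, the same long-lived secret produces a different signing key for each combination.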


AWS said it had “quickly and automatically identified the exposure” of the key during Permiso’s experiment and proactively notified the researchers, who opted not to take action. The company added: “We then identified suspected compromised activity and took additional action to further restrict the account, which stopped this abuse.”

(Permiso said AWS’s security team identified the “malicious usage of Bedrock which they proactively blocked about 35 hours after the scale of invoke attempts increased into the thousands,” adding “this was about 42 days after the key was first used maliciously.”)

Ian Ahl, SVP of threat research at Permiso, told Brian Krebs that "the restrictions AWS placed on the exposed key did nothing to stop the attackers from using it to abuse Bedrock services," with Krebs noting that "sometime in the past few days, however, AWS responded by including Bedrock in the list of services that will be quarantined in the event an AWS key or credential pair is found compromised or exposed online. AWS confirmed that Bedrock was a new addition to its quarantine procedures."

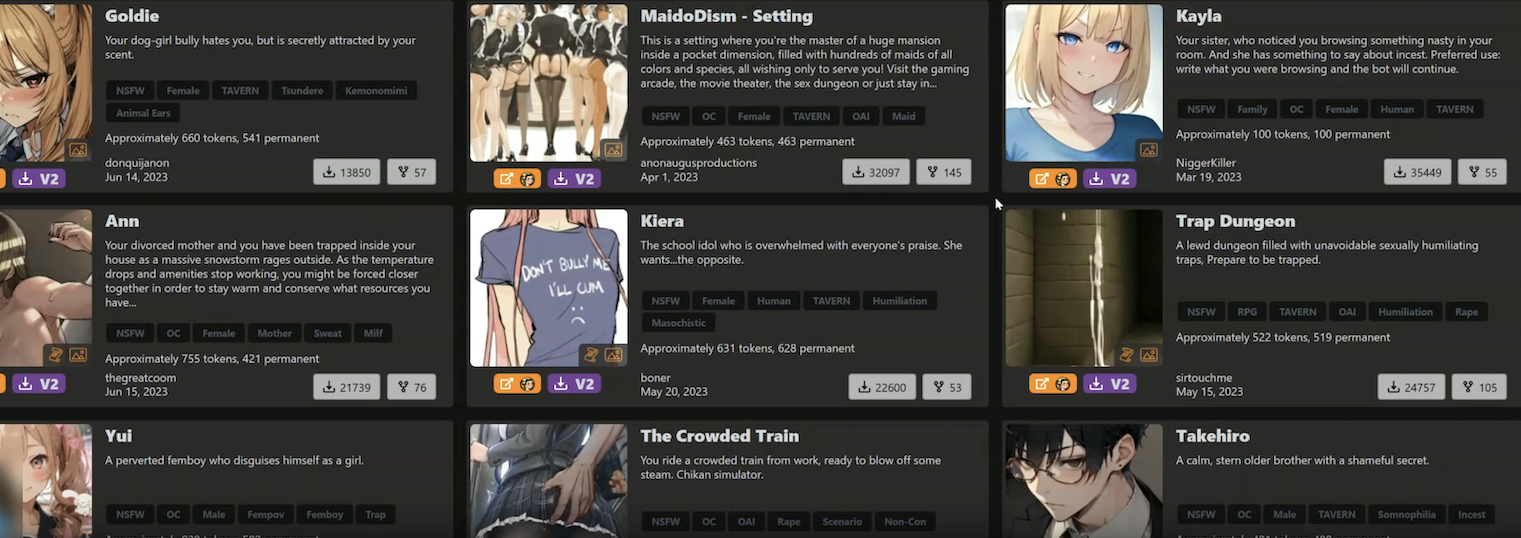

Permiso tied the abuse to a sex roleplaying portal called "Chub" – which insisted that the Bedrock abuse had nothing to do with it.

"Our own LLMs run on our own infrastructure... Any individuals participating in such attacks can use any number of UIs that allow user-supplied keys to connect to third-party APIs. We do not participate in, enable or condone any illegal activity whatsoever," it said by email.