OpenAI describes its latest ChatGPT model as a whizz at "complex reasoning tasks" that is "a significant advancement" and "represents a new level of AI capability."

But not everyone agrees.

A top academic once described as "the Mozart of math" has issued a gently brutal review of OpenAI o1 after setting it a series of complex tasks.

The model, dubbed "Strawberry", uses reinforcement learning and chain-of-thought reasoning to generate more analytical responses.

"I have played a little bit with OpenAI's new iteration of GPT... which performs an initial reasoning step before running the LLM," Terence Tao, Professor of Mathematics at the University of California, Los Angeles, wrote on Mathstodon, a Mastodon instance for people in the numbers game. "It is certainly a more capable tool than previous iterations, though still struggling with the most advanced research mathematical tasks.

"The experience seemed roughly on par with trying to advise a mediocre, but not completely incompetent, graduate student. However, this was an improvement over previous models, whose capability was closer to an actually incompetent graduate student."

Tao tested o1 with a series of problems that would make many human brains bleed. During the first analysis, GPT was asked to answer a "vaguely worded mathematical query" which could be solved by identifying a theorem from the literature and then applying it.

"Previously, GPT was able to mention some relevant concepts but the details were hallucinated nonsense," Tao wrote. "This time around, Cramer's theorem was identified and a perfectly satisfactory answer was given."

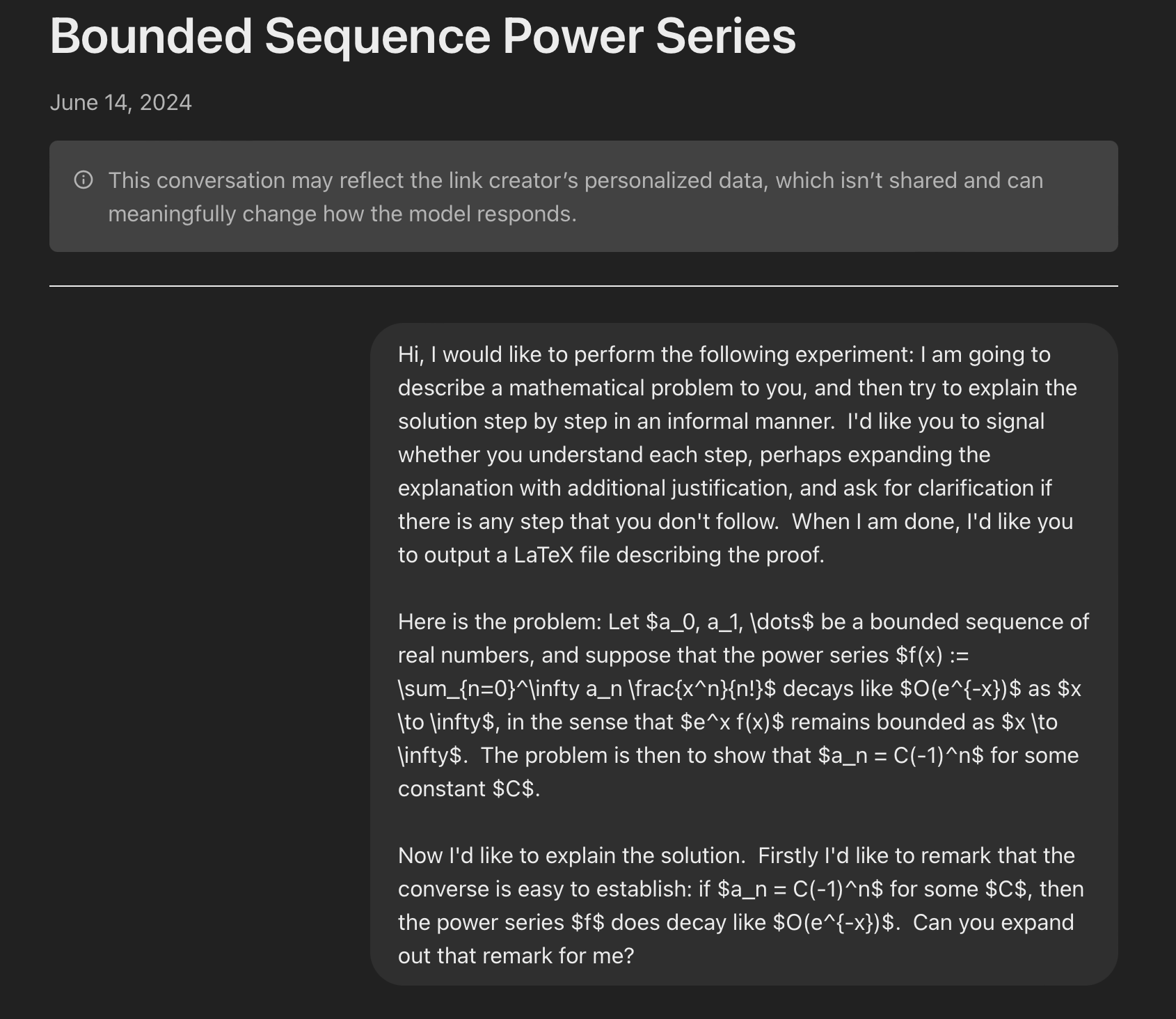

Tao then stepped up the complexity a bit, asking about a bounded sequence power series. You can see the results here and the query in the screenshot below.

"Here the results were better than previous models, but still slightly disappointing: the new model could work its way to a correct (and well-written) solution *if* provided a lot of hints and prodding, but did not generate the key conceptual ideas on its own, and did make some non-trivial mistakes," Tao added.

However, the human advantage may not last forever.

"It may only take one or two further iterations of improved capability (and integration with other tools, such as computer algebra packages and proof assistants) until the level of 'competent graduate student' is reached, at which point I could see this tool being of significant use in research level tasks," Tao continued.

He also said the model "understood the task well and performed a sensible initial breakdown of the problem" when asked to produce a result using the programming language Lean.

"I could imagine a model of this capability that was specifically finetuned on Lean and Mathlib, and integrated into an IDE [integrated development environment], being extremely useful in formalization projects," Tao concluded.

One of the advantages of using machines over humans is that they are less likely to take offence at incautiously phrased sentences.

Perhaps cognisant of all the very real feelings out there looking for reasons to be hurt, Tao issued an update to his analysis today, apologising for giving "the incorrect (and potentially harmful) impression" that human graduate students could be "reductively classified according to a static, one-dimensional level of 'competence'."

"This was not my intent at all," he said.

Tao pointed out that the ability to contribute to an existing research project is a "relatively minor" part of life as a maths student. A mathematician who is poor in this area but "excels in other dimensions such as creativity, independence, curiosity, exposition, intuition, professionalism, work ethic, organization, or social skills" can "end up being a far more successful and impactful mathematician than one who is proficient at assigned technical tasks but has weaknesses in other areas."

"Human students learn and grow during their studies, and areas in which they initially struggle with can become ones in which they are quite proficient after a few years," he said.

"In contrast, while modern AI tools have some ability to incorporate feedback into their responses, each individual model does not truly have the capability for long term growth, and so can be sensibly evaluated using static metrics of performance. However, I believe such a fixed mindset is not an appropriate framework for judging human students, and I apologize for conveying such an impression."