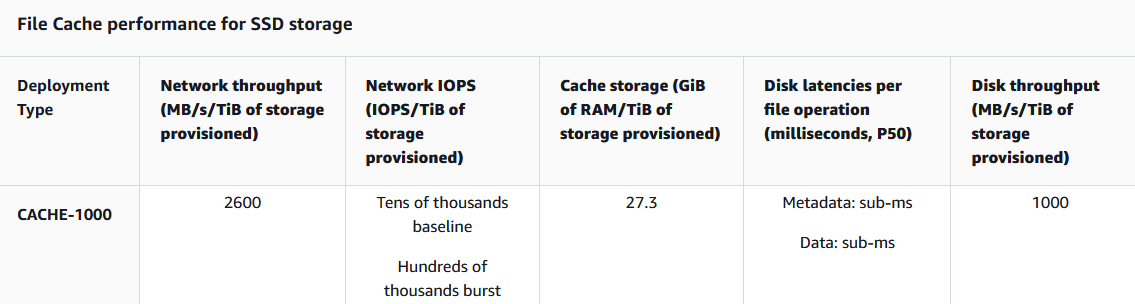

AWS has launched a new fully managed service, Amazon File Cache – a new high-speed in-memory cache on AWS for “processing file data stored in disparate locations, including on-premises” that is built on the open source Lustre file system. The service has a pacy baseline throughput capacity of 1,000 MB/s per TiB of cache.

In a blog announcing the new service on September 30 Amazon pointed to potential use across “cloud bursting” (pushing workloads to cloud when your own infrastructure is maxed out or unavailable) and hybrid workflows in media and entertainment, financial services, health and life sciences, microprocessor design, energy, etc.

Amazon File Cache lets users connect their applications access to files using the POSIX interface. (Those wanting to connect to on-premises file servers must ensure that they support the NFSv3 protocol and will need to set up an AWS Direct Connect or VPN connection between the on-premises network and the Amazon VPC.)

The managed service comprises file servers that the clients communicate with, and a set of disks attached to each file server that stores cache data. Each file server employs an in-memory cache to enhance performance for the most frequently accessed data. When a client accesses data that's stored in the in-memory cache, the file server doesn't need to read it from disk, reducing latency and increasing the total amount of throughput.

See also: Unusual bedfellows: Microsoft, AWS team up on...

The service automatically releases the less recently used cached files to ensure the most active files are available in the cache for your applications. Any of the caches can be concurrently accessed by thousands of compute instances. Because it supports concurrent access to the same file or directory from thousands of compute instances, users can do rapid data checkpointing (saving a snapshot of a state) from app memory to storage, which is a common technique in high performance computing (HPC), as Amazon’s user guide puts it.

Lustre is a parallel file system first developed at Carnegie Mellon and now used in some leading supercomputing/HPC systems. The scale of the sort of folks potentially using this new service makes its pricing somewhat more understandable (storage is a not-inconsiderable $1.330 GB/month, the pricing page shows.)

(As one commentator, Orbital Sidekick’s Andrew Guenther had noted: “Who the hell is Amazon File Cache for? I saw the announcement and was hoping for a managed storage gateway. We use storage gateway as an NFS interface for S3 with a 10TB cache and it costs ~$1k/month. That same cache is $13k on File Cache. What?!”)

Amazon says you can run it from RHEL, CentOS, SUSE Linux, and Ubuntu: “The service is also compatible with x86 based Amazon Elastic Compute Cloud (EC2) instances and Arm-based Amazon EC2 instances powered by the AWS Graviton2, and Amazon Elastic Container Service (ECS) container instances. With Amazon File Cache, you can mix and match the instance types and Linux AMIs that are connected to a single cache…”

“This is gonna help *loads* of people (including, but not even remotely limited to #HPC folks)” said AWS’s Brendan Bouffler, Head of Developer Relations, HPC Engineering, adding to The Stack that it uses “the same file syncing tech we built into FSx for Lustre, which enables it to map a Lustre fs [file system] over an S3 bucket (or several, in fact). I love it when HPC stuff goes mainstream. Feels like we’re moving the needle a lot.”

Amazon File Cache is now generally available (GA) in Canada (Central), Dublin, Frankfurt, London, Singapore, Sydney, Tokyo and US East (North Virginia, Ohio) and West (Oregon). The user guide is here.

The Stack reached out to AWS for further insight but was drawing a blank as we published. We’d welcome further views from potential users as well as those involved in the build. Email our team here.