"The claim that cloud is always 80% more efficient than on-premise? That's been disproven," says Mark Butcher of Posetiv Cloud, talking about the exaggerated "greenwashing" claims made by cloud service providers.

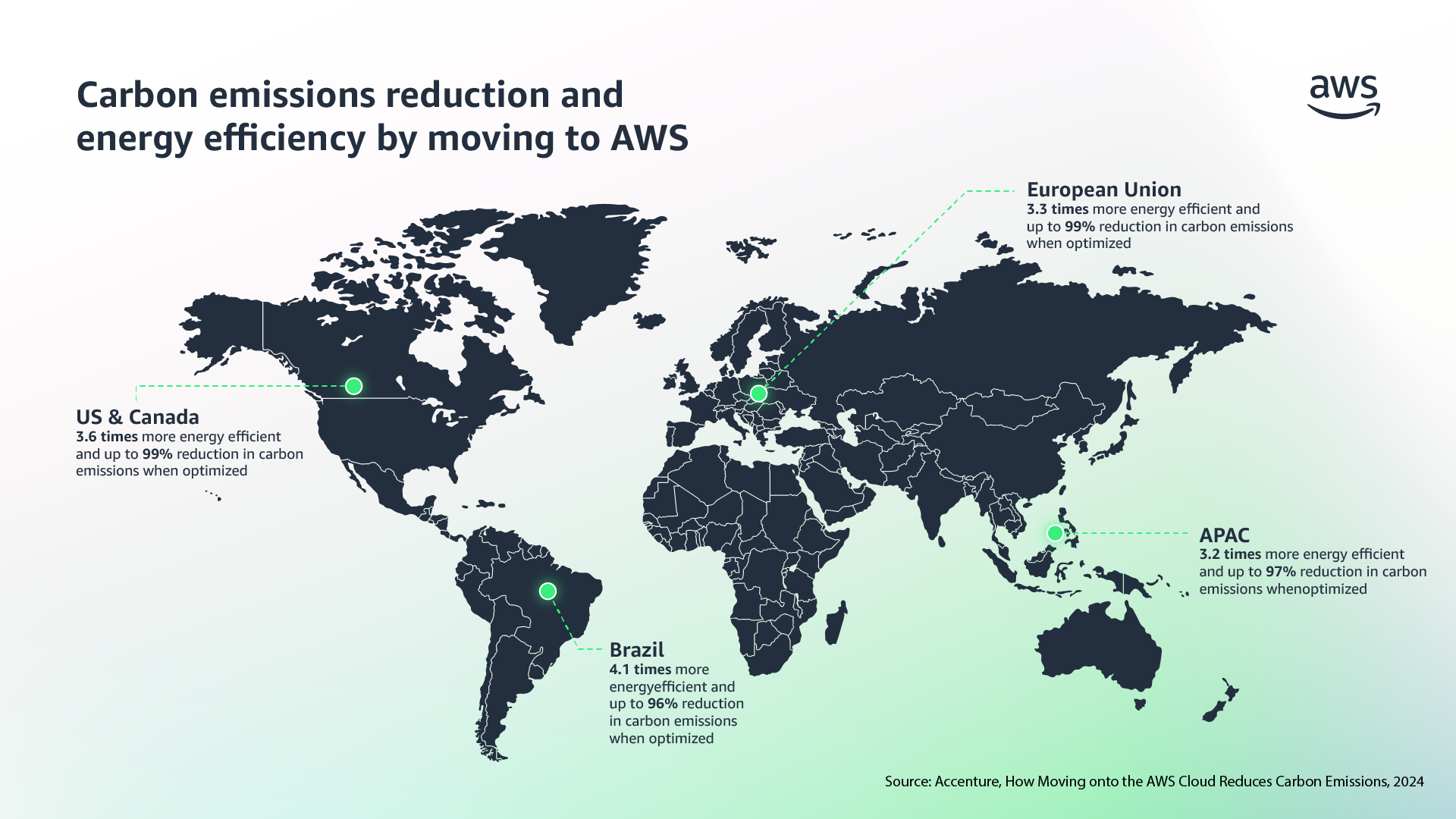

Butcher spoke to The Stack in light of a new report released by AWS and Accenture. Among its more striking claims, the report states that when AI workloads are optimized on AWS, the associated carbon footprint can be reduced by up to 99%.

The report focused on compute-heavy workloads and compared two sub-scenarios. The first compared an on-premises deployment with the Lift-and-Shift Scenario, in which the workload was migrated to AWS as-is, without any optimization.

The second compared the Lift-and-Shift Scenario with the AWS-Optimized Scenario, in which the workload was migrated to AWS and then optimized by leveraging a modernized AWS architecture and purpose-built silicon.

It's through these optimised scenarios that AWS estimates the possible jump in efficiency. It notes the example of Illumina, a health and genomics company that reduced its carbon footprint by an estimated 89% after optimising on AWS.

AWS wrote: "For compute-heavy workloads, potential carbon emissions reductions of running AI workloads on AWS versus on-premises were assessed by analyzing the operational and embodied emissions of a representative workload provided by AWS. Accenture found when optimizing compute-heavy workloads on AWS, organizations can reduce their associated carbon footprint across several geographical regions by up to 99%."

Butcher, whose company helps customers "achieve a successful cloud-native strategy with agile and automated high-quality development", alleges there is an issue with the hyperbole of comparing an extreme worst-case scenario with the best-case scenario.

"I'm not saying that Amazon run their services badly. They run hyper-efficient, optimised and well-run data centres and platforms, but then so do lots of other people. They're not 99% better at running things than everyone else. Maybe 10% and maybe 15%? But they're not 99%," he says.

However, AWS does have case studies that appear to back up its claims. It reported that Baker Hughes, an energy company, reduced its carbon footprint by 99%, "setting an example for the community."

Butcher and other critics of cloud greenwashing are concerned about sustainability claims. Cloud hyperscalers are quite reliant on carbon offsets, and companies (including AWS) report zero Scope 2 emissions (carbon produced indirectly, such as by utility companies supplying energy) in markets where they have agreements to buy renewable energy.

James Hall, head of GreenOps at Greenpixie, a provider and integrator of cloud emissions data, said AWS' emission claims could be true, but only if it has measured them "in totality". In practice, this is not easy to do.

He told The Stack that there are "literally thousands of different variables" which go into calculating total emissions.

"In my experience people need transparency to trust numbers," he said. "What's gone into the calculation, the source of those numbers, how they're applied. These kinds of details are missing from all the hyperscalers in my opinion.

"I'd care more about how much electricity and water you could save running AI workloads in AWS, then the accounting exercise that carbon calculations can sometimes become wouldn't matter, as hyperscalers are typically a lot more energy and cooling efficient."

Hall also said efficiencies are "only part of the picture" and added: "AI is a prime example of Jevons' Paradox, whereby an increase in efficiency in resource use ultimately results in an increase in resource consumption, rather than the decrease you'd logically expect."

As AI increases the workload on data centres and pushes up carbon emissions, better reporting and transparency from cloud providers are needed. Google has reported that its emissions are up by 48% in the past five years due to the AI boom.

Microsoft's emissions are up by 29% from its 2020 baseline, and Amazon is yet to release its latest numbers. At the current rate, AI is projected to drive power consumption up by 160% by 2028.

Responding to Google's findings, Google CSO Kate Brandt and Benedict Gomes, SVP, Learning & Sustainability, said that they were "actively working through" significant challenges, and they were committed to the systemic level change needed for a sustainable future.

For companies themselves, there are ways to make smarter choices when it comes to the cloud. Migrating to a cloud service provider that is carbon conscious is only one part of the puzzle. Selecting a data centre region with low carbon intensity is another.
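As a rough illustration of why region choice matters, operational emissions can be approximated as energy used multiplied by the carbon intensity of the local grid. The sketch below assumes that simple model; the region names and intensity figures are hypothetical placeholders, not data from AWS or any other provider, and it ignores embodied emissions, offsets and renewable purchase agreements.

```python
# Hypothetical grid carbon intensities in grams CO2e per kWh.
# These values are illustrative placeholders, not real provider data.
GRID_INTENSITY_G_PER_KWH = {
    "region-a": 50.0,
    "region-b": 300.0,
    "region-c": 600.0,
}


def estimate_emissions_kg(energy_kwh: float, intensity_g_per_kwh: float) -> float:
    """Operational emissions in kg CO2e: energy (kWh) x intensity (g/kWh) / 1000."""
    return energy_kwh * intensity_g_per_kwh / 1000.0


def lowest_carbon_region(intensities: dict[str, float]) -> str:
    """Return the region name with the lowest grid carbon intensity."""
    return min(intensities, key=intensities.get)


if __name__ == "__main__":
    workload_kwh = 1_000.0  # assumed energy use of the same workload in each region
    for region, intensity in sorted(GRID_INTENSITY_G_PER_KWH.items()):
        print(region, estimate_emissions_kg(workload_kwh, intensity), "kg CO2e")
    print("lowest:", lowest_carbon_region(GRID_INTENSITY_G_PER_KWH))
```

Under these assumed numbers, the same workload produces twelve times more operational emissions in the dirtiest region than in the cleanest, without any change to the workload itself.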

There is a role for FinOps to play too. Reports indicate that nearly 30% of cloud spend is wasted. Cutting that waste through optimisation can both save money and limit emissions.

"You should have a really hardened optimization practice. You should have standards where you're modernising all your applications and services to make them truly cloud native. You should have a culture which discourages waste," says Butcher.

We have written to AWS for comment.