There was one mention of Gandalf, one mention of Odin, three mentions of digital transformation, four references to ponies, five of containers, seven mentions of stars, 56 references to security, 65 mentions of customers and, of course, 224 mentions of data. But the phrase “cloud costs” – a growing customer concern – did not come up once in AWS CEO Adam Selipsky’s keynote address at the re:Invent conference in Vegas this week.

Selipsky’s keynote – replete as it was with some esoteric metaphors and eclectic new service announcements – did touch on cost, with the AWS CEO telling his audience that “if you’re looking to tighten your belt the cloud is the place to do it”, but those naively hoping for a more substantial announcement were out of luck.

To at least one audience member, that was a real oversight. As Rahul Subramaniam put it: “AWS had a large opportunity that they didn’t fully capitalize on. I wish Adam had done more to directly address customers' concerns about the cloud being more expensive. He discussed customers saving anywhere from 40-70%, but with no detail. I wish he said more about how they achieved these savings. Customers need greater clarity.”

The phrasing may be pithy, but Subramaniam has deep experience here. As Head of Innovation at Texas-based ESW Capital, a private equity firm controlled by billionaire Joseph Liemandt, he has been a significant AWS customer since 2007, at one point managing more than 45,000 AWS accounts across a sprawling portfolio of software companies whose AWS bills ran, in aggregate, to hundreds of millions of dollars.

“There are over 100,000 APIs…”

Speaking with The Stack, he said that it was in AWS’s interests to get people to optimise their basic cloud spending so they can allocate more to the higher order services AWS is building: “They don’t just want you to buy EC2, they want you to get up the stack; that’s where the value is for them.”

AWS does make an effort to help customers optimise spend, but both inherent service complexity and its culture stop it from doing more, he suggested: “They’ve got AWS Cost Management, AWS Cloud Economics, a whole bunch of podcasts… But AWS is not just a simple service. There are over 100,000 different APIs as part of the AWS service catalogue and each has a best practice associated with it.”

He added: “AWS has also never, as part of their culture, wanted to tell customers what to do. They're like, ‘here are the building blocks, go build whatever you want!’ That was great for the initial adopters who figured stuff out with trial and error. But today, when you have 100,000 APIs, navigating best practice is incredibly challenging and most customers do not understand how to be efficient in that complex world. You’ve basically [now] got a situation where almost everyone is contending with runaway costs.”

Cutting AWS costs: The Airbnb case

Adam Selipsky did reference Airbnb cutting AWS costs in his November 29 keynote.

“When the bottom fell out of the hospitality industry in 2020, Airbnb was able to take down their cloud spending by 27%. Then when the world began to emerge from the worst pandemic, Airbnb was able to quickly turn on the cloud infrastructure that they needed, and continue to drive innovation,” he told delegates.

Luckily for those interested in the details, Airbnb’s engineering team has written about this extensively.

They've emphasised that one big win was moving from manual EC2 instance orchestration to dynamically flexing their cloud clusters using the Kubernetes Cluster Autoscaler. (Previously, as Airbnb said, “each instance of a service was run on its own machine, and manually scaled to have the proper capacity to handle traffic increases. Capacity management varied per team and capacity would rarely be un-provisioned once load dropped…”)

(Kubernetes automates the operational tasks of container management and orchestrates the compute, networking, and storage requirements of your workloads. As Patrick McFadin of DataStax puts it to The Stack: “Kubernetes is the equalizer. It allows users to declare what they need from the parts supplied by cloud providers. It's getting us closer to infrastructure conforming to the application and not the other way around. Every cloud provider offers a Kubernetes service. When AWS announced theirs, I knew K8s had won.”)
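The scale-up, scale-down principle that replaced Airbnb’s manually sized machines can be sketched in a few lines. This is a deliberately simplified model, not the Cluster Autoscaler’s actual algorithm (which reacts to pods that cannot be scheduled and removes under-utilised nodes whose pods can run elsewhere); the function name, per-node capacity and node-count bounds are hypothetical.

```python
import math


def desired_node_count(requested_vcpus: float, vcpus_per_node: float,
                       min_nodes: int = 1, max_nodes: int = 50) -> int:
    """Hypothetical sizing rule: just enough nodes to fit the vCPUs that
    workloads request, clamped to the node group's minimum and maximum."""
    if vcpus_per_node <= 0:
        raise ValueError("vcpus_per_node must be positive")
    needed = math.ceil(requested_vcpus / vcpus_per_node)
    return max(min_nodes, min(max_nodes, needed))


# Traffic peak: 120 vCPUs requested on 8-vCPU nodes.
print(desired_node_count(120, 8))  # 15
# Load drops: capacity is released instead of sitting idle.
print(desired_node_count(10, 8))   # 2
```

The clamp lets capacity follow demand in both directions, which is what Airbnb says its manually scaled services rarely did once load dropped.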

Focussed on cutting AWS costs? Let spot instances be your friend

Airbnb also built dashboards with Apache Superset, an open source data exploration and visualisation platform, that ingested data from the AWS Cost and Usage Report to help create a “clear accounting structure for all AWS usage aimed largely at understanding holistically how we consume and utilize AWS services”. It also tapped AWS Savings Plans (a flexible pricing model that allows customers to save up to 72% on Amazon EC2 and AWS Fargate in exchange for committing to a consistent amount of compute usage over a fixed term) and made greater use of spot instances, including for its continuous integration environment, among many other steps.
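The basic roll-up behind such dashboards can be sketched without Superset. A minimal example, assuming a CSV export that uses the legacy Cost and Usage Report column names `lineItem/ProductCode` and `lineItem/UnblendedCost`; the sample rows and figures are made up for illustration.

```python
import csv
import io
from collections import defaultdict
from typing import TextIO

# Made-up sample rows; a real Cost and Usage Report has many more columns.
SAMPLE_CUR = """lineItem/ProductCode,lineItem/UnblendedCost
AmazonEC2,120.50
AmazonS3,14.25
AmazonEC2,80.00
AWSLambda,3.10
"""


def cost_by_service(report: TextIO) -> dict[str, float]:
    """Sum unblended cost per AWS service, largest spend first."""
    totals: dict[str, float] = defaultdict(float)
    for row in csv.DictReader(report):
        totals[row["lineItem/ProductCode"]] += float(row["lineItem/UnblendedCost"])
    return dict(sorted(totals.items(), key=lambda kv: kv[1], reverse=True))


print(cost_by_service(io.StringIO(SAMPLE_CUR)))
# {'AmazonEC2': 200.5, 'AmazonS3': 14.25, 'AWSLambda': 3.1}
```

Grouping by other columns (account, team tag, usage type) follows the same pattern and is what turns a raw bill into the kind of accounting structure Airbnb describes.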

Simply making greater use of spot instances (spare EC2 capacity that AWS sells at a steep discount to its On-Demand price, but can reclaim at short notice) can generate huge savings on AWS bills.

The UK’s own Home Office learned the cost of over-provisioning the hard way early in its cloud journey. Its approach had resulted in AWS service bloat: pre-production and production environments were set up in the same way to simplify development, so unused development services were running as if they were full production services.

(After a root-and-branch review, one of its teams went from running 20 containers in production to two, which scaled up only when demand increased; it had also been using only 10 to 20% of the resources it had booked.)

Darío Fernández Barrio, Head of DevOps at NYSE-listed Wallbox, an EV charging supplier, also told The Stack this week that the company cut its AWS bill by 70% through intelligent use of spot instances. (These are best used, as AWS says, “if you can be flexible about when your applications run and if your applications can be interrupted [and are] well-suited for data analysis, batch jobs, background processing, and optional tasks.”)
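How much a fleet saves depends on how much of it can tolerate running on spot capacity. A rough sketch of the blended rate; the $0.40 hourly price, the 70% spot discount and the 80% spot share are hypothetical figures, since real spot prices vary by instance type, Availability Zone and over time.

```python
def blended_hourly_cost(on_demand_price: float, spot_discount: float,
                        spot_share: float) -> float:
    """Average hourly cost per instance when `spot_share` of the fleet
    runs on spot capacity priced at (1 - spot_discount) of On-Demand."""
    if not (0 <= spot_discount <= 1 and 0 <= spot_share <= 1):
        raise ValueError("discount and share must be fractions between 0 and 1")
    spot_price = on_demand_price * (1 - spot_discount)
    return spot_share * spot_price + (1 - spot_share) * on_demand_price


# Hypothetical: $0.40/hour On-Demand, 70% spot discount, 80% of the fleet on spot.
cost = blended_hourly_cost(0.40, 0.70, 0.80)
saving = 1 - cost / 0.40
print(f"${cost:.3f}/hour, {saving:.0%} saving")  # $0.176/hour, 56% saving
```

With the whole fleet on spot the saving equals the spot discount itself; keeping interruption-sensitive workloads on On-Demand capacity pulls the overall figure back down.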

Sudhir Kesavan, SVP at Wipro FullStride Cloud Services at global IT consultancy Wipro Ltd., emphasised that whichever cloud provider customers use, there are best practices that can help dramatically trim costs.

He told The Stack: “[A common failing is] lack of runtime sizing and choosing appropriate mechanics of dynamically downsizing/upsizing based on workloads. This needs sophisticated tooling, measurements and interventional automation to change runtime parameters. E.g. dynamically changing the IOPS configurations from, say, 5,000 to 50,000 per second based on computational needs.”

He added: “[There's also] not enough control and automation of storage dynamics. E.g. enterprises have not yet fully implemented techniques for moving data from flash to traditional disk. Unused or rarely used data, or data with low throughput or response-time requirements, can be offloaded to conventional disk. Optimization techniques across storage are still evolving. This includes the DB vendors as well.”
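Kesavan’s two examples, resizing IOPS with demand and moving cold data off flash, boil down to simple decision rules; the “sophisticated tooling” he mentions is what applies them safely. The sketch below covers only the decision logic and is hypothetical throughout: the function names, the 20% headroom, the use of his 5,000 to 50,000 IOPS range as bounds and the 30-day cold-data threshold are all assumptions. Acting on the results would go through a storage provider’s own APIs or tiering features.

```python
from datetime import datetime, timedelta


def target_iops(observed_peak_iops: int, headroom_pct: int = 20,
                floor: int = 5_000, ceiling: int = 50_000, step: int = 1_000) -> int:
    """Hypothetical runtime-sizing rule: provision the observed peak plus
    headroom, rounded up to a whole step and clamped to [floor, ceiling]."""
    wanted_x100 = observed_peak_iops * (100 + headroom_pct)  # peak * (1 + headroom), scaled by 100
    unit = step * 100
    steps = (wanted_x100 + unit - 1) // unit  # integer ceiling division
    return max(floor, min(ceiling, steps * step))


def choose_tier(last_accessed: datetime, now: datetime,
                cold_after: timedelta = timedelta(days=30)) -> str:
    """Hypothetical tiering rule: data untouched for `cold_after` or longer
    moves to conventional disk; anything more recent stays on flash."""
    return "disk" if now - last_accessed >= cold_after else "flash"


print(target_iops(2_000))   # quiet period: 5000 (the floor)
print(target_iops(30_000))  # busy period: 36000
print(target_iops(60_000))  # capped at 50000

now = datetime(2022, 12, 2)
print(choose_tier(datetime(2022, 12, 1), now))  # flash
print(choose_tier(datetime(2022, 6, 15), now))  # disk
```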


ESW Capital’s Rahul Subramaniam meanwhile got so tired of having to optimise spot instances, configure services most effectively and generally focus on cutting AWS costs that he built a tool to automate this and a year ago decided to take it to market – calling it “CloudFix”. As he told The Stack: “We basically took a whole lot of AWS recommendations for what the ideal way to use particular services are. And we went through a whole lot of very generic suggestions that they were making, created concrete versions of them, got them ratified by AWS, and just implemented an automation that you can execute systematically over and over again.”

He added: “A lot of enterprises still think of AWS as a data centre. That's literally not where the value of AWS is. AWS adds value in all of the higher order services, when you can leverage the elasticity of the cloud.

“That means if your requirements are minuscule, use minuscule amounts of resources; if suddenly you have a peak, like 10 minutes later, you should be able to scale up to meet that peak. All of that value is actually unlocked in what I call the higher order services. It's not in actually getting an EC2 instance, it's in leveraging things like Lambda, and DynamoDB, and all the serverless stuff that's out there. That is what allows you to be elastic. The more of these higher order services that you leverage, the more you can achieve cost benefits that are orders of magnitude better than literally just buying the instance and operating everything yourself. So getting up that stack, I think, is something that every organisation should be doing…”

That’s something that Adam Selipsky would no doubt agree with.

And although it didn’t warrant a mention in the AWS CEO’s keynote – which did touch on a raft of other new services – one bit of AWS news that quietly slipped through the net may actually be useful for cutting AWS costs.

AWS Compute Optimizer analyses your AWS resource utilisation data collected by Amazon CloudWatch from the past 14 days – or three months if you cough up some cash – to identify the optimal resource configuration for your EC2 instances, EBS volumes, AWS Auto Scaling groups, and AWS Lambda functions.

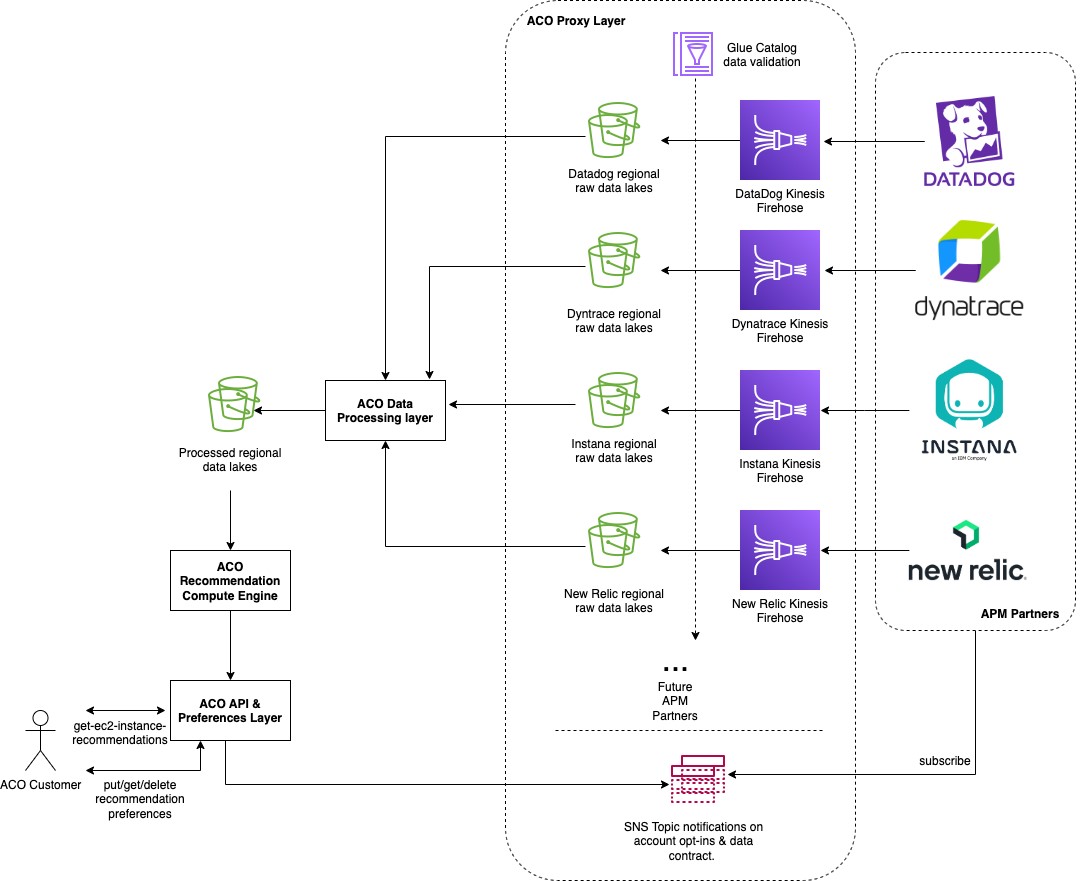

It’s been a “closed” AWS service until now, but this week the cloud colossus opened it up to four partners.

Datadog, Dynatrace, Instana, and New Relic have all designed and released integrated “push” models that feed EC2 memory utilisation data to Compute Optimizer. This insight is fed into the AWS recommendation engine to provide customers with “more intelligent and cost saving recommendations.” (Customers of these respective companies will need to deploy a software agent to their AWS environments. This will then discover new EC2 instances as they come online – without the need for manual configuration – and, as Dynatrace puts it, let users “see how much memory is being used per EC2 instance, in addition to contextual insights into what apps are running on each instance [and the impact of] overutilized memory among services and dependencies.”)

As AWS noted this week: “This design is a departure from the traditional pull model with calling public APIs using customer quotas to retrieve the required metric data. With this push data platform, the Compute Optimizer team built a scalable interface that APM [Application Performance Monitoring] providers can develop against… creating a shared responsibility to deliver intelligent recommendations to our customers.”
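Compute Optimizer’s recommendation models are AWS’s own and are not reproduced here, but the basic idea of rightsizing from a utilisation history can be illustrated. A simplified sketch, assuming a hypothetical ladder of instance sizes (the m5 vCPU counts are real, the rest is illustrative), a 95th-percentile demand statistic and an 80% utilisation target. It looks only at CPU, whereas the memory data now pushed by the APM partners would add a second dimension.

```python
# Hypothetical ladder of instance sizes: (name, vCPUs), smallest first.
SIZES = [("m5.large", 2), ("m5.xlarge", 4), ("m5.2xlarge", 8), ("m5.4xlarge", 16)]


def p95(values: list[float]) -> float:
    """95th percentile by the nearest-rank method (integer arithmetic for the rank)."""
    if not values:
        raise ValueError("need at least one sample")
    ordered = sorted(values)
    rank = (95 * len(ordered) + 99) // 100  # ceil(0.95 * n)
    return ordered[rank - 1]


def recommend_size(busy_vcpu_samples: list[float], target_util: float = 0.8) -> str:
    """Smallest size that keeps p95 demand at or below target_util of its vCPUs."""
    demand = p95(busy_vcpu_samples)
    for name, vcpus in SIZES:
        if demand <= vcpus * target_util:
            return name
    return SIZES[-1][0]  # demand exceeds the ladder: stay on the largest size


# Hypothetical samples of busy vCPUs on an over-provisioned m5.4xlarge.
samples = [2.0] * 18 + [3.0, 6.0]
print(recommend_size(samples))  # m5.xlarge
```

Using a high percentile rather than the single peak means one brief spike (the 6.0 sample here) does not pin the instance at a larger size; whether that trade-off is acceptable depends on how the workload behaves under contention.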

Every little helps, and it shouldn’t take too long before the ROI is clearly demonstrable. But as with all things, it’s not a magic bullet: as Airbnb has emphasised, cutting AWS costs also requires cultural change. Its approach was to “give teams the necessary information to make appropriate tradeoffs between cost and other business drivers to maintain their spend within a certain growth threshold” and then “incentivize engineers to identify architectural design changes to reduce costs, and also identify potential cost headwinds.”

No wizards, starlight, or ponies necessary.