When Dynatrace gathered the “brightest minds in the modern cloud” in Amsterdam this week for its Innovate EMEA conference, the word that was supposed to sum up the event was “observability”. But underneath the surface, a darker, more challenging concept repeatedly reared its head: complexity.

Enterprises are drowning in data. Software is more important to the functioning of human civilisation than ever before, yet is terrifyingly vulnerable and has created vast numbers of points of failure in critical systems across the planet. Meanwhile, Generative AI (GenAI) is creating novel risks that are difficult to predict, let alone mitigate. It’s a mess - and Dynatrace wants to help its customers untangle it.

Rick McConnell, CEO, kicked off his keynote using the phrase: “Observability and application security are mandatory for business resilience.” That’s already a change from the past, in which they were “becoming” mandatory. Today, he insisted, both buzzwords are non-optional business practices.

Speaking to McConnell at a media briefing before the event, we asked him to set the scene by considering if we're currently experiencing a dramatic and threatening exponential increase in organisational fragility - an inability to prevent and deal with shocks.

“Yes, is the short answer,” he said. “There’s an explosion of data and billions of interconnected data points…. combined with an unbelievable shortage in IT resources across the planet to keep things running the way they used to.

“I think of it as a curve. Look back maybe a decade ago, maybe even five years ago, and we had at least a rough alignment of the resources needed to manage a software environment. I believe that equilibrium is beginning to break.

"The cloud was the foray into breaking that equilibrium. Gen AI is going to finish the job because you just can't keep up, and it is getting harder and harder to keep systems working as a result because many more things can go wrong.”

Complexity is not just the mind-killer that wipes out productivity. It’s a threat to organisations of all sizes, ranging from a tiny one-man company struggling to keep track of finances to the biggest enterprises trying to set sail atop a churning, fathomless data lake. In Joseph Tainter’s famous book, The Collapse of Complex Societies, a civilisation ends when its investments in complexity face diminishing returns. Put simply: things fall apart when they get too complex. And it's not easy to hold them together.

Customers' complex concerns

Dynatrace’s CEO has been meeting customers across the world who have been deeply unsettled by the CrowdStrike mega-outage. Whether they’re focused on keeping the electricity grid up and running or managing a manufacturing process involving complicated international supply chains, they need their software to work perfectly all the time. They are also concerned about security and want to reduce incidents, drive down downtime and ensure swift recovery following a disaster.

Other trends such as cloud modernisation, surging demand for GPUs, the rise of AI and an ever-worsening security landscape in which “threats are never more pervasive” are placing further pressure on resources, putting productivity and customer satisfaction at risk.

“The one element all these giant megatrends have in common is that they are causing an explosion of data and a massive increase in its complexity, making the processes that organisations have used to manage software almost impossible to deploy effectively,” McConnell told us.

"When I first graduated from college, my first job was as a programmer in investment banking, doing financial engineering. I was programming in Fortran on IBM mainframes,” he added. “Back then, if my software didn’t work, I knew exactly what the problem was: it was my code. The IBM mainframe was stable - its operating system didn’t change, and if the code didn’t work, it was because of something I’d done.

"Today, it’s a completely different environment. We have microservices, containers, complex software environments, compiled libraries, cloud-based systems, and routers, all interacting across numerous points of presence worldwide. At any given moment, thousands of things could go wrong, often without any change in the code itself. This complexity means you need a master controller, an overarching system to monitor and diagnose the entire ecosystem. It’s impossible to keep track manually, so that’s precisely what we built.”

To address complexity and improve resilience, Dynatrace provides comprehensive automated observability, centralizing logs, traces, metrics, and user data into a single, contextualized platform that quickly identifies and isolates issues. Through causal, predictive, and generative AI it enables rapid root cause analysis, proactively detects anomalies, and offers insights through natural language interfaces, reducing incidents and resolution time.

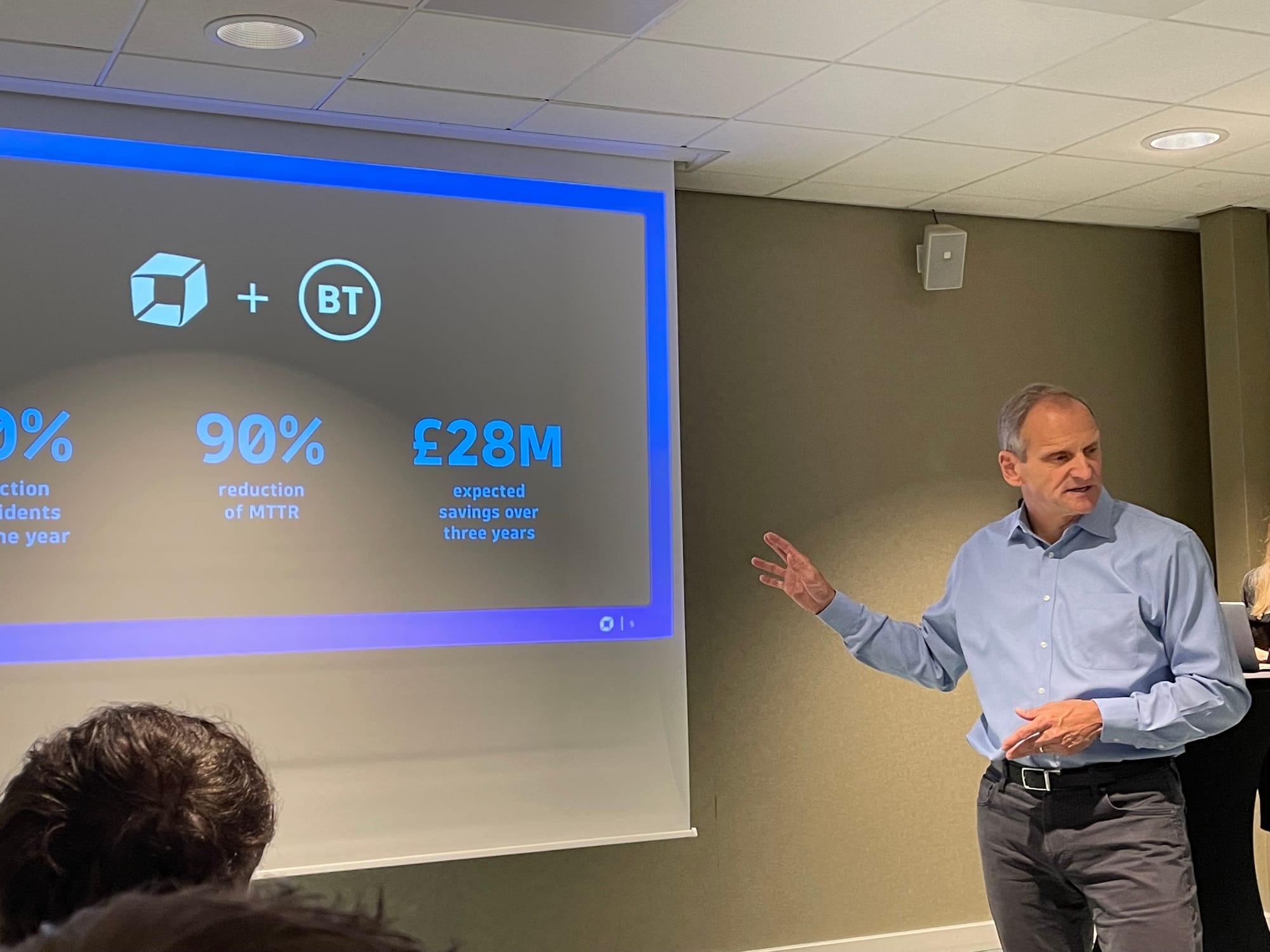

The example of success cited most prominently at the event was BT, which had been using 16 observability tools to manage its technology estate. Dynatrace gave BT the observability it needed through a single platform offering real-time insights across its stack, with BT aiming for £28m in cost savings by 2027. Automated issue detection and AI-driven root cause analysis have reduced MTTI (mean time to investigate) by up to 90%. BT also uses Dynatrace’s AIOps capabilities to support proactive optimization. Ultimately, it is aiming for a zero-ops, self-healing model.

Delivering change

One of the most interesting case studies of the conference came from Mateusz Piasta, Lead Site Reliability Engineer at InPost. His firm makes the automated delivery boxes you often see outside petrol stations here in the UK. From a standing start, there are now more than 60,000 across Europe.

When Piasta began working at InPost in 2019, the CEO tasked him with optimising its logistics network to handle five times more parcels daily - a total of 10 million. At the time, its data was spread across various tools like Kibana, Grafana, and other platforms.

To achieve the CEO’s ambitious goal, InPost built a performance testing environment that mirrored its production setup, deploying Dynatrace on-premises for both production and testing. Initially, the system simply couldn’t handle the 10 million parcel load, but Dynatrace helped InPost identify and resolve issues in areas such as database optimization and Java tuning.

“We also preemptively installed Dynatrace in pre-production environments, enabling us to catch bugs early," Piasta said. "This success led to numerous optimization projects across our systems.

"We realized that scaling further would require our own logistics system, so we started building it in 2021. Now, we use a scalable system both on-prem and in the cloud. Dynatrace provides a centralized data source for infrastructure, network, and app monitoring, which has been a huge asset for our daily operations."

InPost migrated 24 Kubernetes clusters at a pace of two per week with "minimal disruption", completing the migration in just three months.

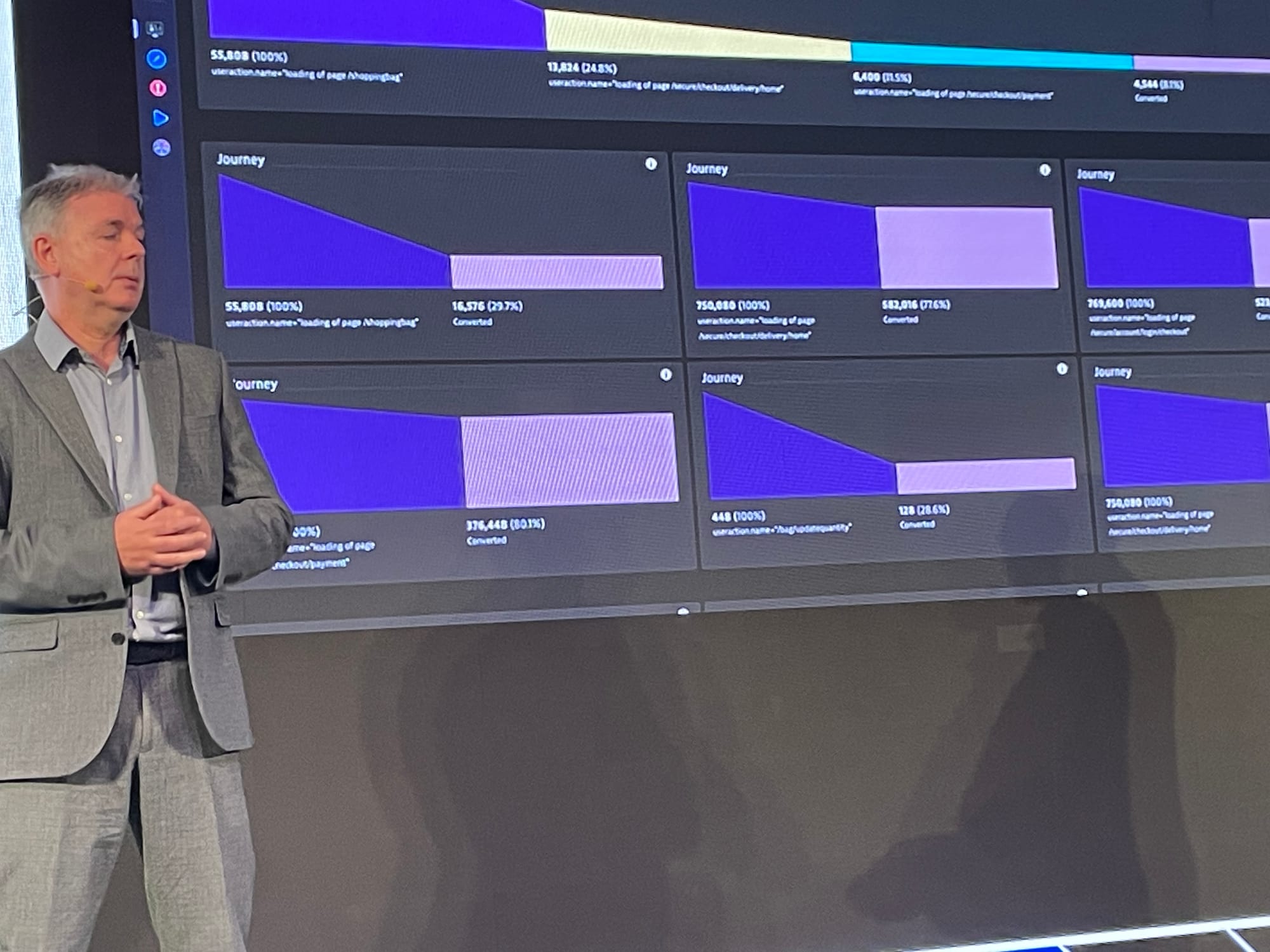

Alex Preston, Technology Development Director at the British retailer Next, is also using Dynatrace to support his company’s international expansion. “Several years ago, I was focused on managing a retail website; now, I oversee a global retail platform with a vast range of brands, third-party partnerships, and an expanding product offering in various markets, currencies, and languages," he said. "With this growth comes complexity, and insights are crucial.”

Next operates a “total platform” which lets third-party brands leverage the Next infrastructure and enlist its help in building websites, warehousing stock, fulfilling orders, and providing customer service. Currently, there are around 270,000 products on the main Next site, which “necessitates new data and insights to get the right products to the right audience”.

The retailer now uses AI to ensure the “right products are presented to the right audience” in the correct language and currency. It employs Dynatrace’s real-user monitoring to identify translation issues across markets in real time and uses the Opportunity Insights application to “understand which metrics have the greatest influence on specific market segments".

“This lets us set more meaningful KPIs - like time to first byte for a quick-loading experience for mobile users from TikTok,” Preston says. “Observability tools enable us to launch in new markets faster, gathering feedback to make rapid improvements. This agility is essential for rolling out products and improving navigation.”

The retailer also deploys synthetic monitoring across every major market within its environments to detect performance regressions before production and address them proactively. It’s experimenting with tech that lets customer support access session replay whenever a shopper reports a problem, track back through their interaction, find the problem, and fix it. Additionally, it can identify when actors use leaked passwords or email lists on the Next website, triggering immediate password resets and blocking access to compromised accounts.
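To make the idea of catching a performance regression before production more concrete, here is a minimal sketch in Python. It is not Next's or Dynatrace's implementation: the market codes, the sample values, the 10% tolerance and the function names are all assumptions made for illustration. It compares the 95th-percentile time to first byte (TTFB) from synthetic checks against a baseline for each market and flags any market that has slowed down by more than the tolerance.

```python
from statistics import quantiles


def p95(samples):
    """Return the 95th percentile of a list of TTFB samples (milliseconds)."""
    # n=20 yields 19 cut points; the last one is the 95th percentile.
    # method="inclusive" keeps the result within the observed range.
    return quantiles(samples, n=20, method="inclusive")[-1]


def find_regressions(baseline, candidate, tolerance=0.10):
    """Return markets whose candidate p95 exceeds the baseline p95 by more than `tolerance`."""
    regressions = {}
    for market, base_samples in baseline.items():
        cand_samples = candidate.get(market)
        if not cand_samples:
            continue  # no synthetic runs recorded for this market yet
        base_p95 = p95(base_samples)
        cand_p95 = p95(cand_samples)
        if cand_p95 > base_p95 * (1 + tolerance):
            regressions[market] = (base_p95, cand_p95)
    return regressions


# Hypothetical TTFB samples (ms) from synthetic checks, keyed by market.
baseline = {
    "uk": [120, 130, 125, 140, 135, 128, 132, 138],
    "de": [150, 160, 155, 158, 162, 149, 157, 161],
}
candidate = {
    "uk": [122, 131, 127, 139, 136, 129, 133, 137],
    "de": [210, 220, 215, 230, 225, 218, 222, 228],
}

regressions = find_regressions(baseline, candidate)
for market, (before, after) in regressions.items():
    print(f"{market}: p95 TTFB rose from {before:.0f} ms to {after:.0f} ms")
```

With these made-up numbers only the "de" market is flagged. A check like this would typically run against a pre-production build so that a slowdown blocks the release rather than reaching shoppers.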

Regulation vs innovation: Dynatrace's European presence

One of the unique aspects of Dynatrace’s global operations is that it has an engineering headquarters by the River Danube in Linz, Austria, where the story began almost 20 years ago in 2005.

Speaking to The Stack, Bernd Greifeneder, CTO and Founder, light-heartedly said: “I want to show that you can build better software here than on the West Coast. We are a very technical team and we always strive to do things differently than the norm.

“So, for instance, we decided to reinvent the way you store and process data and realized: ‘Hey, this is a data lakehouse.’ And it was the first and only one on the market that does graph, events, metrics, user sessions and much more. We built this because we had those requirements and could not find anything. We don't go with the mainstream approaches like everyone else. We take the harder route.”

Europe is a particularly fertile place to tackle the enduring challenge of new regulations, a reliable generator of complexity and an issue Greifeneder knows is “on the minds of the CIOs, CTOs and chief transformation officers right now”.

Businesses in the EU are currently preparing for DORA (the Digital Operational Resilience Act), which requires extensive, ongoing compliance with strict cybersecurity and risk management standards.

“This is not a once-in-a-year accreditation process that is painful, takes a couple of days and is then done,” Greifeneder said. “The key difference is that this is an ongoing effort. You need to be compliant every day and you need to be able to prove it. This is totally game-changing.”

Dynatrace concluded that DORA is not only about security but also heavily focused on availability, which, to Greifeneder, “defines resilience”.

"DORA emphasises the convergence of observability and security data to prove availability consistently,” he continued, claiming that Dynatrace’s contextual analytics, AI, and platform capabilities save enterprises about 80% of manual tasks through automation, enabling them to continuously demonstrate compliance.

“This is transformative, and to support it, we’ve created a compliance assistant within Dynatrace that consolidates observability data from hyperscalers, security, infrastructure, and more into a real-time, comprehensive report,” he said. “This would not be possible without automated observability to ensure an accurate, up-to-date view of the production environment.”

To do this, Dynatrace auto-discovers environments, automatically mapping out topology and building on robust observability and security. It provides a shared pool of contextualized data, enabling multiple applications to manage infrastructure, end-user experience, and massive volumes of log data (reportedly hundreds of terabytes per day) within a single system.

Furthermore, security is embedded to “protect applications from the inside” and analyse threats in real time. Software delivery is also “key”, so the entire software delivery chain is monitored to discover problems preemptively and enable the delivery of secure, resilient updates.

“With all of this contextualized data, Dynatrace offers significant value not only for technical teams like operations, DevOps, SRE, and development, but also for business teams," Greifeneder continued. "It helps enterprises innovate and modernize digital services by connecting technical data with business analytics, optimizing processes like order-to-cash and procure-to-pay, and ultimately enhancing customer experiences.”

Greifeneder set out three key benefits of the Dynatrace platform. The first is predictive AI that anticipates and prevents issues, combined with remediation checks within cloud environments and a final human approval step if necessary.
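The predict, remediate and approve flow described above can be sketched roughly as follows. This is an illustrative Python example, not Dynatrace's predictive AI: the straight-line forecast, the disk-usage figures, the 85% threshold and every name in the code are assumptions chosen to show the shape of the workflow, including the optional human sign-off.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Sequence


@dataclass
class Remediation:
    description: str
    needs_approval: bool


def forecast_linear(samples: Sequence[float], steps_ahead: int) -> float:
    """Extrapolate evenly spaced samples with a least-squares straight line.

    Requires at least two samples. A stand-in for a real predictive model.
    """
    n = len(samples)
    mean_x = (n - 1) / 2
    mean_y = sum(samples) / n
    slope = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(samples)) / sum(
        (x - mean_x) ** 2 for x in range(n)
    )
    intercept = mean_y - slope * mean_x
    return intercept + slope * (n - 1 + steps_ahead)


def plan(samples: Sequence[float], threshold: float, steps_ahead: int) -> Optional[Remediation]:
    """Propose a remediation only if the forecast reaches the threshold."""
    predicted = forecast_linear(samples, steps_ahead)
    if predicted < threshold:
        return None
    return Remediation(
        description=f"expand volume (forecast {predicted:.1f}% >= {threshold}%)",
        needs_approval=True,  # hypothetical policy: capacity changes need sign-off
    )


def execute(remediation: Remediation, approve: Callable[[Remediation], bool]) -> str:
    """Apply the remediation, pausing for human approval when the policy requires it."""
    if remediation.needs_approval and not approve(remediation):
        return "rejected"
    return "applied"


# Hypothetical hourly disk-usage readings, in percent.
usage = [70, 72, 74, 76, 78]
proposal = plan(usage, threshold=85, steps_ahead=6)
if proposal is not None:
    print(proposal.description, "->", execute(proposal, approve=lambda r: True))
```

The approval callback is where a real system would page an operator or open a ticket. Low-risk actions could set `needs_approval` to false and run unattended.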

The ability to handle “massive data volumes” is a second key capability. Dynatrace’s Grail data lakehouse is designed to handle petabytes and beyond. It provides “safe, structured, and layered data access” so that technical and business users can “access exactly what they need in real-time, with compliance ensured.” Finally, Dynatrace is “extending to the left”, shifting responsibilities toward development by giving DevOps, SRE, and development teams self-service capabilities.

“For me as a CTO, enemy number one is complexity,” he shared. “‘Always keep it simple’ is a standard principle I have always followed and I care about reducing the number of parts.”

Observability is the key to understanding complex systems and then taking control of them, Dynatrace believes, and its customers seem to agree. As Miguel Alava, general manager at AWS, said during his own contribution to the keynote: "If it cannot be observed, it cannot be measured. If it cannot be measured, it cannot be improved. Observability produces the insight and information needed to ensure we are running organisations in the right way."