Abu Dhabi’s Technology Innovation Institute (TII) has released “Falcon 3”, the latest version of its open-source large language model (LLM) series – trained on 14 trillion tokens but capable of operating on a single GPU.

TII’s Falcon 3 is licensed under an Apache 2.0-based license that “includes an acceptable use policy which promotes the responsible use of AI.”

The suite of models (Falcon3-1B, -3B, -7B and -10B) is currently text-only but will gain multi-modal capabilities as early as January 2025, TII said.

TII released its Falcon 2 family of models in May this year.

Falcon 3 uses a decoder-only architecture with FlashAttention-2. According to TII, it integrates Grouped Query Attention (GQA), in which groups of query heads share key and value heads, to minimize the memory needed for the key-value (KV) cache during inference and make operations faster and more efficient.
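To see why sharing key/value heads saves memory, the KV cache size can be estimated with simple arithmetic. The sketch below is illustrative only: the configuration (32 layers, 32 query heads, 8 KV heads, head dimension 128, 4,096-token context, 16-bit values) is a hypothetical example, not Falcon 3's published settings, and the helper names are made up for this article.

```python
def kv_cache_bytes(num_layers: int, num_kv_heads: int, head_dim: int,
                   seq_len: int, batch_size: int = 1, bytes_per_elem: int = 2) -> int:
    """Bytes needed to cache keys and values for every layer during decoding."""
    # Factor of 2: one cached tensor for keys and one for values.
    return 2 * num_layers * num_kv_heads * head_dim * seq_len * batch_size * bytes_per_elem


def kv_head_for_query(query_head: int, num_query_heads: int, num_kv_heads: int) -> int:
    """Index of the shared key/value head a given query head uses under GQA."""
    if num_query_heads % num_kv_heads != 0:
        raise ValueError("query heads must divide evenly into KV groups")
    group_size = num_query_heads // num_kv_heads
    return query_head // group_size


if __name__ == "__main__":
    # Hypothetical configuration, NOT Falcon 3's actual settings.
    layers, q_heads, head_dim, seq_len = 32, 32, 128, 4096
    mha = kv_cache_bytes(layers, q_heads, head_dim, seq_len)  # one K/V head per query head
    gqa = kv_cache_bytes(layers, 8, head_dim, seq_len)        # 8 K/V heads shared by 32 query heads
    print(f"MHA KV cache: {mha / 2**30:.2f} GiB")  # 2.00 GiB
    print(f"GQA KV cache: {gqa / 2**30:.2f} GiB")  # 0.50 GiB
    print("query head 5 uses KV head", kv_head_for_query(5, q_heads, 8))  # 1
```

With four query heads per shared key/value head, the cache shrinks by a factor of four in this example, which is the kind of saving that helps a model fit on a single GPU.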

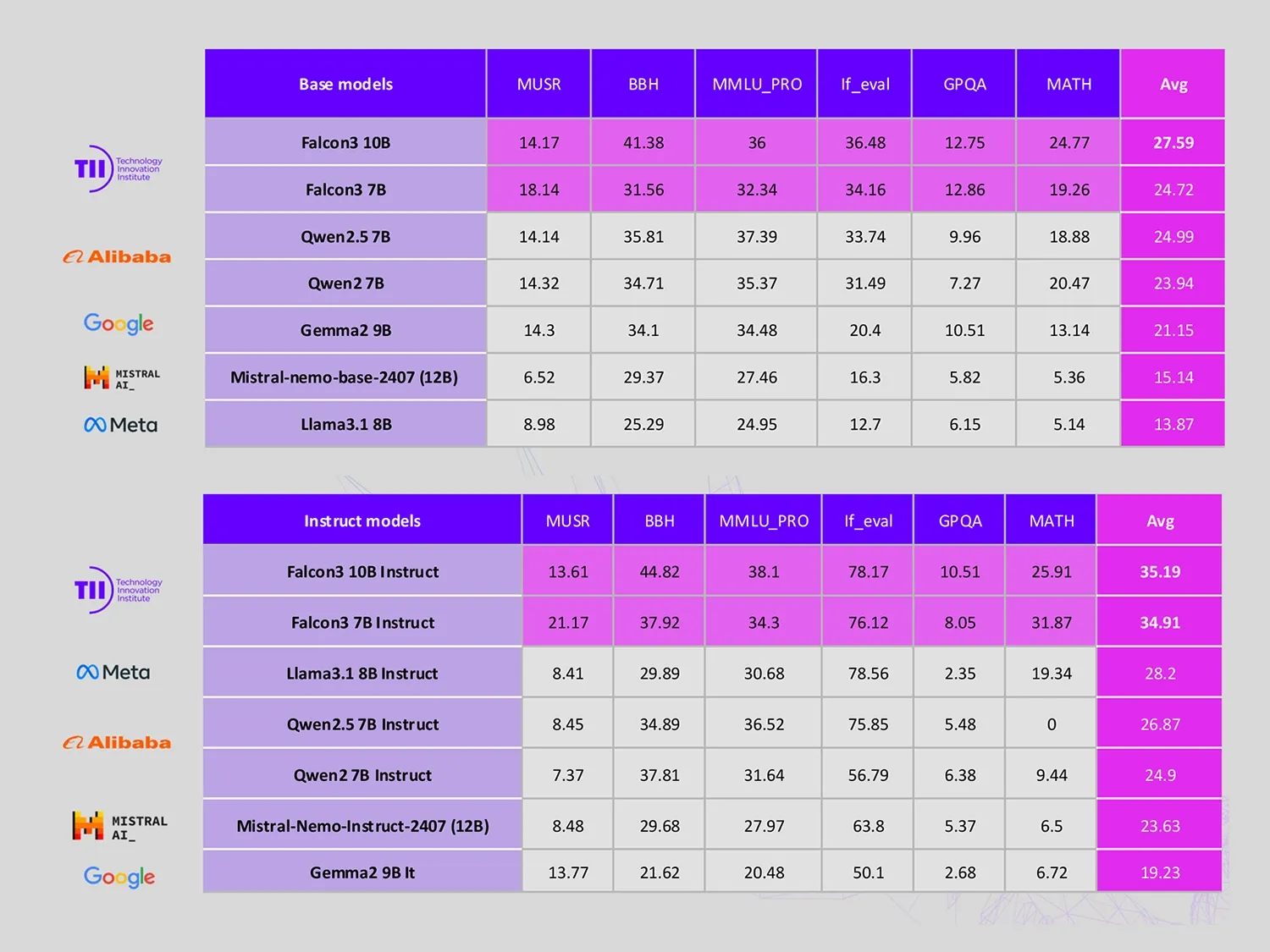

The models appear to perform well on multiple benchmarks: the 7B-Base version is on par with Alibaba's Qwen2.5-7B in the under-9B category, and the 10B-Base model is a category leader in the under-13B category. (TII chart below.)

"Falcon’s quantized [a way of making models faster and less memory-intensive] versions, such as GGUF, AWQ, and GPTQ (in int4, int8, and 1.58 Bitnet), make it highly efficient, even for resource-constrained environments," Abu Dhabi's TII explained. "Optimized for lightweight systems... with both Base and Instruct versions tailored for different needs. Falcon 3 models can be further customized through tools like vLLM, Llama.cpp, and MLX, ensuring seamless adoption for developers."
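As a rough illustration of what quantization does, the sketch below maps floating-point weights to 8-bit integers plus a single scale factor. It is plain round-to-nearest "absmax" quantization, chosen for simplicity; it is not the GPTQ or AWQ algorithms, nor the GGUF file format, that TII mentions, all of which handle scales and rounding more carefully. Function names are hypothetical.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor absmax quantization to int8 (round-to-nearest)."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    # One scale for the whole tensor; guard against an all-zero tensor.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Clamp to the symmetric int8 range [-127, 127].
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q: list[int], scale: float) -> list[float]:
    """Approximate reconstruction of the original weights."""
    return [v * scale for v in q]


if __name__ == "__main__":
    weights = [0.5, -1.27, 0.0, 1.0, 0.033]
    q, scale = quantize_int8(weights)
    restored = dequantize_int8(q, scale)
    print("int8 values:", q)
    print("max error:", max(abs(a - b) for a, b in zip(weights, restored)))
    # Storage: 2 bytes per weight at 16-bit precision vs 1 byte per int8 weight.
    print("fp16 bytes:", 2 * len(weights), "int8 bytes:", len(q))
```

Each weight now needs one byte instead of two (or four at 32-bit precision), at the cost of a rounding error of at most half the scale per weight; int4 and ~1.58-bit variants push the same trade-off further.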

A quick play with the demo by The Stack showed that the good engineers of Abu Dhabi have no more managed to do away with rampant hallucinations than those of the US or China, but Falcon 3's lightweight capabilities and benchmark performance will interest many.

Dr. Hakim Hacid, Chief Researcher of TII’s AI and Digital Science Research Center (AIDRC), said in a canned statement: “AI is fast evolving, and we are glad to be an active part of this journey. Falcon 3 pushes the boundaries of small LLMs further, contributing to the open-source community by providing access to a better-performing AI.”